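Readers familiar with neural networks will know that adding hidden layers generally makes a model more expressive and can raise prediction accuracy, at the cost of more computation. Building on the previous post, we now add one hidden layer by hand. The code is below (mainly adapted from the tutorial "Multilayer Perceptron from Scratch"):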

from mxnet import gluon

from mxnet import ndarray as nd

import matplotlib.pyplot as plt

import mxnet as mx

from mxnet import autograd

def transform(data, label):

return data.astype('float32')/255, label.astype('float32')

mnist_train = gluon.data.vision.FashionMNIST(train=True, transform=transform)

mnist_test = gluon.data.vision.FashionMNIST(train=False, transform=transform)

def show_images(images):

n = images.shape[0]

_, figs = plt.subplots(1, n, figsize=(15, 15))

for i in range(n):

figs[i].imshow(images[i].reshape((28, 28)).asnumpy())

figs[i].axes.get_xaxis().set_visible(False)

figs[i].axes.get_yaxis().set_visible(False)

plt.show()

def get_text_labels(label):

text_labels = [

'T 恤', '長 褲', '套頭衫', '裙 子', '外 套',

'涼 鞋', '襯 衣', '運動鞋', '包 包', '短 靴'

]

return [text_labels[int(i)] for i in label]

data, label = mnist_train[0:10]

print('example shape: ', data.shape, 'label:', label)

show_images(data)

print(get_text_labels(label))

batch_size = 256

train_data = gluon.data.DataLoader(mnist_train, batch_size, shuffle=True)

test_data = gluon.data.DataLoader(mnist_test, batch_size, shuffle=False)

num_inputs = 784

num_outputs = 10

#增加一層包含256個節點的隱藏層

num_hidden = 256

weight_scale = .01

#輸入層的引數

W1 = nd.random_normal(shape=(num_inputs, num_hidden), scale=weight_scale)

b1 = nd.zeros(num_hidden)

#隱藏層的引數

W2 = nd.random_normal(shape=(num_hidden, num_outputs), scale=weight_scale)

b2 = nd.zeros(num_outputs)

#引數變多了

params = [W1, b1, W2, b2]

for param in params:

param.attach_grad()

#啟用函式

def relu(X):

return nd.maximum(X, 0)

#計算模型

def net(X):

X = X.reshape((-1, num_inputs))

#先計算到隱藏層的輸出

h1 = relu(nd.dot(X, W1) + b1)

#再利用隱藏層計算最終的輸出

output = nd.dot(h1, W2) + b2

return output

#Softmax和交叉熵損失函式

softmax_cross_entropy = gluon.loss.SoftmaxCrossEntropyLoss()

#梯度下降法

def SGD(params, lr):

for param in params:

param[:] = param - lr * param.grad

def accuracy(output, label):

return nd.mean(output.argmax(axis=1) == label).asscalar()

def _get_batch(batch):

if isinstance(batch, mx.io.DataBatch):

data = batch.data[0]

label = batch.label[0]

else:

data, label = batch

return data, label

def evaluate_accuracy(data_iterator, net):

acc = 0.

if isinstance(data_iterator, mx.io.MXDataIter):

data_iterator.reset()

for i, batch in enumerate(data_iterator):

data, label = _get_batch(batch)

output = net(data)

acc += accuracy(output, label)

return acc / (i+1)

learning_rate = .5

for epoch in range(5):

train_loss = 0.

train_acc = 0.

for data, label in train_data:

with autograd.record():

output = net(data)

#使用Softmax和交叉熵損失函式

loss = softmax_cross_entropy(output, label)

loss.backward()

SGD(params, learning_rate / batch_size)

train_loss += nd.mean(loss).asscalar()

train_acc += accuracy(output, label)

test_acc = evaluate_accuracy(test_data, net)

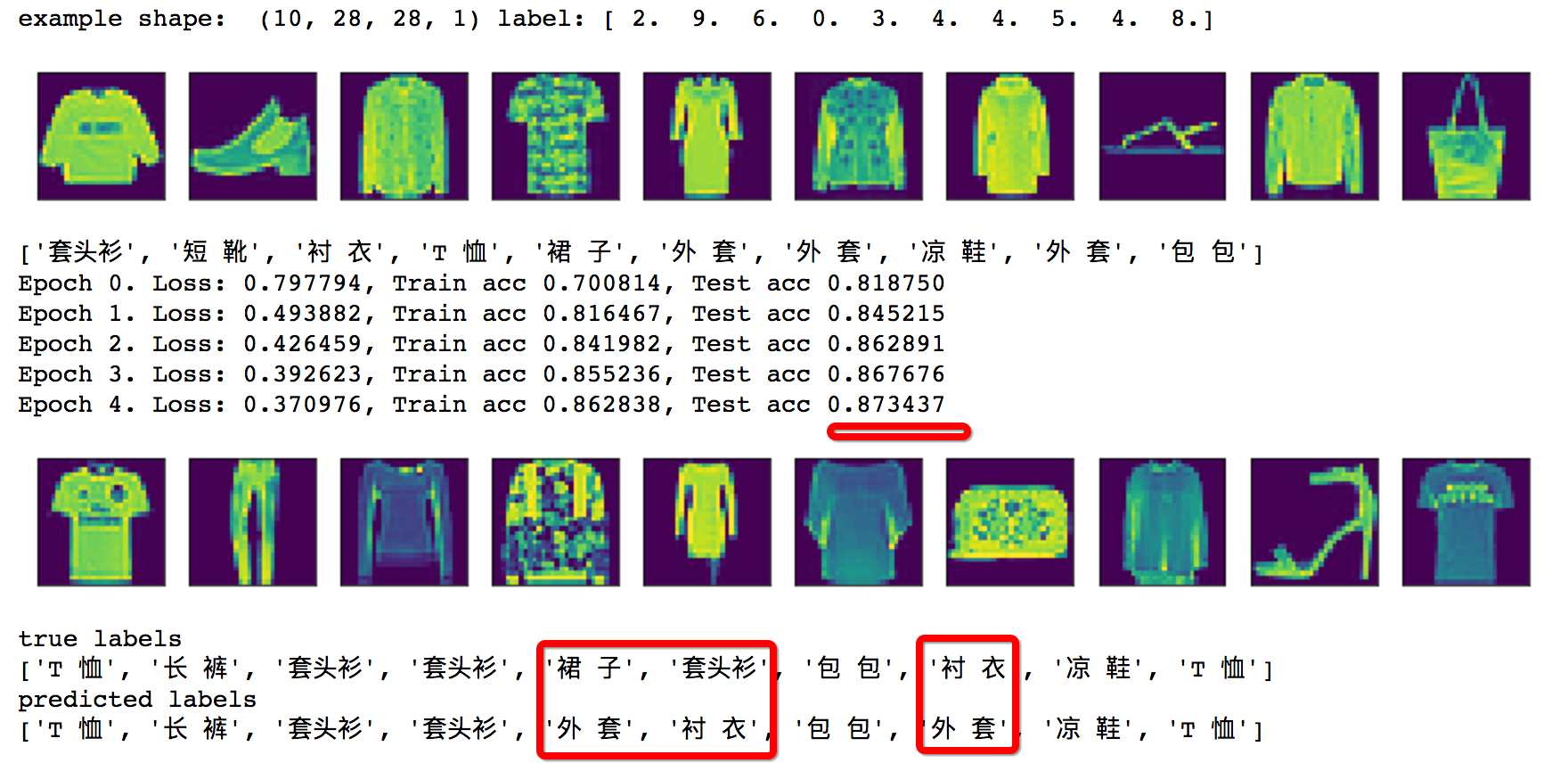

print("Epoch %d. Loss: %f, Train acc %f, Test acc %f" % (

epoch, train_loss / len(train_data), train_acc / len(train_data), test_acc))

data, label = mnist_test[0:10]

show_images(data)

print('true labels')

print(get_text_labels(label))

predicted_labels = net(data).argmax(axis=1)

print('predicted labels')

print(get_text_labels(predicted_labels.asnumpy()))

Every change is marked with a comment. There are five main changes:

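1. One hidden layer with 256 nodes is added by hand.

2. With an extra layer there are more parameters: W1 and b1 connect the input to the hidden layer, and W2 and b2 connect the hidden layer to the output.

3. The model y = Wx + b is now computed layer by layer: first the hidden output h1 = relu(X·W1 + b1), then output = h1·W2 + b2.

4. Softmax and cross-entropy are merged into a single loss (SoftmaxCrossEntropyLoss). This avoids computing softmax, its logarithm, and its derivative as separate steps, and is numerically more stable.

5. The activation function is ReLU, which tends to converge faster (see: Deep learning series (7): Activation Functions).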
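To illustrate point 4, here is a small NumPy sketch. NumPy stands in for MXNet, and `naive_softmax_cross_entropy` and `fused_softmax_cross_entropy` are hypothetical names for illustration, not Gluon's actual implementation. Computing softmax first and then taking the log overflows to `nan` when a logit is large, while the fused form computes log-softmax directly with the log-sum-exp trick (subtracting the row maximum before exponentiating).

```python
import numpy as np

def naive_softmax_cross_entropy(logits, labels):
    # Softmax first, then log: exp() overflows to inf for large logits
    exp = np.exp(logits)
    probs = exp / exp.sum(axis=1, keepdims=True)
    return -np.log(probs[np.arange(len(labels)), labels])

def fused_softmax_cross_entropy(logits, labels):
    # Log-softmax via log-sum-exp: shift by the row max so exp() never overflows
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return -log_probs[np.arange(len(labels)), labels]

logits = np.array([[1000.0, 0.0, -1000.0],
                   [2.0, 1.0, 0.1]])
labels = np.array([0, 1])

with np.errstate(over="ignore", invalid="ignore"):
    naive = naive_softmax_cross_entropy(logits, labels)  # row 0 becomes nan
fused = fused_softmax_cross_entropy(logits, labels)      # finite for both rows
print(naive, fused)
```

Mathematically the two functions compute the same loss; they differ only in which intermediate values can overflow.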

Running it, the accuracy is noticeably higher than that of the original, purely hand-written version without a hidden layer.

Tip: following the same idea, we can add a second hidden layer by hand. The key code is below:

```python
...

# Add a hidden layer with 256 nodes
num_hidden1 = 256
weight_scale1 = .01

# Add another hidden layer with 512 nodes
num_hidden2 = 512
weight_scale2 = .01

# Parameters from the input layer to hidden layer 1
W1 = nd.random_normal(shape=(num_inputs, num_hidden1), scale=weight_scale1)
b1 = nd.zeros(num_hidden1)

# Parameters from hidden layer 1 to hidden layer 2, and from hidden layer 2 to the output
W2 = nd.random_normal(shape=(num_hidden1, num_hidden2), scale=weight_scale1)
b2 = nd.zeros(num_hidden2)
W3 = nd.random_normal(shape=(num_hidden2, num_outputs), scale=weight_scale2)
b3 = nd.zeros(num_outputs)

# There are more parameters now
params = [W1, b1, W2, b2, W3, b3]

...

# The model
def net(X):
    X = X.reshape((-1, num_inputs))
    # First compute the outputs of the two hidden layers
    h1 = relu(nd.dot(X, W1) + b1)
    h2 = relu(nd.dot(h1, W2) + b2)
    # Then use the last hidden layer to compute the final output
    output = nd.dot(h2, W3) + b3
    return output
```
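The same layer-by-layer computation extends to any number of hidden layers. The sketch below assumes NumPy in place of `mx.nd`, and its helpers `init_params`, `forward`, and `count_params` are hypothetical, not MXNet APIs. It loops over (W, b) pairs, applies ReLU to every hidden layer but not to the output scores, and counts parameters to make "more parameters" concrete.

```python
import numpy as np

def init_params(layer_sizes, weight_scale=0.01, seed=0):
    # One (W, b) pair per pair of adjacent layers, mirroring the MXNet initialization
    rng = np.random.default_rng(seed)
    return [(rng.normal(scale=weight_scale, size=(n_in, n_out)), np.zeros(n_out))
            for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])]

def relu(x):
    return np.maximum(x, 0)

def forward(X, params):
    # Flatten each image to a 784-vector, then apply the layers in order
    h = X.reshape(X.shape[0], -1)
    for i, (W, b) in enumerate(params):
        h = h @ W + b
        if i < len(params) - 1:  # ReLU on hidden layers only; the last layer outputs raw scores
            h = relu(h)
    return h

def count_params(params):
    return sum(W.size + b.size for W, b in params)

one_hidden = init_params([784, 256, 10])
two_hidden = init_params([784, 256, 512, 10])

X = np.zeros((4, 28, 28, 1))  # a dummy batch shaped like Fashion-MNIST images
print(forward(X, one_hidden).shape, count_params(one_hidden))
print(forward(X, two_hidden).shape, count_params(two_hidden))
```

For the layer sizes in this post, that is 784·256 + 256 + 256·10 + 10 = 203,530 parameters with one hidden layer and 337,674 with the extra 512-node layer; in both cases most of them sit in W1.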