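Reposting is welcome; please credit 徽滬一郎 as the source. This article analyzes the details of how a tuple is sent. Another article on this blog, 《bolt訊息傳遞路徑之原始碼解讀》 ("A source-code reading of the bolt message delivery path"), covers the receiving side. The two articles complement each other.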

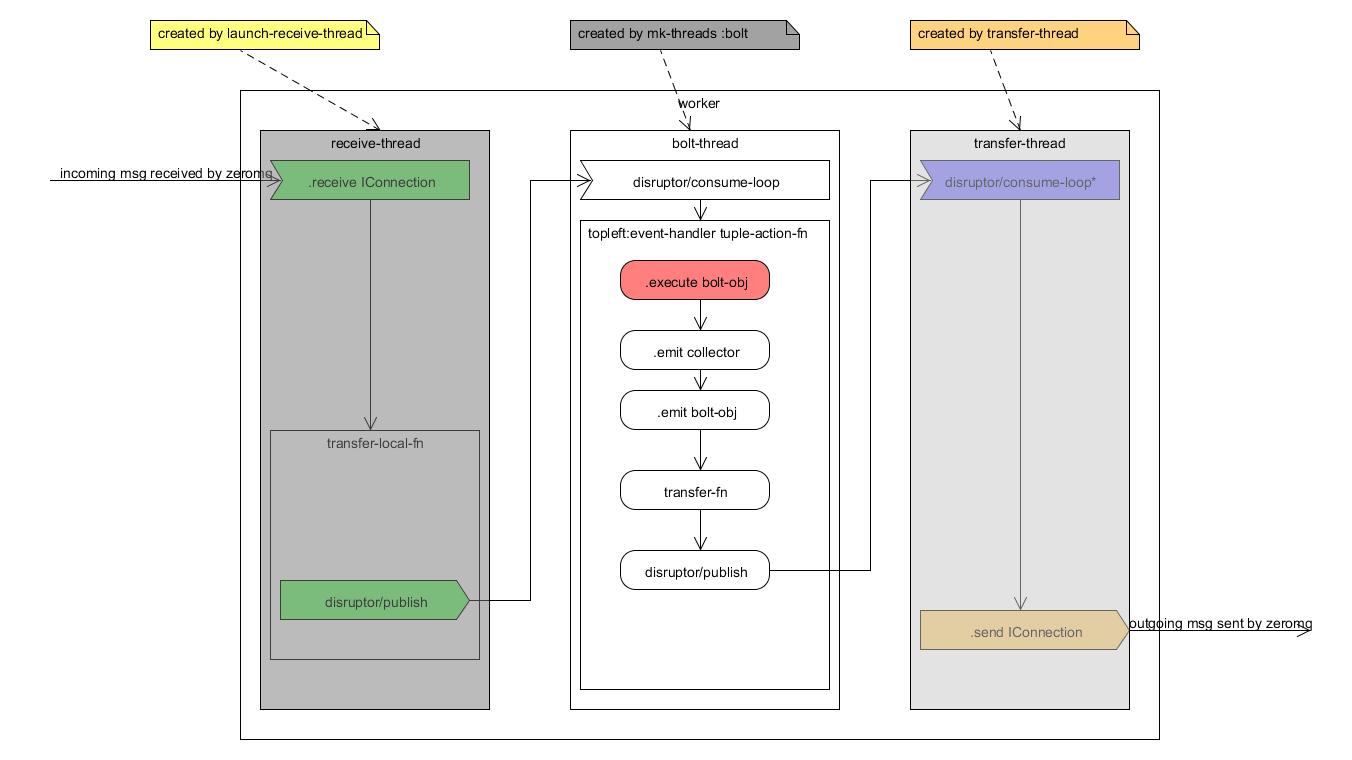

worker程式內訊息接收與處理全景圖

先上幅圖簡要勾勒出worker程式接收到tuple訊息之後的處理全過程

Creating and using IConnection

The `:bolt` implementation of mk-threads contains the following code. Its main job is to emit tuples:

```clojure
bolt-emit (fn [stream anchors values task]
            (let [out-tasks (if task
                              (tasks-fn task stream values)
                              (tasks-fn stream values))]
              (fast-list-iter [t out-tasks]
                (let [anchors-to-ids (HashMap.)]
                  (fast-list-iter [^TupleImpl a anchors]
                    (let [root-ids (-> a .getMessageId .getAnchorsToIds .keySet)]
                      (when (pos? (count root-ids))
                        (let [edge-id (MessageId/generateId rand)]
                          (.updateAckVal a edge-id)
                          (fast-list-iter [root-id root-ids]
                            (put-xor! anchors-to-ids root-id edge-id))))))
                  (transfer-fn t
                               (TupleImpl. worker-context
                                           values
                                           task-id
                                           stream
                                           (MessageId/makeId anchors-to-ids)))))
              (or out-tasks [])))
```

In bolt-emit, the call to transfer-fn is what actually sends the tuple out. Where is transfer-fn defined? Look at the let bindings of mk-threads, which contain this line:

```clojure
:transfer-fn (mk-executor-transfer-fn batch-transfer->worker)
```

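Before looking at each function, let's state the goal of this reading. Storm uses the Disruptor for communication between threads, and zeromq or netty for communication between processes. We therefore need to trace from transfer-fn to the point where the zeromq or netty API is called.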

Next, the implementation of mk-executor-transfer-fn:

```clojure
(defn mk-executor-transfer-fn [batch-transfer->worker]
  (fn this
    ([task tuple block? ^List overflow-buffer]
     (if (and overflow-buffer (not (.isEmpty overflow-buffer)))
       (.add overflow-buffer [task tuple])
       (try-cause
         (disruptor/publish batch-transfer->worker [task tuple] block?)
         (catch InsufficientCapacityException e
           (if overflow-buffer
             (.add overflow-buffer [task tuple])
             (throw e))))))
    ([task tuple overflow-buffer]
     (this task tuple (nil? overflow-buffer) overflow-buffer))
    ([task tuple]
     (this task tuple nil))))
```

disruptor/publish sends the message out of the current thread. Who receives it is covered below.
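The three arities of mk-executor-transfer-fn reduce to one rule:

- If an overflow buffer is passed in and already holds messages, append to it so that ordering is preserved.
- Otherwise publish to the queue. If the queue is full, fall back to the overflow buffer when there is one, or rethrow when there is not.
- Publishing blocks only when no overflow buffer is given.

The Python sketch below mirrors that rule under some assumptions. A bounded `queue.Queue` stands in for the Disruptor ring buffer, `queue.Full` stands in for `InsufficientCapacityException`, and `make_executor_transfer_fn` is a hypothetical name.

```python
import queue
from typing import Any, Callable, List, Optional, Tuple

Message = Tuple[int, Any]  # (target task id, tuple payload)


def make_executor_transfer_fn(
    transfer_queue: "queue.Queue[Message]",
) -> Callable[..., None]:
    """Hypothetical mirror of mk-executor-transfer-fn's publish/overflow rule."""

    def transfer(task: int, tup: Any, overflow: Optional[List[Message]] = None) -> None:
        # Like the 3-arg arity: block only when no overflow buffer is supplied.
        block = overflow is None
        if overflow:  # non-empty buffer: queue behind the messages already waiting
            overflow.append((task, tup))
            return
        try:
            transfer_queue.put((task, tup), block=block)
        except queue.Full:  # stand-in for InsufficientCapacityException
            if overflow is not None:
                overflow.append((task, tup))
            else:
                raise

    return transfer
```

Routing every later message into a non-empty overflow buffer means no message overtakes one still waiting there. That is the ordering guarantee of the first branch in the Clojure code.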

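A worker process has a receiver-thread dedicated to receiving messages from other processes. Its counterpart is a transfer-thread, which sends this process's messages to other processes. Messages published via disruptor/publish should therefore be picked up by transfer-thread.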
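The hand-off between executor threads and transfer-thread is a producer/consumer pattern. The following Python sketch models it with the standard `threading` and `queue` modules instead of the LMAX Disruptor. `run_transfer_thread`, the `STOP` sentinel and the `sent` dict are hypothetical stand-ins for transfer-thread, its shutdown and the outbound connections.

```python
import queue
import threading
from typing import Any, Dict, List

STOP = object()  # hypothetical shutdown sentinel, not part of Storm


def run_transfer_thread(
    transfer_queue: "queue.Queue[Any]", sent: Dict[int, List[Any]]
) -> threading.Thread:
    """Consume (task, tuple) pairs that executor threads publish."""

    def loop() -> None:
        while True:
            item = transfer_queue.get()  # blocks until a message arrives
            if item is STOP:
                break
            task, tup = item
            # Storm would hand the tuple to an IConnection here; we just record it.
            sent.setdefault(task, []).append(tup)

    thread = threading.Thread(target=loop, name="transfer-thread")
    thread.start()
    return thread


# Executor side: publish into the shared queue, playing the role of disruptor/publish.
q: "queue.Queue[Any]" = queue.Queue()
out: Dict[int, List[Any]] = {}
consumer = run_transfer_thread(q, out)
for i in range(3):
    q.put((7, f"tuple-{i}"))
q.put(STOP)
consumer.join()
print(out)  # {7: ['tuple-0', 'tuple-1', 'tuple-2']}
```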

transfer-thread is defined by the following line:

```clojure
transfer-thread (disruptor/consume-loop* (:transfer-queue worker) transfer-tuples)
```

Messages arriving from other threads in the same process are handled by transfer-tuples, which is created by mk-transfer-tuples-handler. So let's see whether mk-transfer-tuples-handler connects to zeromq or netty:

```clojure
(defn mk-transfer-tuples-handler [worker]
  (let [^DisruptorQueue transfer-queue (:transfer-queue worker)
        drainer (ArrayList.)
        node+port->socket (:cached-node+port->socket worker)
        task->node+port (:cached-task->node+port worker)
        endpoint-socket-lock (:endpoint-socket-lock worker)]
    (disruptor/clojure-handler
      (fn [packets _ batch-end?]
        (.addAll drainer packets)
        (when batch-end?
          (read-locked endpoint-socket-lock
            (let [node+port->socket @node+port->socket
                  task->node+port @task->node+port]
              ;; consider doing some automatic batching here (would need to not be serialized at this point to remo
              ;; try using multipart messages ... first sort the tuples by the target node (without changing the lo
              (fast-list-iter [[task ser-tuple] drainer]
                ;; TODO: consider write a batch of tuples here to every target worker
                ;; group by node+port, do multipart send
                (let [node-port (get task->node+port task)]
                  (when node-port
                    (.send ^IConnection (get node+port->socket node-port) task ser-tuple))))))
          (.clear drainer))))))
```

This code finally contains a line that may be connected to zeromq: the `.send` call on an `IConnection`.
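Stripped of its locking details, the handler does three things:

- It accumulates every packet into drainer and acts only when the Disruptor reports the end of a batch (batch-end?).
- For each buffered [task ser-tuple], it looks up the task's node+port and calls send on the IConnection cached for that endpoint. Tasks with no known endpoint are skipped.
- It then clears drainer.

A Python sketch of that logic follows. It assumes a plain `threading.Lock` in place of the read lock on endpoint-socket-lock. `make_transfer_tuples_handler` and `RecordingConnection` are hypothetical names.

```python
import threading
from typing import Callable, Dict, List, Tuple

Endpoint = Tuple[str, int]  # (node id, port)
Packet = Tuple[int, bytes]  # (task id, serialized tuple)


class RecordingConnection:
    """Hypothetical IConnection stand-in that records what it was asked to send."""

    def __init__(self) -> None:
        self.sent: List[Packet] = []

    def send(self, task: int, payload: bytes) -> None:
        self.sent.append((task, payload))


def make_transfer_tuples_handler(
    task_to_endpoint: Dict[int, Endpoint],
    endpoint_to_conn: Dict[Endpoint, RecordingConnection],
    lock: threading.Lock,
) -> Callable[[List[Packet], int, bool], None]:
    drainer: List[Packet] = []

    def handler(packets: List[Packet], _sequence: int, batch_end: bool) -> None:
        drainer.extend(packets)  # buffer until the Disruptor ends the batch
        if batch_end:
            with lock:  # Storm holds a read lock on endpoint-socket-lock here
                for task, ser_tuple in drainer:
                    endpoint = task_to_endpoint.get(task)
                    if endpoint is not None:  # tasks without an endpoint are skipped
                        endpoint_to_conn[endpoint].send(task, ser_tuple)
            drainer.clear()

    return handler
```

Because tuples are buffered until batch-end?, the lock is taken once per batch rather than once per tuple.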

Why claim that this IConnection line has anything to do with zeromq? That takes some explaining, and it starts with the configuration file.

storm.yaml contains this configuration entry:

```yaml
storm.messaging.transport: "backtype.storm.messaging.zmq"
```
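The value of storm.messaging.transport names the messaging implementation to load. The rest of the worker then talks to it only through an interface whose connect method returns an IConnection. This pluggable design is what lets zeromq and netty be swapped by configuration.

The Python sketch below illustrates the idea under loud assumptions. A registry dict replaces the reflective class loading Storm does on the JVM. `IContext` and `IConnection` here are hypothetical Python mirrors of the interfaces, and `InMemoryContext` is a made-up transport used only to exercise the code.

```python
from abc import ABC, abstractmethod
from typing import Dict, List, Tuple, Type


class IConnection(ABC):
    @abstractmethod
    def send(self, task: int, payload: bytes) -> None: ...


class IContext(ABC):
    @abstractmethod
    def connect(self, storm_id: str, host: str, port: int) -> IConnection: ...


class InMemoryConnection(IConnection):
    def __init__(self, target: Tuple[str, int]) -> None:
        self.target = target
        self.sent: List[Tuple[int, bytes]] = []

    def send(self, task: int, payload: bytes) -> None:
        self.sent.append((task, payload))


class InMemoryContext(IContext):
    """Made-up transport: 'connecting' just creates a recording connection."""

    def connect(self, storm_id: str, host: str, port: int) -> IConnection:
        return InMemoryConnection((host, port))


# Hypothetical registry: transport name from the config -> context class.
TRANSPORTS: Dict[str, Type[IContext]] = {"example.inmemory": InMemoryContext}


def make_context(conf: Dict[str, str]) -> IContext:
    name = conf["storm.messaging.transport"]
    try:
        return TRANSPORTS[name]()
    except KeyError:
        raise ValueError(f"unknown transport: {name}") from None
```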

This entry corresponds to `:mq-context` in worker, so following mq-context through worker leads step by step to the IConnection implementation. The connections are created in mk-refresh-connections:

```clojure
refresh-connections (mk-refresh-connections worker)
```

The part of mk-refresh-connections that involves mq-context is:

```clojure
(swap! (:cached-node+port->socket worker)
       #(HashMap. (merge (into {} %1) %2))
       (into {}
             (dofor [endpoint-str new-connections
                     :let [[node port] (string->endpoint endpoint-str)]]
               [endpoint-str
                (.connect ^IContext (:mq-context worker)
                          storm-id
                          ((:node->host assignment) node)
                          port)])))
```
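The swap! in mk-refresh-connections opens connections only for the endpoints in new-connections. It merges them into the existing node+port -> connection map, so cached connections are reused rather than reopened.

The Python sketch below makes three assumptions:

- Endpoint strings have the form "node:port", which is what string->endpoint is assumed to parse.
- new-connections is the set of needed endpoints not yet in the cache.
- `refresh_connections`, `string_to_endpoint` and the `connect` callback are hypothetical names.

```python
from typing import Callable, Dict, Iterable, Tuple


def string_to_endpoint(s: str) -> Tuple[str, int]:
    """Parse an assumed "node:port" endpoint string."""
    node, port = s.split(":", 1)
    return node, int(port)


def refresh_connections(
    cache: Dict[str, object],
    needed: Iterable[str],
    node_to_host: Dict[str, str],
    connect: Callable[[str, int], object],
) -> Dict[str, object]:
    """Connect only to endpoints missing from the cache, then merge."""
    new_endpoints = set(needed) - set(cache)
    fresh = {}
    for endpoint in new_endpoints:
        node, port = string_to_endpoint(endpoint)
        fresh[endpoint] = connect(node_to_host[node], port)
    # Like (merge old new): build a new map rather than mutating the old one.
    return {**cache, **fresh}
```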

Note the `.connect` call in mk-refresh-connections: the IConnection is created by calling connect on mq-context. Opening zmq.clj confirms this guess:

```clojure
(^IConnection connect [this ^String storm-id ^String host ^int port]
  (require 'backtype.storm.messaging.zmq)
  (-> context
      (mq/socket mq/push)
      (mq/set-hwm hwm)
      (mq/set-linger linger-ms)
      (mq/connect (get-connect-zmq-url local? host port))
      mk-connection))
```
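connect does four things:

- Opens a ZeroMQ PUSH socket.
- Caps the number of outgoing messages queued in memory with the high-water mark (hwm).
- Sets how long unsent messages linger after the socket is closed (linger-ms).
- Connects the socket to a URL built by get-connect-zmq-url.

That helper is not shown here. The sketch below is an assumption about its behavior, not a quote from zmq.clj: an `ipc://` endpoint when the topology runs in local mode (local?) and `tcp://host:port` otherwise.

```python
def get_connect_zmq_url(local: bool, host: str, port: int) -> str:
    """Assumed URL scheme: IPC in local mode, TCP to host:port otherwise."""
    if local:
        return f"ipc://{port}.ipc"  # assumption: local mode ignores the host
    return f"tcp://{host}:{port}"
```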

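That answers the question of when the IConnection is created, and the whole path is now traced. A message goes from a bolt or spout thread to transfer-thread, and zeromq then sends the tuple to the next hop.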

Tuple dispatch strategy: grouping

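A tuple produced by one bolt can be received by several bolts, so which bolt should it go to? That is a question of dispatch strategy. Twitter Storm actually has dispatch strategies at two levels. One is at the task level, which came up when we discussed topology submission. The other is at the tuple level, which is the subject here.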
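To make tuple-level dispatch concrete, here is a Python sketch of three common groupings. Each is written as a function from a tuple's values to a list of target task ids:

- shuffle spreads tuples across tasks;
- fields sends tuples with equal grouping-field values to the same task;
- all broadcasts to every task.

The function names, the crc32-based hash and the round-robin over a shuffled list are illustrative assumptions, not Storm's exact implementation.

```python
import itertools
import random
import zlib
from typing import Any, Callable, List, Sequence

Grouper = Callable[[Sequence[Any]], List[int]]


def shuffle_grouper(tasks: Sequence[int], seed: int = 0) -> Grouper:
    """Cycle through a shuffled copy of the target tasks."""
    order = list(tasks)
    random.Random(seed).shuffle(order)
    cycle = itertools.cycle(order)
    return lambda values: [next(cycle)]


def fields_grouper(tasks: Sequence[int], field_indexes: Sequence[int]) -> Grouper:
    """Equal values in the grouping fields always map to the same task."""
    ordered = sorted(tasks)

    def choose(values: Sequence[Any]) -> List[int]:
        key = repr([values[i] for i in field_indexes]).encode()
        return [ordered[zlib.crc32(key) % len(ordered)]]

    return choose


def all_grouper(tasks: Sequence[int]) -> Grouper:
    """Broadcast to every target task."""
    return lambda values: list(tasks)
```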

Let's return to bolt-emit, this time focusing on what happens around the variable t.

```clojure
(let [out-tasks (if task
                  (tasks-fn task stream values)
                  (tasks-fn stream values))]
  (fast-list-iter [t out-tasks]
    (let [anchors-to-ids (HashMap.)]
      (fast-list-iter [^TupleImpl a anchors]
        (let [root-ids (-> a .getMessageId .getAnchorsToIds .keySet)]
          (when (pos? (count root-ids))
            (let [edge-id (MessageId/generateId rand)]
              (.updateAckVal a edge-id)
              (fast-list-iter [root-id root-ids]
                (put-xor! anchors-to-ids root-id edge-id))))))
      (transfer-fn t
                   (TupleImpl. worker-context
                               values
                               task-id
                               stream
                               (MessageId/makeId anchors-to-ids)))))
```

Here t comes from out-tasks, and out-tasks is the return value of tasks-fn, which is taken from task-data:

```clojure
tasks-fn (:tasks-fn task-data)
```

Bringing up tasks-fn pulls in task.clj, a file we have not touched so far: task-data is created by task/mk-task. Skipping the intermediate steps, the call chain is:

- mk-task

- mk-task-data

- mk-tasks-fn

tasks-fn is where the grouping is used. Its arity for direct emits (emitDirect) is:

```clojure
(fn ([^Integer out-task-id ^String stream ^List values]
      (when debug?
        (log-message "Emitting direct: " out-task-id "; " component-id " " stream " " values))
      (let [target-component (.getComponentId worker-context out-task-id)
            component->grouping (get stream->component->grouper stream)
            grouping (get component->grouping target-component)
            out-task-id (if grouping out-task-id)]
        (when (and (not-nil? grouping) (not= :direct grouping))
          (throw (IllegalArgumentException. "Cannot emitDirect to a task expecting a regular grouping")))
        (apply-hooks user-context .emit (EmitInfo. values stream task-id [out-task-id]))
        (when (emit-sampler)
          (builtin-metrics/emitted-tuple! (:builtin-metrics task-data) executor-stats stream)
          (stats/emitted-tuple! executor-stats stream)
          (if out-task-id
            (stats/transferred-tuples! executor-stats stream 1)
            (builtin-metrics/transferred-tuple! (:builtin-metrics task-data) executor-stats stream 1)))
        (if out-task-id [out-task-id])))
    ;; ... the two-argument arity, used by (tasks-fn stream values), is omitted here
```
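Leaving out hooks and metrics, the direct-emit arity is a validation rule:

1. Look up the grouping the target component declared for this stream.
2. If there is none, the tuple goes nowhere (nil).
3. If it is anything other than `:direct`, emitDirect is an error.
4. Otherwise, the only out task is the one the caller named.

A Python sketch of just that rule follows. `emit_direct_targets` and the nested-dict layout are hypothetical, and `ValueError` stands in for `IllegalArgumentException`.

```python
from typing import Dict, List, Optional


def emit_direct_targets(
    stream_to_component_to_grouping: Dict[str, Dict[str, str]],
    task_to_component: Dict[int, str],
    stream: str,
    out_task_id: int,
) -> Optional[List[int]]:
    target_component = task_to_component[out_task_id]
    grouping = stream_to_component_to_grouping.get(stream, {}).get(target_component)
    if grouping is None:
        return None  # target does not subscribe to this stream: send nothing
    if grouping != "direct":
        raise ValueError("Cannot emitDirect to a task expecting a regular grouping")
    return [out_task_id]
```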

So how does an executor learn the grouping strategies of a topology? For that we start from the other end, executor-data.

mk-executor-data contains the following line:

```clojure
:stream->component->grouper (outbound-components worker-context component-id)
```

outbound-components is defined as follows:

```clojure
(defn outbound-components
  "Returns map of stream id to component id to grouper"
  [^WorkerTopologyContext worker-context component-id]
  (->> (.getTargets worker-context component-id)
       clojurify-structure
       (map (fn [[stream-id component->grouping]]
              [stream-id
               (outbound-groupings worker-context
                                   component-id
                                   stream-id
                                   (.getComponentOutputFields worker-context component-id stream-id)
                                   component->grouping)]))
       (into {})
       (HashMap.)))
```
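outbound-components turns the result of getTargets, a map of stream id to {component id: grouping}, into a map of stream id to {component id: grouper}. Each grouper picks task ids of its component.

The regular emit arity of tasks-fn is not shown in this article. Presumably it walks the groupers registered for the stream and collects their picks into out-tasks.

The Python sketch below shows both steps under those assumptions. `build_outbound`, `regular_emit_targets` and `simple_grouper` are hypothetical names. The real outbound-groupings also receives the worker context and the stream's output fields. `simple_grouper` supports only "all" (every task) and "global" (the lowest task id).

```python
from typing import Any, Callable, Dict, List, Sequence

Grouper = Callable[[Sequence[Any]], List[int]]


def build_outbound(
    targets: Dict[str, Dict[str, str]],
    component_tasks: Dict[str, List[int]],
    make_grouper: Callable[[str, List[int]], Grouper],
) -> Dict[str, Dict[str, Grouper]]:
    """stream id -> component id -> grouper, like outbound-components."""
    return {
        stream: {
            comp: make_grouper(grouping, component_tasks[comp])
            for comp, grouping in comp_to_grouping.items()
        }
        for stream, comp_to_grouping in targets.items()
    }


def regular_emit_targets(
    outbound: Dict[str, Dict[str, Grouper]], stream: str, values: Sequence[Any]
) -> List[int]:
    """Collect every subscribing component's chosen tasks into out-tasks."""
    out_tasks: List[int] = []
    for grouper in outbound.get(stream, {}).values():
        out_tasks.extend(grouper(values))
    return out_tasks


def simple_grouper(grouping: str, tasks: List[int]) -> Grouper:
    """Hypothetical factory covering only 'all' and 'global'."""
    if grouping == "all":
        return lambda values: list(tasks)
    if grouping == "global":
        return lambda values: [min(tasks)]
    raise ValueError(f"grouping not covered by this sketch: {grouping}")
```

Building the groupers once per executor means each emit only has to call a closure per subscribing component.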