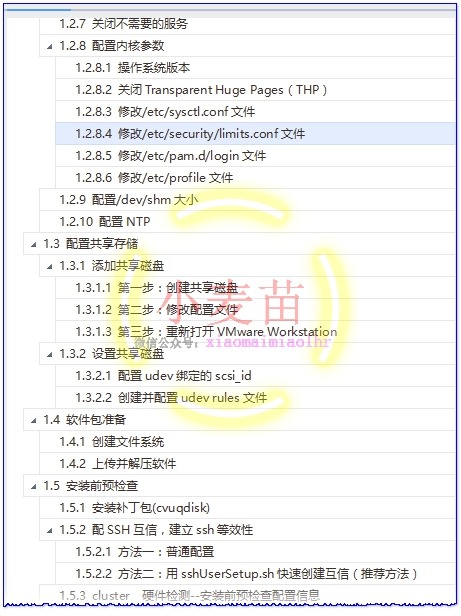

Installing Oracle 12cR1 RAC on VMware Workstation (Part 1): OS Environment Configuration


1.1 Overall Planning

1.1.1 Required Software

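Oracle RAC does not support heterogeneous platforms. A cluster can contain machines of different speeds and sizes, but all nodes must run the same operating system, and machines with different chip architectures cannot be mixed.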

| No. | Type | Content |
|---|---|---|
| 1 | Database | p17694377_121020_Linux-x86-64_1of8.zip, p17694377_121020_Linux-x86-64_2of8.zip |
| 2 | Clusterware (Grid Infrastructure) | p17694377_121020_Linux-x86-64_3of8.zip, p17694377_121020_Linux-x86-64_4of8.zip |
| 3 | Operating system | RHEL 6.5, kernel 2.6.32-431.el6.x86_64; VM hardware compatibility: Workstation 9.0 |
| 4 | Virtualization software | VMware Workstation 12 Pro 12.5.2 build-4638234 |
| 5 | Xmanager Enterprise 4 | Xmanager Enterprise 4, used to display graphical interfaces |
| 6 | rlwrap-0.36 | rlwrap-0.36, keeps a command history for sqlplus, rman and similar tools |
| 7 | SecureCRTPortable.exe | Version 7.0.0 (build 326), includes SecureCRT and SecureFX, used for SSH connections |

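Note: the author (xiaomaimiao) has uploaded all of this software to Tencent Weiyun (see http://blog.itpub.net/26736162/viewspace-1624453/) for download. The fully installed virtual machines, with rlwrap already integrated, have also been uploaded to cloud storage.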

1.1.2 IP Address Planning

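Starting with Oracle 11g Release 2, a two-node cluster uses 7 IP addresses and 2 NICs per node. The public, virtual (VIP) and SCAN addresses are on one subnet, and the private addresses are on another. Host names must not contain underscores (RAC_01, for example, is not allowed). Run ifconfig -a on both nodes to confirm that the network device names are identical. Before installation, it is normal for the 4 public and private addresses to answer ping and for the other 3 (the two VIPs and the SCAN address) not to answer.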
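You can check these addressing rules with a small bash function before you edit any configuration files. This is a minimal sketch. It assumes every subnet is a /24 (netmask 255.255.255.0, as in this plan), so comparing the first three octets is enough. `net24` and `check_ip_plan` are hypothetical helper names, not Oracle tools.

```bash
# Sketch: sanity-check a two-node RAC IP plan (assumes /24 subnets).

# Print the /24 network prefix of an IPv4 address: 192.168.59.160 -> 192.168.59
net24() {
  echo "${1%.*}"
}

# usage: check_ip_plan "<public, VIP and SCAN IPs>" "<private IPs>"
# Returns 0 if the plan follows the rules; reasons for failure go to stderr.
check_ip_plan() {
  local -a pub priv
  pub=($1)        # unquoted on purpose: split the list on spaces
  priv=($2)
  local pub_net priv_net ip rc=0
  pub_net=$(net24 "${pub[0]}")
  priv_net=$(net24 "${priv[0]}")

  # The private interconnect must be on a different subnet.
  if [ "$pub_net" = "$priv_net" ]; then
    echo "private subnet must differ from public subnet" >&2
    rc=1
  fi
  # Public, VIP and SCAN addresses must all share the public subnet.
  for ip in "${pub[@]}"; do
    if [ "$(net24 "$ip")" != "$pub_net" ]; then
      echo "$ip is not in $pub_net.0/24" >&2
      rc=1
    fi
  done
  # All private addresses must share the private subnet.
  for ip in "${priv[@]}"; do
    if [ "$(net24 "$ip")" != "$priv_net" ]; then
      echo "$ip is not in $priv_net.0/24" >&2
      rc=1
    fi
  done
  # Two nodes: 2 public + 2 VIP + 1 SCAN, plus 2 private = 7 addresses.
  if [ "${#pub[@]}" -ne 5 ] || [ "${#priv[@]}" -ne 2 ]; then
    echo "expected 5 public-side and 2 private addresses" >&2
    rc=1
  fi
  return "$rc"
}
```

For the plan in the table below, `check_ip_plan "192.168.59.160 192.168.59.161 192.168.59.162 192.168.59.163 192.168.59.164" "192.168.2.100 192.168.2.101"` returns 0. Other netmasks would need real bitwise arithmetic instead of the string prefix.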

| Node | Name | Address type | IP address | Registered in |
|---|---|---|---|---|
| raclhr-12cR1-N1 | raclhr-12cR1-N1 | Public | 192.168.59.160 | /etc/hosts |
| raclhr-12cR1-N1 | raclhr-12cR1-N1-vip | Virtual | 192.168.59.162 | /etc/hosts |
| raclhr-12cR1-N1 | raclhr-12cR1-N1-priv | Private | 192.168.2.100 | /etc/hosts |
| raclhr-12cR1-N2 | raclhr-12cR1-N2 | Public | 192.168.59.161 | /etc/hosts |
| raclhr-12cR1-N2 | raclhr-12cR1-N2-vip | Virtual | 192.168.59.163 | /etc/hosts |
| raclhr-12cR1-N2 | raclhr-12cR1-N2-priv | Private | 192.168.2.101 | /etc/hosts |
| | raclhr-12cR1-scan | SCAN | 192.168.59.164 | /etc/hosts |

1.1.3 Local Disk Partition Plan

Except for /boot, every partition is an LVM logical volume, which makes the file systems easy to extend later.

| No. | Mount point | Size | Device / logical volume | Purpose |
|---|---|---|---|---|
| 1 | /boot | 200MB | /dev/sda1 | Boot partition |
| 2 | / | 10G | /dev/mapper/vg_rootlhr-Vol00 | Root partition |
| 3 | swap | 2G | /dev/mapper/vg_rootlhr-Vol02 | Swap |
| 4 | /tmp | 3G | /dev/mapper/vg_rootlhr-Vol01 | Temporary space |
| 5 | /home | 3G | /dev/mapper/vg_rootlhr-Vol03 | Home directories of all users |
| 6 | /u01 | 20G | /dev/mapper/vg_orasoft-lv_orasoft_u01 | Installation directory for oracle and grid |

```
[root@raclhr-12cR1-N1 ~]# fdisk -l | grep dev
Disk /dev/sda: 21.5 GB, 21474836480 bytes
/dev/sda1 * 1 26 204800 83 Linux
/dev/sda2 26 1332 10485760 8e Linux LVM
/dev/sda3 1332 2611 10279936 8e Linux LVM
Disk /dev/sdb: 107.4 GB, 107374182400 bytes
/dev/sdb1 1 1306 10485760 8e Linux LVM
/dev/sdb2 1306 2611 10485760 8e Linux LVM
/dev/sdb3 2611 3917 10485760 8e Linux LVM
/dev/sdb4 3917 13055 73399296 5 Extended
/dev/sdb5 3917 5222 10485760 8e Linux LVM
/dev/sdb6 5223 6528 10485760 8e Linux LVM
/dev/sdb7 6528 7834 10485760 8e Linux LVM
/dev/sdb8 7834 9139 10485760 8e Linux LVM
/dev/sdb9 9139 10445 10485760 8e Linux LVM
/dev/sdb10 10445 11750 10485760 8e Linux LVM
/dev/sdb11 11750 13055 10477568 8e Linux LVM
```
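Because /, /tmp and /home are logical volumes, growing one later takes only two commands. This is a minimal sketch. It assumes the volume group still has free extents (check the VFree column of vgs) and the file system is ext4. grow_lv is a hypothetical helper. When DRY_RUN is set to a non-empty value, it only prints the commands instead of running them.

```bash
# Sketch: grow an ext4 file system that sits on an LVM logical volume (run as root).
# usage: grow_lv <lv path> <size to add>, e.g. grow_lv /dev/vg_rootlhr/Vol01 1G
grow_lv() {
  local lv=$1 size=$2
  local run=
  if [ -n "${DRY_RUN:-}" ]; then
    run=echo                 # dry run: print the commands only
  fi
  # 1. Add space to the logical volume from free extents in its volume group.
  $run lvextend -L "+${size}" "$lv" || return 1
  # 2. Grow the ext4 file system online so it fills the enlarged volume.
  $run resize2fs "$lv"
}
```

For example, `DRY_RUN=1 grow_lv /dev/vg_rootlhr/Vol01 1G` previews how /tmp would be grown. LVM can also combine both steps with `lvextend -r` (`--resizefs`).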

1.1.4 Shared Storage and ASM Disk Group Plan

| No. | Disk | ASM disk name | Disk group | Size | Purpose |
|---|---|---|---|---|---|
| 1 | sdc1 | asm-diskc | OCR | 6G | OCR + voting disk |
| 2 | sdd1 | asm_diskd | DATA | 10G | Data |
| 3 | sde1 | asm_diske | FRA | 10G | Fast recovery area |

Note: in 12c R1 the OCR disk group needs at least 5501 MB of disk space.
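To confirm that a candidate OCR disk meets the 5501 MB minimum, compare its size in bytes with 5501 × 1024 × 1024. `blockdev --getsize64` reports the size in bytes. This is a sketch that assumes the OCR disk group consists of this one disk, as in the plan above. `ocr_size_ok` is a hypothetical helper.

```bash
# Sketch: check that a disk is large enough for the 12cR1 OCR disk group.
OCR_MIN_MB=5501

# usage: ocr_size_ok <size in bytes>
ocr_size_ok() {
  local bytes=$1
  local mb=$(( bytes / 1024 / 1024 ))   # whole MB, rounded down
  echo "${mb} MB available, ${OCR_MIN_MB} MB required"
  [ "$mb" -ge "$OCR_MIN_MB" ]
}
```

On the node, use `ocr_size_ok "$(blockdev --getsize64 /dev/sdc)"`. The 6G disk planned here is 6442450944 bytes, which is 6144 MB, so it passes.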

1.2 Operating System Configuration

1.2.1 Install the Host or Virtual Machine

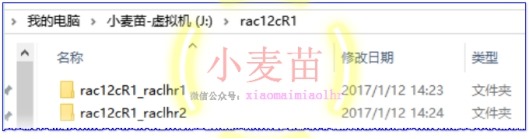

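The installation steps are omitted here. Install one virtual machine, then copy it and rename the copy, as shown in the screenshot.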

Alternatively, you can download the virtual machine environment that the author has already installed.

1.2.2 Change the Host Name

Change the host names of the 2 nodes to raclhr-12cR1-N1 and raclhr-12cR1-N2 (node 1 shown):

```bash
vi /etc/sysconfig/network     # set: HOSTNAME=raclhr-12cR1-N1 (persists across reboots)
hostname raclhr-12cR1-N1      # apply the name to the running system now
```
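You can also make this change non-interactively, which is convenient when you repeat it on node 2. This is a minimal sketch. set_hostname_cfg is a hypothetical helper that rewrites (or adds) the HOSTNAME= line with GNU sed. It also rejects names that contain an underscore, following the rule in 1.1.2. The file path is a parameter only so the function can be tried on a copy.

```bash
# Sketch: set HOSTNAME= in the RHEL 6 network file.
# usage: set_hostname_cfg <new-name> [file]   (file defaults to /etc/sysconfig/network)
set_hostname_cfg() {
  local name=$1 file=${2:-/etc/sysconfig/network}
  # Host names containing "_" (e.g. RAC_01) are not allowed for RAC.
  case $name in
    *_*) echo "invalid host name (contains '_'): $name" >&2; return 1 ;;
  esac
  if grep -q '^HOSTNAME=' "$file"; then
    # Replace the existing entry in place.
    sed -i "s/^HOSTNAME=.*/HOSTNAME=$name/" "$file"
  else
    # No entry yet: append one.
    echo "HOSTNAME=$name" >> "$file"
  fi
}
```

On node 2, for example: `set_hostname_cfg raclhr-12cR1-N2 && hostname raclhr-12cR1-N2`.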

1.2.3 Network Configuration

1.2.3.1 Add Virtual NICs

Add 2 NICs to each virtual machine: VMnet8 for the public network and VMnet2 for the private network.

1.2.3.2 Configure IP Addresses

Disable NetworkManager and use the classic network service instead:

```bash
chkconfig NetworkManager off
chkconfig network on
service NetworkManager stop
service network start
```

Perform the following steps on both nodes. On node 2, use node 2's IP addresses.

Step 1: configure the public and private IP addresses.

Public network: vi /etc/sysconfig/network-scripts/ifcfg-eth0

```
DEVICE=eth0
IPADDR=192.168.59.160
NETMASK=255.255.255.0
NETWORK=192.168.59.0
BROADCAST=192.168.59.255
GATEWAY=192.168.59.2
ONBOOT=yes
USERCTL=no
BOOTPROTO=static
TYPE=Ethernet
IPV6INIT=no
```

Private network: vi /etc/sysconfig/network-scripts/ifcfg-eth1

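```
DEVICE=eth1
IPADDR=192.168.2.100
NETMASK=255.255.255.0
NETWORK=192.168.2.0
BROADCAST=192.168.2.255
# GATEWAY=192.168.2.1
# The interconnect needs no gateway. On RHEL 6 the last GATEWAY read from the
# ifcfg files sets the default route, which would override eth0's gateway.
ONBOOT=yes
USERCTL=no
BOOTPROTO=static
TYPE=Ethernet
IPV6INIT=no
```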

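Step 2: empty /etc/udev/rules.d/70-persistent-net.rules. The VM was cloned, so this file still maps the old MAC addresses to eth0 and eth1. If you leave it in place, the new NICs come up under other names (such as eth2 and eth3).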

Step 3: reboot the host.

```
[root@raclhr-12cR1-N1 ~]# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UNKNOWN qlen 1000
    link/ether 00:0c:29:d9:43:a7 brd ff:ff:ff:ff:ff:ff
    inet 192.168.59.160/24 brd 192.168.59.255 scope global eth0
    inet6 fe80::20c:29ff:fed9:43a7/64 scope link
       valid_lft forever preferred_lft forever
3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UNKNOWN qlen 1000
    link/ether 00:0c:29:d9:43:b1 brd ff:ff:ff:ff:ff:ff
    inet 192.168.2.100/24 brd 192.168.2.255 scope global eth1
    inet6 fe80::20c:29ff:fed9:43b1/64 scope link
       valid_lft forever preferred_lft forever
4: virbr0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN
    link/ether 52:54:00:68:da:bb brd ff:ff:ff:ff:ff:ff
    inet 192.168.122.1/24 brd 192.168.122.255 scope global virbr0
5: virbr0-nic: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN qlen 500
    link/ether 52:54:00:68:da:bb brd ff:ff:ff:ff:ff:ff
```

1.2.3.3 Disable the Firewall

Run the following on both nodes:

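```bash
service iptables stop
service ip6tables stop
chkconfig iptables off
chkconfig ip6tables off
```

Related commands for reference:

```bash
chkconfig iptables --list        # show the iptables setting for each runlevel
chkconfig iptables off           # permanent: do not start at boot
service iptables stop            # temporary: stop it now
/etc/init.d/iptables status      # prints the rule tables if the firewall is running
/etc/rc.d/init.d/iptables stop   # stop the firewall
LANG=en_US setup                 # menu-driven configuration UI
```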
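To confirm that the firewall will stay off after a reboot, check the output of `chkconfig --list iptables` for any `:on` field. That output has one `N:on` or `N:off` field per runlevel. This is a small sketch. services_all_off is a hypothetical helper that reads chkconfig --list output on standard input.

```bash
# Sketch: succeed only if no runlevel is "on" in `chkconfig --list` output.
# Input lines look like: iptables  0:off 1:off 2:on 3:on 4:on 5:on 6:off
services_all_off() {
  local line bad=0
  while read -r line; do
    case $line in
      *:on*)
        # Report the service name (first field) that is still enabled.
        echo "still enabled: ${line%%[[:space:]]*}" >&2
        bad=1
        ;;
    esac
  done
  return "$bad"
}
```

Usage on each node: `{ chkconfig --list iptables; chkconfig --list ip6tables; } | services_all_off && echo "firewall disabled at boot"`.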

1.2.3.4 Disable SELinux

Edit /etc/selinux/config and change SELINUX=enforcing to SELINUX=disabled. The change takes effect after a reboot.

```
[root@raclhr-12cR1-N1 ~]# more /etc/selinux/config

# This file controls the state of SELinux on the system.
# SELINUX= can take one of these three values:
#     enforcing - SELinux security policy is enforced.
#     permissive - SELinux prints warnings instead of enforcing.
#     disabled - No SELinux policy is loaded.
SELINUX=disabled
# SELINUXTYPE= can take one of these two values:
#     targeted - Targeted processes are protected,
#     mls - Multi Level Security protection.
SELINUXTYPE=targeted
[root@raclhr-12cR1-N1 ~]#
```

To switch SELinux to permissive mode immediately, without a reboot: setenforce 0

Check the SELinux status:

1. /usr/sbin/sestatus -v    ## SELinux is on if "SELinux status" shows enabled:

SELinux status: enabled

2. getenforce    ## can also be used for the check

```
[root@raclhr-12cR1-N1 ~] /usr/sbin/sestatus -v
SELinux status:                 disabled
[root@raclhr-12cR1-N1 ~] getenforce
Disabled
[root@raclhr-12cR1-N1 ~]
```
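You can also script the edit to /etc/selinux/config. This is a minimal sketch. selinux_set_config is a hypothetical helper that rewrites only the SELINUX= line with GNU sed and then prints the value now stored, so you can check the result. The file path is a parameter only so the function can be tried on a copy.

```bash
# Sketch: set the boot-time SELinux mode in /etc/selinux/config.
# usage: selinux_set_config <enforcing|permissive|disabled> [file]
selinux_set_config() {
  local mode=$1 file=${2:-/etc/selinux/config}
  case $mode in
    enforcing|permissive|disabled) ;;
    *) echo "unknown SELinux mode: $mode" >&2; return 1 ;;
  esac
  # "^SELINUX=" does not match SELINUXTYPE= or the commented help lines.
  sed -i "s/^SELINUX=.*/SELINUX=$mode/" "$file"
  # Print the value now stored in the file.
  sed -n 's/^SELINUX=//p' "$file"
}
```

Usage: `selinux_set_config disabled && setenforce 0`. The setenforce call only switches to permissive mode until the next reboot.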

1.2.3.5 Edit the /etc/hosts File

Configure the file identically on both nodes:

```
[root@raclhr-12cR1-N2 ~]# more /etc/hosts
# Do not remove the following line, or various programs
# that require network functionality will fail.
127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

#Public IP
192.168.59.160 raclhr-12cR1-N1
192.168.59.161 raclhr-12cR1-N2

#Private IP
192.168.2.100 raclhr-12cR1-N1-priv
192.168.2.101 raclhr-12cR1-N2-priv

#Virtual IP
192.168.59.162 raclhr-12cR1-N1-vip
192.168.59.163 raclhr-12cR1-N2-vip

#Scan IP
192.168.59.164 raclhr-12cR1-scan
[root@raclhr-12cR1-N2 ~]#
```

Ping test from node 1. The public and private names answer. The VIP and SCAN names do not, which is expected before installation:

```
[root@raclhr-12cR1-N1 ~]# ping raclhr-12cR1-N1
PING raclhr-12cR1-N1 (192.168.59.160) 56(84) bytes of data.
64 bytes from raclhr-12cR1-N1 (192.168.59.160): icmp_seq=1 ttl=64 time=0.018 ms
64 bytes from raclhr-12cR1-N1 (192.168.59.160): icmp_seq=2 ttl=64 time=0.052 ms
^C
--- raclhr-12cR1-N1 ping statistics ---
2 packets transmitted, 2 received, 0% packet loss, time 1573ms
rtt min/avg/max/mdev = 0.018/0.035/0.052/0.017 ms
[root@raclhr-12cR1-N1 ~]# ping raclhr-12cR1-N2
PING raclhr-12cR1-N2 (192.168.59.161) 56(84) bytes of data.
64 bytes from raclhr-12cR1-N2 (192.168.59.161): icmp_seq=1 ttl=64 time=1.07 ms
64 bytes from raclhr-12cR1-N2 (192.168.59.161): icmp_seq=2 ttl=64 time=0.674 ms
^C
--- raclhr-12cR1-N2 ping statistics ---
2 packets transmitted, 2 received, 0% packet loss, time 1543ms
rtt min/avg/max/mdev = 0.674/0.876/1.079/0.204 ms
[root@raclhr-12cR1-N1 ~]# ping raclhr-12cR1-N1-priv
PING raclhr-12cR1-N1-priv (192.168.2.100) 56(84) bytes of data.
64 bytes from raclhr-12cR1-N1-priv (192.168.2.100): icmp_seq=1 ttl=64 time=0.015 ms
64 bytes from raclhr-12cR1-N1-priv (192.168.2.100): icmp_seq=2 ttl=64 time=0.056 ms
^C
--- raclhr-12cR1-N1-priv ping statistics ---
2 packets transmitted, 2 received, 0% packet loss, time 1297ms
rtt min/avg/max/mdev = 0.015/0.035/0.056/0.021 ms
[root@raclhr-12cR1-N1 ~]# ping raclhr-12cR1-N2-priv
PING raclhr-12cR1-N2-priv (192.168.2.101) 56(84) bytes of data.
64 bytes from raclhr-12cR1-N2-priv (192.168.2.101): icmp_seq=1 ttl=64 time=1.10 ms
64 bytes from raclhr-12cR1-N2-priv (192.168.2.101): icmp_seq=2 ttl=64 time=0.364 ms
^C
--- raclhr-12cR1-N2-priv ping statistics ---
2 packets transmitted, 2 received, 0% packet loss, time 1421ms
rtt min/avg/max/mdev = 0.364/0.733/1.102/0.369 ms
[root@raclhr-12cR1-N1 ~]# ping raclhr-12cR1-N1-vip
PING raclhr-12cR1-N1-vip (192.168.59.162) 56(84) bytes of data.
From raclhr-12cR1-N1 (192.168.59.160) icmp_seq=2 Destination Host Unreachable
From raclhr-12cR1-N1 (192.168.59.160) icmp_seq=3 Destination Host Unreachable
From raclhr-12cR1-N1 (192.168.59.160) icmp_seq=4 Destination Host Unreachable
^C
--- raclhr-12cR1-N1-vip ping statistics ---
4 packets transmitted, 0 received, +3 errors, 100% packet loss, time 3901ms
pipe 3
[root@raclhr-12cR1-N1 ~]# ping raclhr-12cR1-N2-vip
PING raclhr-12cR1-N2-vip (192.168.59.163) 56(84) bytes of data.
From raclhr-12cR1-N1 (192.168.59.160) icmp_seq=1 Destination Host Unreachable
From raclhr-12cR1-N1 (192.168.59.160) icmp_seq=2 Destination Host Unreachable
From raclhr-12cR1-N1 (192.168.59.160) icmp_seq=3 Destination Host Unreachable
^C
--- raclhr-12cR1-N2-vip ping statistics ---
5 packets transmitted, 0 received, +3 errors, 100% packet loss, time 4026ms
pipe 3
[root@raclhr-12cR1-N1 ~]# ping raclhr-12cR1-scan
PING raclhr-12cR1-scan (192.168.59.164) 56(84) bytes of data.
From raclhr-12cR1-N1 (192.168.59.160) icmp_seq=2 Destination Host Unreachable
From raclhr-12cR1-N1 (192.168.59.160) icmp_seq=3 Destination Host Unreachable
From raclhr-12cR1-N1 (192.168.59.160) icmp_seq=4 Destination Host Unreachable
^C
--- raclhr-12cR1-scan ping statistics ---
5 packets transmitted, 0 received, +3 errors, 100% packet loss, time 4501ms
pipe 3
[root@raclhr-12cR1-N1 ~]#
```
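/etc/hosts must be identical on both nodes, so it helps to append the RAC entries with the same script on each node. This is a sketch with stated assumptions. add_rac_hosts is a hypothetical helper, and the entries are the ones from the table in 1.1.2. A marker comment (`# RAC entries`, an invented convention) prevents duplicate lines if you run the script twice.

```bash
# Sketch: append the RAC name/address entries to a hosts file, once.
# usage: add_rac_hosts [file]   (defaults to /etc/hosts)
add_rac_hosts() {
  local file=${1:-/etc/hosts}
  local marker="# RAC entries"
  # Skip if a previous run already added the block.
  if grep -qxF "$marker" "$file"; then
    return 0
  fi
  cat >> "$file" <<EOF
$marker
#Public IP
192.168.59.160 raclhr-12cR1-N1
192.168.59.161 raclhr-12cR1-N2
#Private IP
192.168.2.100 raclhr-12cR1-N1-priv
192.168.2.101 raclhr-12cR1-N2-priv
#Virtual IP
192.168.59.162 raclhr-12cR1-N1-vip
192.168.59.163 raclhr-12cR1-N2-vip
#Scan IP
192.168.59.164 raclhr-12cR1-scan
EOF
}
```

After running `add_rac_hosts` as root on both nodes, repeat the ping test above.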

1.2.3.6 Disable Zeroconf

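Add the following line to /etc/sysconfig/network (vi /etc/sysconfig/network). It stops the network scripts from adding the zeroconf route (169.254.0.0/16), which can conflict with the link-local HAIP addresses that Grid Infrastructure uses on the private interconnect: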

```
NOZEROCONF=yes
```

1.2.4 Hardware Requirements

1.2.4.1 Memory

Check with: # grep MemTotal /proc/meminfo

```
[root@raclhr-12cR1-N1 ~]# grep MemTotal /proc/meminfo
MemTotal:        2046592 kB
[root@raclhr-12cR1-N1 ~]#
```

1.2.4.2 Swap Space

| RAM | Swap space |
|---|---|
| 1 GB to 2 GB | 1.5 times the RAM size |
| 2 GB to 16 GB | Equal to the RAM size |
| More than 16 GB | 16 GB |

Check with: # grep SwapTotal /proc/meminfo

```
[root@raclhr-12cR1-N1 ~]# grep SwapTotal /proc/meminfo
SwapTotal:       2097144 kB
[root@raclhr-12cR1-N1 ~]#
```

1.2.4.3 /tmp Space

A separate /tmp file system is recommended. Here it is a logical volume, so it is easy to extend.

```
[root@raclhr-12cR1-N1 ~]# df -h
Filesystem Size Used Avail Use% Mounted on
/dev/mapper/vg_rootlhr-Vol00 9.9G 4.9G 4.6G 52% /
tmpfs 1000M 72K 1000M 1% /dev/shm
/dev/sda1 194M 35M 150M 19% /boot
/dev/mapper/vg_rootlhr-Vol01 3.0G 70M 2.8G 3% /tmp
/dev/mapper/vg_rootlhr-Vol03 3.0G 69M 2.8G 3% /home
.host:/ 331G 229G 102G 70% /mnt/hgfs
```

1.2.4.4 Disk Space Required by the Oracle Installation

Local disk: /u01 is the installation location for the following software:

● Oracle Grid Infrastructure software: 6.8GB

● Oracle Enterprise Edition software: 5.3GB

```bash
# Build a volume group from three 10 GB partitions and a 20 GB volume for /u01
vgcreate vg_orasoft /dev/sdb1 /dev/sdb2 /dev/sdb3
lvcreate -n lv_orasoft_u01 -L 20G vg_orasoft
mkfs.ext4 /dev/vg_orasoft/lv_orasoft_u01
mkdir /u01
mount /dev/vg_orasoft/lv_orasoft_u01 /u01

# Back up /etc/fstab, then mount /u01 automatically at boot
cp /etc/fstab /etc/fstab.`date +%Y%m%d`
echo "/dev/vg_orasoft/lv_orasoft_u01 /u01 ext4 defaults 0 0" >> /etc/fstab
cat /etc/fstab
```

```
[root@raclhr-12cR1-N2 ~]# vgcreate vg_orasoft /dev/sdb1 /dev/sdb2 /dev/sdb3
  Volume group "vg_orasoft" successfully created
[root@raclhr-12cR1-N2 ~]# lvcreate -n lv_orasoft_u01 -L 20G vg_orasoft
  Logical volume "lv_orasoft_u01" created
[root@raclhr-12cR1-N2 ~]# mkfs.ext4 /dev/vg_orasoft/lv_orasoft_u01
mke2fs 1.41.12 (17-May-2010)
Filesystem label=
OS type: Linux
Block size=4096 (log=2)
Fragment size=4096 (log=2)
Stride=0 blocks, Stripe width=0 blocks
1310720 inodes, 5242880 blocks
262144 blocks (5.00%) reserved for the super user
First data block=0
Maximum filesystem blocks=4294967296
160 block groups
32768 blocks per group, 32768 fragments per group
8192 inodes per group
Superblock backups stored on blocks:
        32768, 98304, 163840, 229376, 294912, 819200, 884736, 1605632, 2654208,
        4096000

Writing inode tables: done
Creating journal (32768 blocks): done
Writing superblocks and filesystem accounting information: done

This filesystem will be automatically checked every 39 mounts or
180 days, whichever comes first.  Use tune2fs -c or -i to override.
[root@raclhr-12cR1-N2 ~]# mkdir /u01
mount /dev/vg_orasoft/lv_orasoft_u01 /u01
[root@raclhr-12cR1-N2 ~]# mount /dev/vg_orasoft/lv_orasoft_u01 /u01
[root@raclhr-12cR1-N2 ~]# cp /etc/fstab /etc/fstab.`date +%Y%m%d`
echo "/dev/vg_orasoft/lv_orasoft_u01 /u01 ext4 defaults 0 0" >> /etc/fstab
[root@raclhr-12cR1-N2 ~]# echo "/dev/vg_orasoft/lv_orasoft_u01 /u01 ext4 defaults 0 0" >> /etc/fstab
[root@raclhr-12cR1-N2 ~]# cat /etc/fstab

#
# /etc/fstab
# Created by anaconda on Sat Jan 14 18:56:24 2017
#
# Accessible filesystems, by reference, are maintained under '/dev/disk'
# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info
#
/dev/mapper/vg_rootlhr-Vol00 / ext4 defaults 1 1
UUID=fccf51c1-2d2f-4152-baac-99ead8cfbc1a /boot ext4 defaults 1 2
/dev/mapper/vg_rootlhr-Vol01 /tmp ext4 defaults 1 2
/dev/mapper/vg_rootlhr-Vol02 swap swap defaults 0 0
tmpfs /dev/shm tmpfs defaults 0 0
devpts /dev/pts devpts gid=5,mode=620 0 0
sysfs /sys sysfs defaults 0 0
proc /proc proc defaults 0 0
/dev/vg_rootlhr/Vol03 /home ext4 defaults 0 0
/dev/vg_orasoft/lv_orasoft_u01 /u01 ext4 defaults 0 0
[root@raclhr-12cR1-N2 ~]# df -h
Filesystem Size Used Avail Use% Mounted on
/dev/mapper/vg_rootlhr-Vol00 9.9G 4.9G 4.6G 52% /
tmpfs 1000M 72K 1000M 1% /dev/shm
/dev/sda1 194M 35M 150M 19% /boot
/dev/mapper/vg_rootlhr-Vol01 3.0G 70M 2.8G 3% /tmp
/dev/mapper/vg_rootlhr-Vol03 3.0G 69M 2.8G 3% /home
.host:/ 331G 234G 97G 71% /mnt/hgfs
/dev/mapper/vg_orasoft-lv_orasoft_u01 20G 172M 19G 1% /u01
[root@raclhr-12cR1-N2 ~]# vgs
  VG #PV #LV #SN Attr VSize VFree
  vg_orasoft 3 1 0 wz--n- 29.99g 9.99g
  vg_rootlhr 2 4 0 wz--n- 19.80g 1.80g
[root@raclhr-12cR1-N2 ~]# lvs
  LV VG Attr LSize Pool Origin Data% Move Log Cpy%Sync Convert
  lv_orasoft_u01 vg_orasoft -wi-ao---- 20.00g
  Vol00 vg_rootlhr -wi-ao---- 10.00g
  Vol01 vg_rootlhr -wi-ao---- 3.00g
  Vol02 vg_rootlhr -wi-ao---- 2.00g
  Vol03 vg_rootlhr -wi-ao---- 3.00g
[root@raclhr-12cR1-N2 ~]# pvs
  PV VG Fmt Attr PSize PFree
  /dev/sda2 vg_rootlhr lvm2 a-- 10.00g 0
  /dev/sda3 vg_rootlhr lvm2 a-- 9.80g 1.80g
  /dev/sdb1 vg_orasoft lvm2 a-- 10.00g 0
  /dev/sdb10 lvm2 a-- 10.00g 10.00g
  /dev/sdb11 lvm2 a-- 9.99g 9.99g
  /dev/sdb2 vg_orasoft lvm2 a-- 10.00g 0
  /dev/sdb3 vg_orasoft lvm2 a-- 10.00g 9.99g
  /dev/sdb5 lvm2 a-- 10.00g 10.00g
  /dev/sdb6 lvm2 a-- 10.00g 10.00g
  /dev/sdb7 lvm2 a-- 10.00g 10.00g
  /dev/sdb8 lvm2 a-- 10.00g 10.00g
  /dev/sdb9 lvm2 a-- 10.00g 10.00g
[root@raclhr-12cR1-N2 ~]#
```
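Appending to /etc/fstab with `echo ... >>` is not idempotent: if you run the line twice, you get two /u01 entries. Below is a sketch of a safer variant. add_fstab_entry is a hypothetical helper. It uses awk to check whether an existing non-comment entry already uses the same mount point (field 2), and appends the line only if none does.

```bash
# Sketch: add an fstab entry unless its mount point is already present.
# usage: add_fstab_entry "<device> <mountpoint> <type> <options> <dump> <pass>" [file]
add_fstab_entry() {
  local entry=$1 file=${2:-/etc/fstab}
  local mnt
  mnt=$(echo "$entry" | awk '{print $2}')
  # Field 2 of every non-comment line is the mount point.
  if awk -v m="$mnt" '$1 !~ /^#/ && $2 == m {found=1} END {exit !found}' "$file"; then
    echo "$mnt already in $file, not changed" >&2
    return 0
  fi
  cp "$file" "$file.$(date +%Y%m%d)"   # keep a dated backup first
  echo "$entry" >> "$file"
}
```

Usage: `add_fstab_entry "/dev/vg_orasoft/lv_orasoft_u01 /u01 ext4 defaults 0 0" && mount -a`.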

1.2.5 Create Groups and Users

1.2.5.1 Create the oracle and grid Users

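Starting with Oracle 11gR2, a RAC installation consists of the Oracle Grid Infrastructure software and the Oracle Database software. Grid Infrastructure corresponds to the Clusterware of Oracle 10g. Oracle recommends installing the two with different users: usually the grid user installs Grid Infrastructure and the oracle user installs the database software. The two users belong to different sets of groups. For RAC, each user's uid and gid must also be identical on every node.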

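● Create 6 groups: oinstall (Oracle Inventory group), dba (OSDBA group), oper (OSOPER group), and asmadmin, asmdba and asmoper for ASM administration.

● Create 2 users, grid and oracle, both with oinstall as the primary group. grid also belongs to asmadmin, asmdba, asmoper and dba. oracle also belongs to dba and asmdba.

● All of these users and groups must have the same names, IDs, passwords, group memberships and group order on every node. The passwords of grid and oracle must never expire.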

Create the groups:

```bash
groupadd -g 1000 oinstall
groupadd -g 1001 dba
groupadd -g 1002 oper
groupadd -g 1003 asmadmin
groupadd -g 1004 asmdba
groupadd -g 1005 asmoper
```

Create the grid and oracle users:

```bash
useradd -u 1000 -g oinstall -G asmadmin,asmdba,asmoper,dba -d /home/grid -m grid
useradd -u 1001 -g oinstall -G dba,asmdba -d /home/oracle -m oracle
```

If the oracle user already exists, modify it instead:

```bash
usermod -g oinstall -G dba,asmdba -u 1001 oracle
```

Set passwords for the oracle and grid users:

```bash
passwd oracle
passwd grid
```

Set the passwords to never expire:

```bash
chage -M -1 oracle    # -M -1 removes the maximum password age
chage -M -1 grid
chage -l oracle       # verify
chage -l grid
```

Check:

```
[root@raclhr-12cR1-N1 ~]# id grid
uid=1000(grid) gid=1000(oinstall) groups=1000(oinstall),1001(dba),1003(asmadmin),1004(asmdba),1005(asmoper)
[root@raclhr-12cR1-N1 ~]# id oracle
uid=1001(oracle) gid=1000(oinstall) groups=1000(oinstall),1001(dba),1004(asmdba)
[root@raclhr-12cR1-N1 ~]#
```

1.2.5.2 Create the Installation Directories

● The ORACLE_HOME of the Grid software must not be a subdirectory of ORACLE_BASE.

Create the directories as root on both nodes:

```bash
mkdir -p /u01/app/oracle
mkdir -p /u01/app/grid
mkdir -p /u01/app/12.1.0/grid
mkdir -p /u01/app/oracle/product/12.1.0/dbhome_1
chown -R grid:oinstall /u01/app/grid
chown -R grid:oinstall /u01/app/12.1.0
chown -R oracle:oinstall /u01/app/oracle
chmod -R 775 /u01

mkdir -p /u01/app/oraInventory
chown -R grid:oinstall /u01/app/oraInventory
chmod -R 775 /u01/app/oraInventory
```
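The rule that the Grid home must not sit under ORACLE_BASE is easy to check before you run the installer. This is a minimal sketch. It assumes absolute paths with no symlinks or `..` components. is_under is a hypothetical helper that does a plain string-prefix test on directory boundaries.

```bash
# Sketch: succeed if directory $1 equals, or lies inside, directory $2.
# Pure string check: paths must be absolute and already normalized.
is_under() {
  local child=${1%/} parent=${2%/}     # drop one trailing slash
  [ "$child" = "$parent" ] || [ "${child#"$parent"/}" != "$child" ]
}
```

For the layout above, `is_under /u01/app/12.1.0/grid /u01/app/grid` and `is_under /u01/app/12.1.0/grid /u01/app/oracle` both return non-zero, which is what the rule requires.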

1.2.5.3 Configure Environment Variables for the grid and oracle Users

Edit the .bash_profile files of the grid and oracle users. Either log in as each user, or edit them directly as root:

```bash
vi /home/oracle/.bash_profile
vi /home/grid/.bash_profile
```

oracle user:

```bash
umask 022
export ORACLE_SID=lhrrac1
export ORACLE_BASE=/u01/app/oracle
export ORACLE_HOME=$ORACLE_BASE/product/12.1.0/dbhome_1
export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib
export NLS_DATE_FORMAT="YYYY-MM-DD HH24:MI:SS"
export TMP=/tmp
export TMPDIR=$TMP
export PATH=$ORACLE_HOME/bin:$ORACLE_HOME/OPatch:$PATH

export EDITOR=vi
export TNS_ADMIN=$ORACLE_HOME/network/admin
export ORACLE_PATH=.:$ORACLE_BASE/dba_scripts/sql:$ORACLE_HOME/rdbms/admin
export SQLPATH=$ORACLE_HOME/sqlplus/admin

#export NLS_LANG="SIMPLIFIED CHINESE_CHINA.ZHS16GBK"
# Alternative character set: AL32UTF8. Check the database value with:
#   SELECT userenv('LANGUAGE') db_NLS_LANG FROM DUAL;
export NLS_LANG="AMERICAN_CHINA.ZHS16GBK"

alias sqlplus='rlwrap sqlplus'
alias rman='rlwrap rman'
alias asmcmd='rlwrap asmcmd'
```

grid user:

```bash
umask 022
export ORACLE_SID=+ASM1
export ORACLE_BASE=/u01/app/grid
export ORACLE_HOME=/u01/app/12.1.0/grid
export LD_LIBRARY_PATH=$ORACLE_HOME/lib
export NLS_DATE_FORMAT="YYYY-MM-DD HH24:MI:SS"
export PATH=$ORACLE_HOME/bin:$PATH
alias sqlplus='rlwrap sqlplus'
alias asmcmd='rlwrap asmcmd'
```

Note: on the other node, change the instance names accordingly:

oracle: export ORACLE_SID=lhrrac2

grid: export ORACLE_SID=+ASM2
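Instead of editing ORACLE_SID by hand on node 2, the profile could derive it from the host name. This is a hypothetical convenience, not part of the original setup. It assumes host names end in `-N<number>`, as in this plan. sid_for_host is an invented helper, and it would have to be defined in the profile before it is used.

```bash
# Sketch: derive the instance number from a host name ending in -N<digits>.
# usage: sid_for_host <hostname> <sid prefix>
sid_for_host() {
  local host=$1 prefix=$2 n
  n=${host##*-N}                 # text after the last "-N"
  case $n in
    ''|*[!0-9]*) echo "cannot derive node number from $host" >&2; return 1 ;;
  esac
  echo "${prefix}${n}"
}
```

For example, in oracle's profile: `export ORACLE_SID=$(sid_for_host "$(hostname -s)" lhrrac)`. In grid's profile: `export ORACLE_SID=$(sid_for_host "$(hostname -s)" +ASM)`.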

1.2.5.4 Configure Environment Variables for root

vi /etc/profile

```bash
export ORACLE_HOME=/u01/app/12.1.0/grid
export PATH=$PATH:$ORACLE_HOME/bin
```

1.2.6 Check Required Packages

Oracle Linux 6 and Red Hat Enterprise Linux 6 require the following packages. For other versions or operating systems, see the official documentation (Database Installation Guide).

The following packages (or later versions) must be installed:

```
binutils-2.20.51.0.2-5.11.el6 (x86_64)
compat-libcap1-1.10-1 (x86_64)
compat-libstdc++-33-3.2.3-69.el6 (x86_64)
compat-libstdc++-33-3.2.3-69.el6 (i686)
gcc-4.4.4-13.el6 (x86_64)
gcc-c++-4.4.4-13.el6 (x86_64)
glibc-2.12-1.7.el6 (i686)
glibc-2.12-1.7.el6 (x86_64)
glibc-devel-2.12-1.7.el6 (x86_64)
glibc-devel-2.12-1.7.el6 (i686)
ksh
libgcc-4.4.4-13.el6 (i686)
libgcc-4.4.4-13.el6 (x86_64)
libstdc++-4.4.4-13.el6 (x86_64)
libstdc++-4.4.4-13.el6 (i686)
libstdc++-devel-4.4.4-13.el6 (x86_64)
libstdc++-devel-4.4.4-13.el6 (i686)
libaio-0.3.107-10.el6 (x86_64)
libaio-0.3.107-10.el6 (i686)
libaio-devel-0.3.107-10.el6 (x86_64)
libaio-devel-0.3.107-10.el6 (i686)
libXext-1.1 (x86_64)
libXext-1.1 (i686)
libXtst-1.0.99.2 (x86_64)
libXtst-1.0.99.2 (i686)
libX11-1.3 (x86_64)
libX11-1.3 (i686)
libXau-1.0.5 (x86_64)
libXau-1.0.5 (i686)
libxcb-1.5 (x86_64)
libxcb-1.5 (i686)
libXi-1.3 (x86_64)
libXi-1.3 (i686)
make-3.81-19.el6
sysstat-9.0.4-11.el6 (x86_64)
```

Check command:

```bash
# On RHEL 6 the compat C++ library package is named compat-libstdc++-33
rpm -q --qf '%{NAME}-%{VERSION}-%{RELEASE} (%{ARCH})\n' binutils \
compat-libcap1 \
compat-libstdc++-33 \
gcc \
gcc-c++ \
glibc \
glibc-devel \
ksh \
libgcc \
libstdc++ \
libstdc++-devel \
libaio \
libaio-devel \
libXext \
libXtst \
libX11 \
libXau \
libxcb \
libXi \
make \
sysstat
```

Result:

```
[root@raclhr-12cR1-N1 ~]# rpm -q --qf '%{NAME}-%{VERSION}-%{RELEASE} (%{ARCH})\n' binutils \
> compat-libcap1 \
> compat-libstdc++ \
> gcc \
> gcc-c++ \
> glibc \
> glibc-devel \
> ksh \
> libgcc \
> libstdc++ \
> libstdc++-devel \
> libaio \
> libaio-devel \
> libXext \
> libXtst \
> libX11 \
> libXau \
> libxcb \
> libXi \
> make \
> sysstat
binutils-2.20.51.0.2-5.36.el6 (x86_64)
compat-libcap1-1.10-1 (x86_64)
package compat-libstdc++ is not installed
gcc-4.4.7-4.el6 (x86_64)
gcc-c++-4.4.7-4.el6 (x86_64)
glibc-2.12-1.132.el6 (x86_64)
glibc-2.12-1.132.el6 (i686)
glibc-devel-2.12-1.132.el6 (x86_64)
package ksh is not installed
libgcc-4.4.7-4.el6 (x86_64)
libgcc-4.4.7-4.el6 (i686)
libstdc++-4.4.7-4.el6 (x86_64)
libstdc++-devel-4.4.7-4.el6 (x86_64)
libaio-0.3.107-10.el6 (x86_64)
package libaio-devel is not installed
libXext-1.3.1-2.el6 (x86_64)
libXtst-1.2.1-2.el6 (x86_64)
libX11-1.5.0-4.el6 (x86_64)
libXau-1.0.6-4.el6 (x86_64)
libxcb-1.8.1-1.el6 (x86_64)
libXi-1.6.1-3.el6 (x86_64)
make-3.81-20.el6 (x86_64)
sysstat-9.0.4-22.el6 (x86_64)
```
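The lines that read `package ... is not installed` are the ones you need to act on. This is a sketch. missing_pkgs is a hypothetical filter that pulls those package names out of the `rpm -q` output so you can pass them to yum.

```bash
# Sketch: read `rpm -q` output on stdin and print the names reported as missing.
missing_pkgs() {
  # rpm prints e.g. "package ksh is not installed"; field 2 is the name.
  awk '/^package .* is not installed$/ {print $2}'
}
```

Append `| missing_pkgs` to the check command above to list what is missing. You can then pass that list to `yum install`.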

1.2.6.1 Configure a Local yum Repository

```
[root@raclhr-12cR1-N1 ~]# df -h
Filesystem Size Used Avail Use% Mounted on
/dev/mapper/vg_rootlhr-Vol00 9.9G 4.9G 4.5G 52% /
tmpfs 1000M 72K 1000M 1% /dev/shm
/dev/sda1 194M 35M 150M 19% /boot
/dev/mapper/vg_rootlhr-Vol01 3.0G 70M 2.8G 3% /tmp
/dev/mapper/vg_rootlhr-Vol03 3.0G 69M 2.8G 3% /home
.host:/ 331G 234G 97G 71% /mnt/hgfs
/dev/mapper/vg_orasoft-lv_orasoft_u01 20G 172M 19G 1% /u01
```

```
[root@raclhr-12cR1-N1 ~]# df -h
Filesystem Size Used Avail Use% Mounted on
/dev/mapper/vg_rootlhr-Vol00 9.9G 4.9G 4.5G 52% /
tmpfs 1000M 76K 1000M 1% /dev/shm
/dev/sda1 194M 35M 150M 19% /boot
/dev/mapper/vg_rootlhr-Vol01 3.0G 70M 2.8G 3% /tmp
/dev/mapper/vg_rootlhr-Vol03 3.0G 69M 2.8G 3% /home
.host:/ 331G 234G 97G 71% /mnt/hgfs
/dev/mapper/vg_orasoft-lv_orasoft_u01 20G 172M 19G 1% /u01
/dev/sr0 3.6G 3.6G 0 100% /media/RHEL_6.5 x86_64 Disc 1
[root@raclhr-12cR1-N1 ~]# mkdir -p /media/lhr/cdrom
[root@raclhr-12cR1-N1 ~]# mount /dev/sr0 /media/lhr/cdrom/
mount: block device /dev/sr0 is write-protected, mounting read-only
[root@raclhr-12cR1-N1 ~]# df -h
Filesystem Size Used Avail Use% Mounted on
/dev/mapper/vg_rootlhr-Vol00 9.9G 4.9G 4.5G 52% /
tmpfs 1000M 76K 1000M 1% /dev/shm
/dev/sda1 194M 35M 150M 19% /boot
/dev/mapper/vg_rootlhr-Vol01 3.0G 70M 2.8G 3% /tmp
/dev/mapper/vg_rootlhr-Vol03 3.0G 69M 2.8G 3% /home
.host:/ 331G 234G 97G 71% /mnt/hgfs
/dev/mapper/vg_orasoft-lv_orasoft_u01 20G 172M 19G 1% /u01
/dev/sr0 3.6G 3.6G 0 100% /media/RHEL_6.5 x86_64 Disc 1
/dev/sr0 3.6G 3.6G 0 100% /media/lhr/cdrom
[root@raclhr-12cR1-N1 ~]# cd /etc/yum.repos.d/
[root@raclhr-12cR1-N1 yum.repos.d]# cp rhel-media.repo rhel-media.repo.bk
[root@raclhr-12cR1-N1 yum.repos.d]# more rhel-media.repo
[rhel-media]
name=Red Hat Enterprise Linux 6.5
baseurl=file:///media/cdrom
enabled=1
gpgcheck=1
gpgkey=file:///media/cdrom/RPM-GPG-KEY-redhat-release
[root@raclhr-12cR1-N1 yum.repos.d]#
```

Alternatively, copy the entire contents of the disc to a local directory and create a repository from it as follows:

```bash
# Run in the directory that holds the copied packages
rpm -ivh deltarpm-3.5-0.5.20090913git.el6.x86_64.rpm
rpm -ivh python-deltarpm-3.5-0.5.20090913git.el6.x86_64.rpm
rpm -ivh createrepo-0.9.9-18.el6.noarch.rpm
createrepo .
```

The author used the mounted disc directly and did not set this up.

1.2.6.2 Install the Missing Packages

```bash
# Quote the wildcards so the shell passes them to yum unexpanded
yum install 'compat-libstdc++*'
yum install 'libaio-devel*'
yum install 'ksh*'
```

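Finally, rerun the check to make sure all packages are installed. A name-based query can report a package as missing because of naming or version differences (for example, the RHEL 6 package is called compat-libstdc++-33). To be sure, also check with rpm -qa | grep libstdc.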

1.2.7 Disable Unneeded Services

```bash
# Services that do not exist on this system only print an error; ignore it.
chkconfig autofs off
chkconfig acpid off
chkconfig sendmail off
chkconfig cups-config-daemon off
chkconfig cups off
chkconfig xfs off
chkconfig lm_sensors off
chkconfig gpm off
chkconfig openibd off
chkconfig pcmcia off
chkconfig cpuspeed off
chkconfig nfslock off
chkconfig ip6tables off
chkconfig rpcidmapd off
chkconfig apmd off
chkconfig arptables_jf off
chkconfig microcode_ctl off
chkconfig rpcgssd off
chkconfig ntpd off
```

1.2.8 Configure Kernel Parameters

1.2.8.1 Operating System Version

/usr/bin/lsb_release -a

```
[root@raclhr-12cR1-N1 ~]# lsb_release -a
LSB Version:    :base-4.0-amd64:base-4.0-noarch:core-4.0-amd64:core-4.0-noarch:graphics-4.0-amd64:graphics-4.0-noarch:printing-4.0-amd64:printing-4.0-noarch
Distributor ID: RedHatEnterpriseServer
Description:    Red Hat Enterprise Linux Server release 6.5 (Santiago)
Release:        6.5
Codename:       Santiago
[root@raclhr-12cR1-N1 ~]# uname -a
Linux raclhr-12cR1-N1 2.6.32-431.el6.x86_64 #1 SMP Sun Nov 10 22:19:54 EST 2013 x86_64 x86_64 x86_64 GNU/Linux
[root@raclhr-12cR1-N1 ~]#
[root@raclhr-12cR1-N1 ~]# cat /proc/version
Linux version 2.6.32-431.el6.x86_64 (mockbuild@x86-023.build.eng.bos.redhat.com) (gcc version 4.4.7 20120313 (Red Hat 4.4.7-4) (GCC) ) #1 SMP Sun Nov 10 22:19:54 EST 2013
[root@raclhr-12cR1-N1 ~]#
```

1.2.8.2 Disable Transparent Huge Pages (THP)

(1) Check the current THP status:

```bash
cat /sys/kernel/mm/redhat_transparent_hugepage/enabled
```

Output: always madvise [never]. The value in brackets is the active setting, so [never] means THP is disabled.

(2) Disable THP at boot

Edit /etc/rc.local (vi /etc/rc.local) and add the following lines:

```bash
if test -f /sys/kernel/mm/redhat_transparent_hugepage/enabled; then
    echo never > /sys/kernel/mm/redhat_transparent_hugepage/enabled
fi
```
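The active THP setting is the word in square brackets. This is a sketch. bracketed_word and thp_mode are hypothetical helpers. thp_mode reads the RHEL 6 path used above. If that file is missing, it falls back to /sys/kernel/mm/transparent_hugepage/enabled, which is an assumption for kernels that do not use the redhat_ prefix.

```bash
# Sketch: print the word inside [...], e.g. "always madvise [never]" -> never
bracketed_word() {
  local s=$1
  case $s in
    *\[*\]*) ;;                  # a bracketed word is present
    *) return 1 ;;
  esac
  s=${s#*\[}                     # drop everything up to and including "["
  echo "${s%%\]*}"               # drop "]" and everything after it
}

# Print the active THP mode from whichever control file exists.
thp_mode() {
  local f line
  for f in /sys/kernel/mm/redhat_transparent_hugepage/enabled \
           /sys/kernel/mm/transparent_hugepage/enabled; do
    if [ -r "$f" ]; then
      read -r line < "$f"
      bracketed_word "$line"
      return
    fi
  done
  echo "THP control file not found" >&2
  return 1
}
```

After a reboot, `[ "$(thp_mode)" = never ] && echo "THP disabled"` confirms that the rc.local change took effect.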

1.2.8.3 Modify /etc/sysctl.conf

Add the following:

vi /etc/sysctl.conf

```
# for oracle
fs.aio-max-nr = 1048576
fs.file-max = 6815744
kernel.shmall = 2097152
kernel.shmmax = 1054472192
kernel.shmmni = 4096
kernel.sem = 250 32000 100 128
net.ipv4.ip_local_port_range = 9000 65500
net.core.rmem_default = 262144
net.core.rmem_max = 4194304
net.core.wmem_default = 262144
net.core.wmem_max = 1048586
```

Apply the changes immediately:

/sbin/sysctl -p
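kernel.shmall is counted in pages, while kernel.shmmax is in bytes. For the two values to be consistent, shmall × page size must be at least shmmax. This is a sketch. shm_consistent is a hypothetical helper. On the node, the page size comes from `getconf PAGE_SIZE`, and the function defaults to 4096 when you omit it.

```bash
# Sketch: check that kernel.shmall (pages) covers kernel.shmmax (bytes).
# usage: shm_consistent <shmmax bytes> <shmall pages> [page size bytes]
shm_consistent() {
  local shmmax=$1 shmall=$2 page=${3:-4096}
  local total=$(( shmall * page ))      # total shared memory allowed, in bytes
  echo "shmall covers ${total} bytes, shmmax is ${shmmax} bytes"
  [ "$total" -ge "$shmmax" ]
}
```

Usage: `shm_consistent "$(sysctl -n kernel.shmmax)" "$(sysctl -n kernel.shmall)" "$(getconf PAGE_SIZE)"`. With the values above and 4096-byte pages, shmall covers 8589934592 bytes, well above the 1054472192-byte shmmax.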

1.2.8.4 Modify /etc/security/limits.conf

Check nofile (open files):

```bash
ulimit -Sn
ulimit -Hn
```

Check nproc (processes):

```bash
ulimit -Su
ulimit -Hu
```

Check stack:

```bash
ulimit -Ss
ulimit -Hs
```

Set the resource limits for the OS users grid and oracle:

```bash
cp /etc/security/limits.conf /etc/security/limits.conf.`date +%Y%m%d`
echo "grid soft nofile 1024
grid hard nofile 65536
grid soft stack 10240
grid hard stack 32768
grid soft nproc 2047
grid hard nproc 16384
oracle soft nofile 1024
oracle hard nofile 65536
oracle soft stack 10240
oracle hard stack 32768
oracle soft nproc 2047
oracle hard nproc 16384
root soft nproc 2047
" >> /etc/security/limits.conf
```
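After you log in again as grid or oracle, you can compare the current ulimit values with the limits you just configured. This is a sketch. limit_at_least is a hypothetical helper that treats `unlimited` as meeting any minimum. check_limits is also hypothetical, and its thresholds are the soft and hard values written to limits.conf above.

```bash
# Sketch: succeed if a ulimit value meets a minimum ("unlimited" always does).
# usage: limit_at_least <value from ulimit> <minimum>
limit_at_least() {
  [ "$1" = unlimited ] || [ "$1" -ge "$2" ]
}

# Check the current shell's limits against the limits.conf entries above.
check_limits() {
  local rc=0
  limit_at_least "$(ulimit -Sn)" 1024  || { echo "soft nofile too low" >&2; rc=1; }
  limit_at_least "$(ulimit -Hn)" 65536 || { echo "hard nofile too low" >&2; rc=1; }
  limit_at_least "$(ulimit -Su)" 2047  || { echo "soft nproc too low" >&2; rc=1; }
  limit_at_least "$(ulimit -Hu)" 16384 || { echo "hard nproc too low" >&2; rc=1; }
  limit_at_least "$(ulimit -Ss)" 10240 || { echo "soft stack too low" >&2; rc=1; }
  limit_at_least "$(ulimit -Hs)" 32768 || { echo "hard stack too low" >&2; rc=1; }
  return "$rc"
}
```

Run `check_limits && echo "limits OK"` in a fresh login shell of each user. The limits are applied at login through pam_limits, which the next step enables.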

1.2.8.5 Modify /etc/pam.d/login

```bash
echo "session required pam_limits.so" >> /etc/pam.d/login
more /etc/pam.d/login
```

1.2.8.6 Modify /etc/profile

vi /etc/profile

```bash
# Quote $USER/$SHELL so the tests do not break when a variable is empty
if [ "$USER" = "oracle" ] || [ "$USER" = "grid" ]; then
    if [ "$SHELL" = "/bin/ksh" ]; then
        ulimit -p 16384
        ulimit -n 65536
    else
        ulimit -u 16384 -n 65536
    fi
    umask 022
fi
```

1.2.9 Configure the Size of /dev/shm

vi /etc/fstab

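Change the tmpfs line in /etc/fstab to:

```
tmpfs /dev/shm tmpfs defaults,size=2G 0 0
```

Then remount it so the new size takes effect without a reboot:

```bash
mount -o remount /dev/shm
```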

```
[root@raclhr-12cR1-N2 ~]# df -h
Filesystem Size Used Avail Use% Mounted on
/dev/mapper/vg_rootlhr-Vol00 9.9G 4.9G 4.5G 53% /
tmpfs 1000M 72K 1000M 1% /dev/shm
/dev/sda1 194M 35M 150M 19% /boot
/dev/mapper/vg_rootlhr-Vol01 3.0G 573M 2.3G 20% /tmp
/dev/mapper/vg_rootlhr-Vol03 3.0G 69M 2.8G 3% /home
/dev/mapper/vg_orasoft-lv_orasoft_u01 20G 6.8G 12G 37% /u01
.host:/ 331G 272G 59G 83% /mnt/hgfs
/dev/mapper/vg_orasoft-lv_orasoft_soft 20G 172M 19G 1% /soft
[root@raclhr-12cR1-N2 ~]# more /etc/fstab

#
# /etc/fstab
# Created by anaconda on Sat Jan 14 18:56:24 2017
#
# Accessible filesystems, by reference, are maintained under '/dev/disk'
# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info
#
/dev/mapper/vg_rootlhr-Vol00 / ext4 defaults 1 1
UUID=fccf51c1-2d2f-4152-baac-99ead8cfbc1a /boot ext4 defaults 1 2
/dev/mapper/vg_rootlhr-Vol01 /tmp ext4 defaults 1 2
/dev/mapper/vg_rootlhr-Vol02 swap swap defaults 0 0
tmpfs /dev/shm tmpfs defaults,size=2G 0 0
devpts /dev/pts devpts gid=5,mode=620 0 0
sysfs /sys sysfs defaults 0 0
proc /proc proc defaults 0 0
/dev/vg_rootlhr/Vol03 /home ext4 defaults 0 0
/dev/vg_orasoft/lv_orasoft_u01 /u01 ext4 defaults 0 0
[root@raclhr-12cR1-N2 ~]# mount -o remount /dev/shm
[root@raclhr-12cR1-N2 ~]# df -h
Filesystem Size Used Avail Use% Mounted on
/dev/mapper/vg_rootlhr-Vol00 9.9G 4.9G 4.5G 53% /
tmpfs 2.0G 72K 2.0G 1% /dev/shm
/dev/sda1 194M 35M 150M 19% /boot
/dev/mapper/vg_rootlhr-Vol01 3.0G 573M 2.3G 20% /tmp
/dev/mapper/vg_rootlhr-Vol03 3.0G 69M 2.8G 3% /home
/dev/mapper/vg_orasoft-lv_orasoft_u01 20G 6.8G 12G 37% /u01
.host:/ 331G 272G 59G 83% /mnt/hgfs
/dev/mapper/vg_orasoft-lv_orasoft_soft 20G 172M 19G 1% /soft
```

1.2.10 Configure NTP

Network Time Protocol Setting

● You have two options for time synchronization: an operating system configured network time protocol (NTP), or Oracle Cluster Time Synchronization Service.

● Oracle Cluster Time Synchronization Service is designed for organizations whose cluster servers are unable to access NTP services.

● If you use NTP, then the Oracle Cluster Time Synchronization daemon (ctssd) starts up in observer mode. If you do not have NTP daemons, then ctssd starts up in active mode and synchronizes time among cluster members without contacting an external time server.

You can use either the operating system's NTP service or Oracle's own CTSS. If NTP is not in use, Oracle automatically runs its own ctssd process.

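Starting with Oracle 11gR2 RAC, the Cluster Time Synchronization Service (CTSS) can synchronize time across the nodes. If the installer finds NTP inactive, it installs CTSS in active mode, and CTSS synchronizes the time of all nodes. If NTP is configured, CTSS starts in observer mode and Oracle Clusterware performs no active time synchronization in the cluster.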

Run the following as root on both nodes:

```bash
/sbin/service ntpd stop
mv /etc/ntp.conf /etc/ntp.conf.bak
service ntpd status
chkconfig ntpd off
```

1.3 Configure Shared Storage

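This is the most important step and the easiest place to make mistakes. Since this is the author's second RAC installation on virtual machines, some details are not repeated here.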

1.3.1 Add Shared Disks

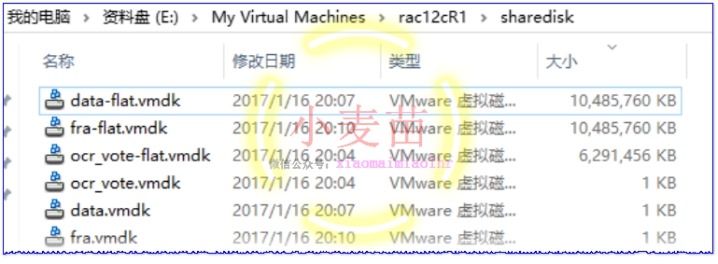

1.3.1.1 Step 1: Create the Shared Disks

You can do this step with cmd commands or in the GUI. This article uses the command line.

In cmd, change to the VMware Workstation installation directory and run the following commands to create the disks:

```
C:
cd C:\Program Files (x86)\VMware\VMware Workstation
rem -c create, -s size, -a adapter type, -t 2 = preallocated disk in a single file
vmware-vdiskmanager.exe -c -s 6g -a lsilogic -t 2 "E:\My Virtual Machines\rac12cR1\sharedisk\ocr_vote.vmdk"
vmware-vdiskmanager.exe -c -s 10g -a lsilogic -t 2 "E:\My Virtual Machines\rac12cR1\sharedisk\data.vmdk"
vmware-vdiskmanager.exe -c -s 10g -a lsilogic -t 2 "E:\My Virtual Machines\rac12cR1\sharedisk\fra.vmdk"
```

```
D:\Users\xiaomaimiao>C:

C:\>cd C:\Program Files (x86)\VMware\VMware Workstation

C:\Program Files (x86)\VMware\VMware Workstation>vmware-vdiskmanager.exe -c -s 6g -a lsilogic -t 2 "E:\My Virtual Machines\rac12cR1\sharedisk\ocr_vote.vmdk"
Creating disk 'E:\My Virtual Machines\rac12cR1\sharedisk\ocr_vote.vmdk'
  Create: 100% done.
Virtual disk creation successful.

C:\Program Files (x86)\VMware\VMware Workstation>vmware-vdiskmanager.exe -c -s 10g -a lsilogic -t 2 "E:\My Virtual Machines\rac12cR1\sharedisk\data.vmdk"
Creating disk 'E:\My Virtual Machines\rac12cR1\sharedisk\data.vmdk'
  Create: 100% done.
Virtual disk creation successful.

C:\Program Files (x86)\VMware\VMware Workstation>vmware-vdiskmanager.exe -c -s 10g -a lsilogic -t 2 "E:\My Virtual Machines\rac12cR1\sharedisk\fra.vmdk"
Creating disk 'E:\My Virtual Machines\rac12cR1\sharedisk\fra.vmdk'
  Create: 100% done.
Virtual disk creation successful.
```

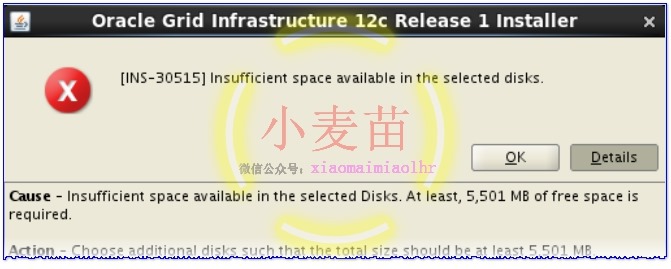

Note: in 12c R1 the OCR disk group needs at least 5501 MB of space. With less space, the installer reports:

```
[INS-30515] Insufficient space available in the selected disks.
Cause - Insufficient space available in the selected Disks. At least, 5,501 MB of free space is required.
Action - Choose additional disks such that the total size should be at least 5,501 MB.
```

1.3.1.2 Step 2: Edit the Configuration Files

Shut down both virtual machines. In Notepad, open each VM's configuration file, <VM name>.vmx. You must modify the files of both nodes.

Add the following lines. In them, scsix:y means device y on SCSI bus x:

```
#shared disks configure
disk.EnableUUID="TRUE"
disk.locking = "FALSE"
scsi1.shared = "TRUE"
diskLib.dataCacheMaxSize = "0"
diskLib.dataCacheMaxReadAheadSize = "0"
diskLib.dataCacheMinReadAheadSize = "0"
diskLib.dataCachePageSize= "4096"
diskLib.maxUnsyncedWrites = "0"

scsi1.present = "TRUE"
scsi1.virtualDev = "lsilogic"
scsi1.sharedBus = "VIRTUAL"
scsi1:0.present = "TRUE"
scsi1:0.mode = "independent-persistent"
scsi1:0.fileName = "..\sharedisk\ocr_vote.vmdk"
scsi1:0.deviceType = "disk"
scsi1:0.redo = ""
scsi1:1.present = "TRUE"
scsi1:1.mode = "independent-persistent"
scsi1:1.fileName = "..\sharedisk\data.vmdk"
scsi1:1.deviceType = "disk"
scsi1:1.redo = ""
scsi1:2.present = "TRUE"
scsi1:2.mode = "independent-persistent"
scsi1:2.fileName = "..\sharedisk\fra.vmdk"
scsi1:2.deviceType = "disk"
scsi1:2.redo = ""
```

If VMware reports that some of these parameters do not exist, set the virtual machine's hardware compatibility to Workstation 9.0.
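Typing the per-disk entries by hand for both nodes is error-prone, so they can also be generated. This hypothetical bash helper prints the scsi1 bus lines and one block of per-disk lines for each disk name, using the same keys and the ..\sharedisk\ relative path as above. It assumes bus scsi1 with the disks numbered 0, 1, 2 in the order given, and it does not print the global disk.* and diskLib.* lines.

```bash
#!/usr/bin/env bash
# gen_shared_disk_vmx NAME...: hypothetical helper that prints the scsi1 bus
# entries plus one block of per-disk entries for each NAME (NAME.vmdk under
# ..\sharedisk\), numbering the devices 0, 1, 2, ... in the order given.
gen_shared_disk_vmx() {
  local n=0 d
  printf '%s\n' 'scsi1.present = "TRUE"' \
                'scsi1.virtualDev = "lsilogic"' \
                'scsi1.sharedBus = "VIRTUAL"'
  for d in "$@"; do
    printf '%s\n' "scsi1:$n.present = \"TRUE\""
    printf '%s\n' "scsi1:$n.mode = \"independent-persistent\""
    # The backslashes are Windows path separators and are printed literally.
    printf '%s\n' "scsi1:$n.fileName = \"..\\sharedisk\\$d.vmdk\""
    printf '%s\n' "scsi1:$n.deviceType = \"disk\""
    printf '%s\n' "scsi1:$n.redo = \"\""
    n=$(( n + 1 ))
  done
}
```

Running `gen_shared_disk_vmx ocr_vote data fra` reproduces the scsi1 block above; printf '%s' is used instead of echo so the backslashes are never treated as escapes.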

1.3.1.3 Step 3: Reopen VMware Workstation

Close VMware Workstation and start it again. If the shared disks now load correctly, the configuration is right. Pay particular attention to the second node: the hard disk and network adapter settings of the two nodes should be identical; if they differ, recheck the configuration.

Then power on both virtual machines.

```
[root@raclhr-12cR1-N1 ~]# fdisk -l | grep /dev/sd
Disk /dev/sda: 21.5 GB, 21474836480 bytes
/dev/sda1 * 1 26 204800 83 Linux
/dev/sda2 26 1332 10485760 8e Linux LVM
/dev/sda3 1332 2611 10279936 8e Linux LVM
Disk /dev/sdb: 107.4 GB, 107374182400 bytes
/dev/sdb1 1 1306 10485760 8e Linux LVM
/dev/sdb2 1306 2611 10485760 8e Linux LVM
/dev/sdb3 2611 3917 10485760 8e Linux LVM
/dev/sdb4 3917 13055 73399296 5 Extended
/dev/sdb5 3917 5222 10485760 8e Linux LVM
/dev/sdb6 5223 6528 10485760 8e Linux LVM
/dev/sdb7 6528 7834 10485760 8e Linux LVM
/dev/sdb8 7834 9139 10485760 8e Linux LVM
/dev/sdb9 9139 10445 10485760 8e Linux LVM
/dev/sdb10 10445 11750 10485760 8e Linux LVM
/dev/sdb11 11750 13055 10477568 8e Linux LVM
Disk /dev/sdc: 6442 MB, 6442450944 bytes
Disk /dev/sdd: 10.7 GB, 10737418240 bytes
Disk /dev/sde: 10.7 GB, 10737418240 bytes
[root@raclhr-12cR1-N1 ~]# fdisk -l | grep "Disk /dev/sd"
Disk /dev/sda: 21.5 GB, 21474836480 bytes
Disk /dev/sdb: 107.4 GB, 107374182400 bytes
Disk /dev/sdc: 6442 MB, 6442450944 bytes
Disk /dev/sdd: 10.7 GB, 10737418240 bytes
Disk /dev/sde: 10.7 GB, 10737418240 bytes
[root@raclhr-12cR1-N1 ~]#
```

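1.3.2 Configure the shared disks

1.3.2.1 Bind the disks with udev using scsi_id

Note the following two points:

● First switch to the root user.

● The UUIDs obtained on the two nodes must be identical; if they differ, something in the earlier configuration is wrong.

1. The location of the scsi_id command differs between operating systems; on RHEL 6.5 it is /sbin/scsi_id:

```
[root@raclhr-12cR1-N1 ~]# cat /etc/issue
Red Hat Enterprise Linux Server release 6.5 (Santiago)
Kernel \r on an \m

[root@raclhr-12cR1-N1 ~]# which scsi_id
/sbin/scsi_id
[root@raclhr-12cR1-N1 ~]#
```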

Note: from RHEL 6 onward, use the --whitelisted --replace-whitespace options; the older -g -u -s form is no longer supported.

2. Edit /etc/scsi_id.config; if the file does not exist, create it with the following line:

```
[root@raclhr-12cR1-N1 ~]# echo "options=--whitelisted --replace-whitespace" > /etc/scsi_id.config
[root@raclhr-12cR1-N1 ~]# more /etc/scsi_id.config
options=--whitelisted --replace-whitespace
[root@raclhr-12cR1-N1 ~]#
```

3. Obtain the UUIDs:

```
scsi_id --whitelisted --replace-whitespace --device=/dev/sdc
scsi_id --whitelisted --replace-whitespace --device=/dev/sdd
scsi_id --whitelisted --replace-whitespace --device=/dev/sde
```

```
[root@raclhr-12cR1-N1 ~]# scsi_id --whitelisted --replace-whitespace --device=/dev/sdc
36000c29fd84cfe0767838541518ef8fe
[root@raclhr-12cR1-N1 ~]# scsi_id --whitelisted --replace-whitespace --device=/dev/sdd
36000c29c0ac0339f4b5282b47c49285b
[root@raclhr-12cR1-N1 ~]# scsi_id --whitelisted --replace-whitespace --device=/dev/sde
36000c29e4652a45192e32863956c1965
```

The values obtained on the two nodes should be identical.
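One way to compare the nodes is to save a "device uuid" list on each node and diff the two files. In this bash sketch the capture loop (shown as a comment) uses the same scsi_id options as above; compare_uuid_lists and the /tmp file names are hypothetical. It matches entries by device name, which assumes the shared disks appear under the same sdX names on both nodes, as they do here.

```bash
#!/usr/bin/env bash
# On each node, capture "device uuid" pairs, for example:
#   for i in c d e; do
#     echo "sd$i $(/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/sd$i)"
#   done > /tmp/uuids_$(hostname -s).txt
# then copy one file to the other node and compare them.

# compare_uuid_lists FILE1 FILE2: hypothetical helper. Prints every device whose
# UUID differs (or is missing) in FILE2 and returns 1 if any mismatch is found.
compare_uuid_lists() {
  local f1=$1 f2=$2 rc=0 dev id other
  while read -r dev id; do
    if [ -z "$dev" ]; then continue; fi
    other=$(awk -v d="$dev" '$1 == d { print $2 }' "$f2")
    if [ "$id" != "$other" ]; then
      echo "MISMATCH $dev: $id vs ${other:-<missing>}"
      rc=1
    fi
  done < "$f1"
  return "$rc"
}
```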

1.3.2.2 Create and configure the udev rules file

Run the following script directly:

```
mv /etc/udev/rules.d/99-oracle-asmdevices.rules /etc/udev/rules.d/99-oracle-asmdevices.rules_bk
for i in c d e ;
do
echo "KERNEL==\"sd*\", BUS==\"scsi\", PROGRAM==\"/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/\$name\",RESULT==\"`scsi_id --whitelisted --replace-whitespace --device=/dev/sd$i`\",NAME=\"asm-disk$i\",OWNER=\"grid\",GROUP=\"asmadmin\",MODE=\"0660\"" >> /etc/udev/rules.d/99-oracle-asmdevices.rules
done
start_udev
```

Alternatively, work step by step and first generate the rules with the following code:

```
for i in c d e ;
do
echo "KERNEL==\"sd*\", BUS==\"scsi\", PROGRAM==\"/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/\$name\",RESULT==\"`scsi_id --whitelisted --replace-whitespace --device=/dev/sd$i`\",NAME=\"asm-disk$i\",OWNER=\"grid\",GROUP=\"asmadmin\",MODE=\"0660\""
done
```

```
[root@raclhr-12cR1-N1 ~]# for i in c d e ;
> do
> echo "KERNEL==\"sd*\", BUS==\"scsi\", PROGRAM==\"/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/\$name\",RESULT==\"`scsi_id --whitelisted --replace-whitespace --device=/dev/sd$i`\",NAME=\"asm-disk$i\",OWNER=\"grid\",GROUP=\"asmadmin\",MODE=\"0660\""
> done
KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/$name",RESULT=="36000c29fd84cfe0767838541518ef8fe",NAME="asm-diskc",OWNER="grid",GROUP="asmadmin",MODE="0660"
KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/$name",RESULT=="36000c29c0ac0339f4b5282b47c49285b",NAME="asm-diskd",OWNER="grid",GROUP="asmadmin",MODE="0660"
KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/$name",RESULT=="36000c29e4652a45192e32863956c1965",NAME="asm-diske",OWNER="grid",GROUP="asmadmin",MODE="0660"
[root@raclhr-12cR1-N1 ~]#
```

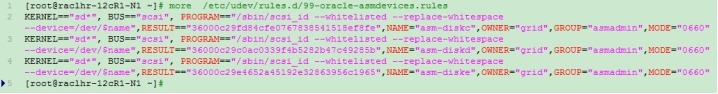

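Edit /etc/udev/rules.d/99-oracle-asmdevices.rules with vi and add the lines generated by the script above.

Note that each rule (each line starting with KERNEL) must stay on a single line; do not wrap it.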
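Because a wrapped rule is easy to miss in vi, the lines can be generated and checked mechanically. In this bash sketch, asm_rule prints one rule in the same format as the loop above (the UUID is passed in rather than read from scsi_id, so the function can be tried anywhere), and check_rules_file flags any non-empty, non-comment line that does not start with KERNEL== or lacks RESULT== or NAME=, which is what a wrapped rule looks like. Both function names are hypothetical.

```bash
#!/usr/bin/env bash
# asm_rule LETTER UUID: print one udev rule for /dev/sdLETTER in the same format
# as the generator loop above. $name stays literal; udev substitutes it.
asm_rule() {
  local letter=$1 uuid=$2
  printf 'KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/$name",RESULT=="%s",NAME="asm-disk%s",OWNER="grid",GROUP="asmadmin",MODE="0660"\n' "$uuid" "$letter"
}

# check_rules_file FILE: report lines that look wrapped or incomplete.
# Returns 1 if any bad line is found.
check_rules_file() {
  local n=0 rc=0 line
  while IFS= read -r line || [ -n "$line" ]; do
    n=$(( n + 1 ))
    case $line in
      ''|'#'*) continue ;;              # skip blank lines and comments
    esac
    case $line in
      KERNEL==*RESULT==*NAME=*) ;;      # a complete single-line rule
      *) echo "line $n looks wrapped or incomplete: $line"; rc=1 ;;
    esac
  done < "$1"
  return "$rc"
}
```

For example, `asm_rule c 36000c29fd84cfe0767838541518ef8fe` prints exactly the asm-diskc line shown above, and `check_rules_file /etc/udev/rules.d/99-oracle-asmdevices.rules` should print nothing before start_udev is run.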

Check the result:

```
[root@raclhr-12cR1-N1 ~]# ll /dev/asm*
ls: cannot access /dev/asm*: No such file or directory
[root@raclhr-12cR1-N1 ~]# start_udev
Starting udev: [ OK ]
[root@raclhr-12cR1-N1 ~]# ll /dev/asm*
brw-rw---- 1 grid asmadmin 8, 32 Jan 16 16:17 /dev/asm-diskc
brw-rw---- 1 grid asmadmin 8, 48 Jan 16 16:17 /dev/asm-diskd
brw-rw---- 1 grid asmadmin 8, 64 Jan 16 16:17 /dev/asm-diske
[root@raclhr-12cR1-N1 ~]#
```

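Reload the rules and restart udev (RHEL 6 uses udevadm control; the old udevcontrol command is not available there):

```
/sbin/udevadm control --reload-rules
/sbin/start_udev
```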

Check:

```
udevadm info --query=all --name=asm-diskc
udevadm info --query=all --name=asm-diskd
udevadm info --query=all --name=asm-diske
```
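Besides udevadm info, it is worth confirming that the renamed devices really carry the owner, group and mode from the rules (grid, asmadmin, 0660), because ASM cannot open them otherwise. A hypothetical bash check using GNU stat:

```bash
#!/usr/bin/env bash
# check_perms PATH OWNER GROUP MODE: hypothetical helper; succeeds only if PATH
# exists with exactly that owner, group and octal mode (GNU stat format).
check_perms() {
  local path=$1 want="$2 $3 $4" got
  got=$(stat -c '%U %G %a' "$path" 2>/dev/null) || { echo "missing: $path"; return 1; }
  if [ "$got" != "$want" ]; then
    echo "$path: got '$got', want '$want'"
    return 1
  fi
}

# Example on a RAC node (as root):
#   for d in /dev/asm-disk{c,d,e}; do check_perms "$d" grid asmadmin 660; done
```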

The complete session:

```
[root@raclhr-12cR1-N1 ~]# fdisk -l | grep "Disk /dev/sd"
Disk /dev/sda: 21.5 GB, 21474836480 bytes
Disk /dev/sdb: 107.4 GB, 107374182400 bytes
Disk /dev/sdc: 6442 MB, 6442450944 bytes
Disk /dev/sdd: 10.7 GB, 10737418240 bytes
Disk /dev/sde: 10.7 GB, 10737418240 bytes
[root@raclhr-12cR1-N1 ~]# mv /etc/udev/rules.d/99-oracle-asmdevices.rules /etc/udev/rules.d/99-oracle-asmdevices.rules_bk
[root@raclhr-12cR1-N1 ~]# for i in c d e ;
> do
> echo "KERNEL==\"sd*\", BUS==\"scsi\", PROGRAM==\"/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/\$name\",RESULT==\"`scsi_id --whitelisted --replace-whitespace --device=/dev/sd$i`\",NAME=\"asm-disk$i\",OWNER=\"grid\",GROUP=\"asmadmin\",MODE=\"0660\"" >> /etc/udev/rules.d/99-oracle-asmdevices.rules
> done
[root@raclhr-12cR1-N1 ~]# more /etc/udev/rules.d/99-oracle-asmdevices.rules
KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/$name",RESULT=="36000c29fd84cfe0767838541518ef8fe",NAME="asm-diskc",OWNER="grid",GROUP="asmadmin",MODE="0660"
KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/$name",RESULT=="36000c29c0ac0339f4b5282b47c49285b",NAME="asm-diskd",OWNER="grid",GROUP="asmadmin",MODE="0660"
KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/$name",RESULT=="36000c29e4652a45192e32863956c1965",NAME="asm-diske",OWNER="grid",GROUP="asmadmin",MODE="0660"
[root@raclhr-12cR1-N1 ~]#
[root@raclhr-12cR1-N1 ~]# fdisk -l | grep "Disk /dev/sd"
Disk /dev/sda: 21.5 GB, 21474836480 bytes
Disk /dev/sdb: 107.4 GB, 107374182400 bytes
Disk /dev/sdc: 6442 MB, 6442450944 bytes
Disk /dev/sdd: 10.7 GB, 10737418240 bytes
Disk /dev/sde: 10.7 GB, 10737418240 bytes
[root@raclhr-12cR1-N1 ~]# start_udev
Starting udev: [ OK ]
[root@raclhr-12cR1-N1 ~]# fdisk -l | grep "Disk /dev/sd"
Disk /dev/sda: 21.5 GB, 21474836480 bytes
Disk /dev/sdb: 107.4 GB, 107374182400 bytes
[root@raclhr-12cR1-N1 ~]#
```

After start_udev, /dev/sdc, /dev/sdd and /dev/sde no longer appear in the fdisk listing because the NAME= rules have renamed the device nodes to /dev/asm-diskc, /dev/asm-diskd and /dev/asm-diske.

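1.4 Prepare the software packages

1.4.1 Create a file system

On node 1, create the file system /soft with 20 GB of space, to be used as the unzip directory for the Oracle and grid software.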

```
vgextend vg_orasoft /dev/sdb5 /dev/sdb6
lvcreate -n lv_orasoft_soft -L 20G vg_orasoft
mkfs.ext4 /dev/vg_orasoft/lv_orasoft_soft
mkdir /soft
mount /dev/vg_orasoft/lv_orasoft_soft /soft
```

```
[root@raclhr-12cR1-N2 ~]# df -h
Filesystem Size Used Avail Use% Mounted on
/dev/mapper/vg_rootlhr-Vol00 9.9G 4.9G 4.5G 52% /
tmpfs 1000M 72K 1000M 1% /dev/shm
/dev/sda1 194M 35M 150M 19% /boot
/dev/mapper/vg_rootlhr-Vol01 3.0G 70M 2.8G 3% /tmp
/dev/mapper/vg_rootlhr-Vol03 3.0G 69M 2.8G 3% /home
/dev/mapper/vg_orasoft-lv_orasoft_u01 20G 172M 19G 1% /u01
.host:/ 331G 234G 97G 71% /mnt/hgfs
/dev/mapper/vg_orasoft-lv_orasoft_soft 20G 172M 19G 1% /soft
[root@raclhr-12cR1-N2 ~]#
```

1.4.2 Upload and unzip the software

Open SecureFX, copy the files into the /soft directory, and wait for the upload to finish:

```
[root@raclhr-12cR1-N1 ~]# ll -h /soft/p*
-rw-r--r-- 1 root root 1.6G Jan 14 03:28 /soft/p17694377_121020_Linux-x86-64_1of8.zip
-rw-r--r-- 1 root root 968M Jan 14 03:19 /soft/p17694377_121020_Linux-x86-64_2of8.zip
-rw-r--r-- 1 root root 1.7G Jan 14 03:47 /soft/p17694377_121020_Linux-x86-64_3of8.zip
-rw-r--r-- 1 root root 617M Jan 14 03:00 /soft/p17694377_121020_Linux-x86-64_4of8.zip
[root@raclhr-12cR1-N1 ~]#
```
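Before unzipping, a small bash check can confirm that all four parts used in this article are present and non-empty. The helper name and the PATCH prefix variable are assumptions based on the file names above; archive integrity can additionally be tested with unzip's test mode (unzip -tq), as in the usage comment.

```bash
#!/usr/bin/env bash
PATCH=p17694377_121020_Linux-x86-64

# missing_parts DIR: hypothetical helper; prints each of parts 1of8..4of8
# that is absent or empty in DIR and returns 1 if any is missing.
missing_parts() {
  local dir=$1 rc=0 i f
  for i in 1 2 3 4; do
    f="$dir/${PATCH}_${i}of8.zip"
    if [ ! -s "$f" ]; then
      echo "missing or empty: $f"
      rc=1
    fi
  done
  return "$rc"
}

# Example:
#   missing_parts /soft && for f in /soft/${PATCH}_*of8.zip; do unzip -tq "$f"; done
```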

Open two terminal windows and run one of the following commands in each to unzip the installation packages:

```
unzip /soft/p17694377_121020_Linux-x86-64_1of8.zip -d /soft/ && unzip /soft/p17694377_121020_Linux-x86-64_2of8.zip -d /soft/
unzip /soft/p17694377_121020_Linux-x86-64_3of8.zip -d /soft/ && unzip /soft/p17694377_121020_Linux-x86-64_4of8.zip -d /soft/
```

Parts 1 and 2 are the database installation packages; parts 3 and 4 are the grid installation packages.
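Instead of two terminal windows, both unzip chains can run as background jobs in a single shell. This hypothetical bash helper starts each command string with bash -c, waits for all of them, and fails if any one failed; the usage comment applies it to the two chains above.

```bash
#!/usr/bin/env bash
# run_in_parallel CMD...: hypothetical helper. Runs each command string in its
# own background bash, waits for all of them, and returns 1 if any failed.
run_in_parallel() {
  local pids=() rc=0 cmd pid
  for cmd in "$@"; do
    bash -c "$cmd" &
    pids+=("$!")
  done
  for pid in "${pids[@]}"; do
    wait "$pid" || rc=1
  done
  return "$rc"
}

# Example (database = parts 1-2, grid = parts 3-4):
#   P=/soft/p17694377_121020_Linux-x86-64
#   run_in_parallel \
#     "unzip -q ${P}_1of8.zip -d /soft/ && unzip -q ${P}_2of8.zip -d /soft/" \
#     "unzip -q ${P}_3of8.zip -d /soft/ && unzip -q ${P}_4of8.zip -d /soft/"
```

Waiting on each PID separately, rather than a bare wait, is what lets the helper report a failure from either chain.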

After unzipping:

```
[root@raclhr-12cR1-N1 ~]# cd /soft
[root@raclhr-12cR1-N1 soft]# df -h
Filesystem Size Used Avail Use% Mounted on
/dev/mapper/vg_rootlhr-Vol00 9.9G 4.9G 4.5G 52% /
tmpfs 1000M 72K 1000M 1% /dev/shm
/dev/sda1 194M 35M 150M 19% /boot
/dev/mapper/vg_rootlhr-Vol01 3.0G 70M 2.8G 3% /tmp
/dev/mapper/vg_rootlhr-Vol03 3.0G 69M 2.8G 3% /home
/dev/mapper/vg_orasoft-lv_orasoft_u01 20G 172M 19G 1% /u01
.host:/ 331G 234G 97G 71% /mnt/hgfs
/dev/mapper/vg_orasoft-lv_orasoft_soft 20G 11G 8.6G 54% /soft
[root@raclhr-12cR1-N1 soft]# du -sh ./*
2.8G ./database
2.5G ./grid
16K ./lost+found
1.6G ./p17694377_121020_Linux-x86-64_1of8.zip
968M ./p17694377_121020_Linux-x86-64_2of8.zip
1.7G ./p17694377_121020_Linux-x86-64_3of8.zip
618M ./p17694377_121020_Linux-x86-64_4of8.zip
[root@raclhr-12cR1-N1 soft]#
```

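1.5 Pre-installation checks

1.5.1 Install the cvuqdisk package

Before installing 12cR1 Grid Infrastructure for RAC, you will usually run the Cluster Verification Utility (CVU), which performs system checks to confirm that the current configuration meets the installation requirements.

First check whether the cvuqdisk package is installed:

```
rpm -qa cvuqdisk
```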

If it is not installed, install it on both nodes with the following commands:

```
export CVUQDISK_GRP=oinstall
cd /soft/grid/rpm/
rpm -ivh cvuqdisk-1.0.9-1.rpm
```

```
[root@raclhr-12cR1-N1 soft]# cd /soft/grid/
[root@raclhr-12cR1-N1 grid]# ll
total 80
drwxr-xr-x 4 root root 4096 Jan 16 17:04 install
-rwxr-xr-x 1 root root 34132 Jul 11 2014 readme.html
drwxrwxr-x 2 root root 4096 Jul 7 2014 response
drwxr-xr-x 2 root root 4096 Jul 7 2014 rpm
-rwxr-xr-x 1 root root 5085 Dec 20 2013 runcluvfy.sh
-rwxr-xr-x 1 root root 8534 Jul 7 2014 runInstaller
drwxrwxr-x 2 root root 4096 Jul 7 2014 sshsetup
drwxr-xr-x 14 root root 4096 Jul 7 2014 stage
-rwxr-xr-x 1 root root 500 Feb 7 2013 welcome.html
[root@raclhr-12cR1-N1 grid]# cd rpm
[root@raclhr-12cR1-N1 rpm]# ll
total 12
-rwxr-xr-x 1 root root 8976 Jul 1 2014 cvuqdisk-1.0.9-1.rpm
[root@raclhr-12cR1-N1 rpm]# export CVUQDISK_GRP=oinstall
[root@raclhr-12cR1-N1 rpm]# cd /soft/grid/rpm/
[root@raclhr-12cR1-N1 rpm]# rpm -ivh cvuqdisk-1.0.9-1.rpm
Preparing... ########################################### [100%]
1:cvuqdisk ########################################### [100%]
[root@raclhr-12cR1-N1 rpm]# rpm -qa cvuqdisk
cvuqdisk-1.0.9-1.x86_64
[root@raclhr-12cR1-N1 rpm]#
[root@raclhr-12cR1-N1 sshsetup]# ls -l /usr/sbin/cvuqdisk
-rwsr-xr-x 1 root oinstall 11920 Jul 1 2014 /usr/sbin/cvuqdisk
[root@raclhr-12cR1-N1 sshsetup]#
```

Copy the package to node 2 and install it there:

```
# on node 1, from /soft/grid/rpm:
scp cvuqdisk-1.0.9-1.rpm root@192.168.59.161:/tmp
# on node 2:
export CVUQDISK_GRP=oinstall
rpm -ivh /tmp/cvuqdisk-1.0.9-1.rpm
```

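1.5.2 Configure SSH trust (SSH user equivalence)

User equivalence means that the Oracle software owner can connect from one node to another without entering a password. Both the GI and the DB installers first install on one node and then automatically copy the installed files to the same directory on the remote node. This copy runs in the background and gives the user no chance to enter a password, so equivalence must be configured.

Although the installer can configure SSH equivalence automatically during installation, it is still recommended to configure it manually beforehand.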

Create links for ssh and scp. First check whether they already exist:

```
ls -l /usr/local/bin/ssh
ls -l /usr/local/bin/scp
```

If they do not exist, create them:

```
/bin/ln -s /usr/bin/ssh /usr/local/bin/ssh
/bin/ln -s /usr/bin/scp /usr/local/bin/scp
```
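The existence check and the link creation can be combined so that the step is safe to rerun. In this hypothetical bash helper an existing link or file is left alone, so a second run does not fail the way a second plain ln -s would:

```bash
#!/usr/bin/env bash
# ensure_symlink TARGET LINK: hypothetical helper; creates LINK -> TARGET only
# when nothing exists at LINK yet (a dangling symlink also counts as existing).
ensure_symlink() {
  local target=$1 link=$2
  if [ -e "$link" ] || [ -L "$link" ]; then
    echo "exists: $link"
  else
    ln -s "$target" "$link" && echo "created: $link -> $target"
  fi
}

# Example (as root):
#   ensure_symlink /usr/bin/ssh /usr/local/bin/ssh
#   ensure_symlink /usr/bin/scp /usr/local/bin/scp
```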

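Note also that even after SSH is configured, connections are often refused. In most cases the cause lies in one of three files: /etc/ssh/sshd_config, /etc/hosts.allow and /etc/hosts.deny.

1. In /etc/ssh/sshd_config, allow the groups that the grid and oracle users belong to. AllowGroups is an sshd server option, so it belongs in sshd_config rather than in the client file ssh_config; once it is set, only members of the listed groups can log in over SSH:

```
AllowGroups sysadmin asmdba oinstall
```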

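2. Edit /etc/hosts.deny with vi: either comment out sshd:ALL with #, or change it to sshd: ALL EXCEPT followed by the public and private IPs of both nodes, separated by commas. The addresses in the example below do not match this article's IP plan; substitute your own nodes' addresses:

```
sshd : ALL EXCEPT 192.168.59.128,192.168.59.129,10.10.10.5,10.10.10.6
```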

Alternatively, edit /etc/hosts.allow and add sshd:ALL, or:

```
sshd:192.168.59.128,192.168.59.129,10.10.10.5,10.10.10.6
```

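If the two files conflict, /etc/hosts.allow takes precedence: it is consulted first, and a matching entry there grants access before /etc/hosts.deny is checked.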
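To avoid typos in the daemon name or the address list, the two lines can be generated from the node addresses. A hypothetical bash sketch; the usage comment uses the public and private IPs from this article's IP plan (section 1.1.2). These files only take effect if sshd is built with TCP wrappers (libwrap) support.

```bash
#!/usr/bin/env bash
# join_commas ARG...: join the arguments with commas.
join_commas() {
  local IFS=,
  echo "$*"
}

# hosts_allow_line IP...: line for /etc/hosts.allow (allow sshd from these IPs).
hosts_allow_line() {
  echo "sshd: $(join_commas "$@")"
}

# hosts_deny_line IP...: line for /etc/hosts.deny (deny sshd to everyone else).
hosts_deny_line() {
  echo "sshd: ALL EXCEPT $(join_commas "$@")"
}

# Example with this article's public and private addresses:
#   hosts_deny_line 192.168.59.160 192.168.59.161 192.168.2.100 192.168.2.101
```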

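3. Restart the sshd service: /etc/init.d/sshd restart

1.5.2.1 Method 1: Manual configuration

Configure SSH separately for the grid and oracle users. The transcript below was taken on nodes named ZFLHRDB1 and ZFLHRDB2 and assumes each user has already created RSA and DSA key pairs on both nodes (ssh-keygen -t rsa and ssh-keygen -t dsa). First run ssh ... date from each node against every public and private host name: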

----------------------------------------------------------------------------------

```
[oracle@ZFLHRDB1 ~]$ ssh ZFLHRDB1 date
[oracle@ZFLHRDB1 ~]$ ssh ZFLHRDB2 date
[oracle@ZFLHRDB1 ~]$ ssh ZFLHRDB1-priv date
[oracle@ZFLHRDB1 ~]$ ssh ZFLHRDB2-priv date

[oracle@ZFLHRDB2 ~]$ ssh ZFLHRDB1 date
[oracle@ZFLHRDB2 ~]$ ssh ZFLHRDB2 date
[oracle@ZFLHRDB2 ~]$ ssh ZFLHRDB1-priv date
[oracle@ZFLHRDB2 ~]$ ssh ZFLHRDB2-priv date
```

-----------------------------------------------------------------------------------

Then, on ZFLHRDB1, collect the public keys of both nodes into authorized_keys and copy the file to ZFLHRDB2:

```
[oracle@ZFLHRDB1 ~]$ cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys
[oracle@ZFLHRDB1 ~]$ cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys
[oracle@ZFLHRDB1 ~]$ ssh ZFLHRDB2 cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys   -> enter the password of ZFLHRDB2
[oracle@ZFLHRDB1 ~]$ ssh ZFLHRDB2 cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys   -> enter the password of ZFLHRDB2
[oracle@ZFLHRDB1 ~]$ scp ~/.ssh/authorized_keys ZFLHRDB2:~/.ssh/authorized_keys   -> enter the password of ZFLHRDB2
```

-----------------------------------------------------------------------------------

Test connectivity between the two nodes:

```
[oracle@ZFLHRDB1 ~]$ ssh ZFLHRDB1 date
[oracle@ZFLHRDB1 ~]$ ssh ZFLHRDB2 date
[oracle@ZFLHRDB1 ~]$ ssh ZFLHRDB1-priv date
[oracle@ZFLHRDB1 ~]$ ssh ZFLHRDB2-priv date

[oracle@ZFLHRDB2 ~]$ ssh ZFLHRDB1 date
[oracle@ZFLHRDB2 ~]$ ssh ZFLHRDB2 date
[oracle@ZFLHRDB2 ~]$ ssh ZFLHRDB1-priv date
[oracle@ZFLHRDB2 ~]$ ssh ZFLHRDB2-priv date
```

If the second run no longer prompts for a password and the commands succeed, SSH equivalence is configured correctly.
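This test can be automated. The bash sketch below builds the public and -priv host names for a list of nodes and runs ssh -o BatchMode=yes ... date against each one. BatchMode makes ssh fail instead of prompting, so any host that would still ask for a password (or for confirmation of an unknown host key) is reported as FAIL. Run it as grid and as oracle on every node; the function names are hypothetical.

```bash
#!/usr/bin/env bash
# rac_ssh_targets NODE...: print each node name and its -priv alias, one per line.
rac_ssh_targets() {
  local n
  for n in "$@"; do
    echo "$n"
    echo "$n-priv"
  done
}

# check_ssh_equivalence NODE...: try a non-interactive login to every target;
# returns 1 if any target fails (for example, because it would prompt).
check_ssh_equivalence() {
  local h rc=0
  for h in $(rac_ssh_targets "$@"); do
    if ssh -n -o BatchMode=yes -o ConnectTimeout=5 "$h" date >/dev/null 2>&1; then
      echo "OK   $h"
    else
      echo "FAIL $h"
      rc=1
    fi
  done
  return "$rc"
}

# Example: check_ssh_equivalence raclhr-12cR1-N1 raclhr-12cR1-N2
```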

1.5.2.2 Method 2: Set up trust quickly with sshUserSetup.sh (recommended)

sshUserSetup.sh is located in the sshsetup directory of the unzipped GI installation media. The following two commands only need to be run on one node, as root:

```
./sshUserSetup.sh -user grid -hosts "raclhr-12cR1-N2 raclhr-12cR1-N1" -advanced -exverify -confirm
./sshUserSetup.sh -user oracle -hosts "raclhr-12cR1-N2 raclhr-12cR1-N1" -advanced -exverify -confirm
```

Answer yes, enter the passwords when prompted, and press Enter through the remaining prompts.

```
[oracle@raclhr-12cR1-N1 grid]$ ll
total 80
drwxr-xr-x 4 root root 4096 Jan 16 17:04 install
-rwxr-xr-x 1 root root 34132 Jul 11 2014 readme.html
drwxrwxr-x 2 root root 4096 Jul 7 2014 response
drwxr-xr-x 2 root root 4096 Jul 7 2014 rpm
-rwxr-xr-x 1 root root 5085 Dec 20 2013 runcluvfy.sh
-rwxr-xr-x 1 root root 8534 Jul 7 2014 runInstaller
drwxrwxr-x 2 root root 4096 Jul 7 2014 sshsetup
drwxr-xr-x 14 root root 4096 Jul 7 2014 stage
-rwxr-xr-x 1 root root 500 Feb 7 2013 welcome.html
[oracle@raclhr-12cR1-N1 grid]$ cd sshsetup/
[oracle@raclhr-12cR1-N1 sshsetup]$ ll
total 32
-rwxr-xr-x 1 root root 32334 Jun 7 2013 sshUserSetup.sh
[oracle@raclhr-12cR1-N1 sshsetup]$ pwd
/soft/grid/sshsetup
```

```
ssh raclhr-12cR1-N1 date
ssh raclhr-12cR1-N2 date
ssh raclhr-12cR1-N1-priv date
ssh raclhr-12cR1-N2-priv date
ssh-agent $SHELL
ssh-add
```

1.5.3 Cluster hardware checks: verifying the configuration before installation

Use Cluster Verification Utility (cvu)

Before installing Oracle Clusterware, use CVU to ensure that your cluster is prepared for an installation:

Oracle provides CVU to perform system checks in preparation for an installation, patch updates, or other system changes. In addition, CVU can generate fixup scripts that can change many kernel parameters to at least the minimum settings required for a successful installation.

Using CVU can help system administrators, storage administrators, and DBAs ensure that everyone has completed the system configuration and preinstallation steps.

```
./runcluvfy.sh -help
./runcluvfy.sh stage -pre crsinst -n rac1,rac2 -fixup -verbose
```

Install the operating system package cvuqdisk on both Oracle RAC nodes. Without cvuqdisk, Cluster Verification Utility cannot discover shared disks, and you will receive the error message "Package cvuqdisk not installed" when the Cluster Verification Utility is run (either manually or at the end of the Oracle grid infrastructure installation). Use the cvuqdisk RPM for your hardware architecture (for example, x86_64 or i386). The cvuqdisk RPM can be found on the Oracle grid infrastructure installation media in the rpm directory. For the purpose of this article, the Oracle grid infrastructure media was extracted to the /home/grid/software/oracle/grid directory on racnode1 as the grid user.

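The check only needs to be run on one of the nodes.

Before installing GRID, it is recommended to use CVU (Cluster Verification Utility) to check the pre-installation environment for CRS. Run as the grid user:

```
export CVUQDISK_GRP=oinstall
# before installation, from the unzipped grid media:
./runcluvfy.sh stage -pre crsinst -n rac1,rac2 -fixup -verbose
# after Grid Infrastructure has been installed:
$ORACLE_HOME/bin/cluvfy stage -pre crsinst -n all -verbose -fixup
```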

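Checks that do not pass are shown as failed. Some failures can be repaired with the generated fixup script, some must be fixed manually depending on the situation, and some can be ignored.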

```
[root@raclhr-12cR1-N1 grid]# su - grid
[grid@raclhr-12cR1-N1 ~]$ cd /soft/grid/
[grid@raclhr-12cR1-N1 grid]$ ./runcluvfy.sh stage -pre crsinst -n raclhr-12cR1-N1,raclhr-12cR1-N2 -fixup -verbose
```
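The -verbose output is long. Saving it to a file and filtering for the summary lines makes the failures easy to spot. This hypothetical bash filter keeps lines that start with "Result:" or "Check for" and end in "failed", which matches the summary lines in the output from this run; other cluvfy versions may word their summaries differently.

```bash
#!/usr/bin/env bash
# cluvfy_failures FILE: hypothetical filter; prints the summary lines of failed
# checks from saved runcluvfy.sh / cluvfy output.
cluvfy_failures() {
  grep -E '^[[:space:]]*(Result:|Check for).*failed[[:space:]]*$' "$1"
}

# Example:
#   ./runcluvfy.sh stage -pre crsinst -n raclhr-12cR1-N1,raclhr-12cR1-N2 -fixup -verbose > /tmp/cluvfy.log 2>&1
#   cluvfy_failures /tmp/cluvfy.log
```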

In the author's environment, the following 3 checks failed:

```
Check: Total memory
Node Name        Available                 Required                  Status
---------------  ------------------------  ------------------------  ----------
raclhr-12cr1-n2  1.9518GB (2046592.0KB)    4GB (4194304.0KB)         failed
raclhr-12cr1-n1  1.9518GB (2046592.0KB)    4GB (4194304.0KB)         failed
Result: Total memory check failed

Check: Swap space
Node Name        Available                 Required                  Status
---------------  ------------------------  ------------------------  ----------
raclhr-12cr1-n2  2GB (2097144.0KB)         2.9277GB (3069888.0KB)    failed
raclhr-12cr1-n1  2GB (2097144.0KB)         2.9277GB (3069888.0KB)    failed
Result: Swap space check failed

Checking integrity of file "/etc/resolv.conf" across nodes
PRVF-5600 : On node "raclhr-12cr1-n2" The following lines in file "/etc/resolv.conf" could not be parsed as they are not in proper format: raclhr-12cr1-n2
PRVF-5600 : On node "raclhr-12cr1-n1" The following lines in file "/etc/resolv.conf" could not be parsed as they are not in proper format: raclhr-12cr1-n1
Check for integrity of file "/etc/resolv.conf" failed
```

All three can be ignored in this lab environment.
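The memory and swap checks are simple arithmetic. The required swap reported above (3069888 KB for 2046592 KB of RAM) is exactly 1.5 times the RAM, which is consistent with the Linux swap rule in the 12c Grid Infrastructure installation guide as I understand it: 1.5 x RAM for 1 to 2 GB of RAM, equal to RAM between 2 and 16 GB, and 16 GB above that. The 4 GB memory minimum is the "Required" value from the same output. The function names are hypothetical; confirm the thresholds against the installation guide for your exact release.

```bash
#!/usr/bin/env bash
GB_KB=1048576          # 1 GB expressed in KB
MIN_RAM_KB=4194304     # 4 GB, the "Required" value in the memory check above

# required_swap_kb RAM_KB: swap (KB) required for RAM_KB of memory, assuming
# the rule 1.5 x RAM (up to 2 GB), = RAM (2-16 GB), 16 GB (above 16 GB).
required_swap_kb() {
  local ram=$1
  if [ "$ram" -le $(( 2 * GB_KB )) ]; then
    echo $(( ram * 3 / 2 ))
  elif [ "$ram" -le $(( 16 * GB_KB )) ]; then
    echo "$ram"
  else
    echo $(( 16 * GB_KB ))
  fi
}

# ram_ok RAM_KB: succeeds if RAM_KB meets the 4 GB minimum.
ram_ok() { [ "$1" -ge "$MIN_RAM_KB" ]; }

# Example on a node:
#   ram=$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)
#   swap=$(awk '/^SwapTotal:/ {print $2}' /proc/meminfo)
#   echo "need swap $(required_swap_kb "$ram") KB, have $swap KB"
```

With the 2046592 KB reported for these VMs, required_swap_kb returns 3069888, matching the cluvfy output, and ram_ok fails, which is why the memory check failed.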

About Me

...............................................................................................................................

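● Author: 小麥苗 (xiaomaimiao), who focuses exclusively on database technology, with an emphasis on applying it in practice

● This article is published simultaneously on itpub (http://blog.itpub.net/26736162), cnblogs (http://www.cnblogs.com/lhrbest) and the author's WeChat official account (xiaomaimiaolhr)

● itpub URL of this article: http://blog.itpub.net/26736162/viewspace-2132768/

● cnblogs URL of this article: http://www.cnblogs.com/lhrbest/p/6337496.html

● PDF version of this article and the author's cloud drive: http://blog.itpub.net/26736162/viewspace-1624453/

● QQ group: 230161599; WeChat group: contact privately

● To contact me, add QQ 642808185 as a friend and state the reason

● Completed at the Agricultural Bank of China between 2017-01-12 08:00 and 2017-01-21 24:00

● The content comes from the author's study notes, partly compiled from the Internet; apologies for any infringement or inaccuracy

● All rights reserved; you are welcome to share this article, but please keep the source when reposting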

...............................................................................................................................

