深度學習與計算機視覺系列(9)_串一串神經網路之動手實現小例子

作者:寒小陽&&龍心塵

時間:2016年1月。

出處:

http://blog.csdn.net/han_xiaoyang/article/details/50521072

http://blog.csdn.net/longxinchen_ml/article/details/50521933

宣告:版權所有,轉載請聯絡作者並註明出處

1.引言

前面8小節,算從神經網路的結構、簡單原理、資料準備與處理、神經元選擇、損失函式選擇等方面把神經網路過了一遍。這個部分我們打算把知識點串一串,動手實現一個簡單的2維平面神經網路分類器,去分割平面上的不同類別樣本點。為了循序漸進,我們打算先實現一個簡單的線性分類器,然後再擴充到非線性的2層神經網路。我們可以看到簡單的淺層神經網路,在這個例子上就能夠有分割程度遠高於線性分類器的效果。

2.樣本資料的產生

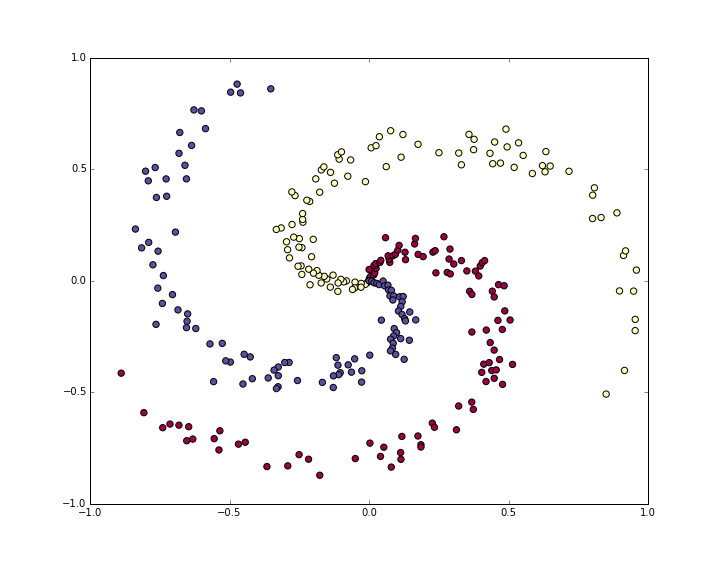

為了凸顯一下神經網路強大的空間分割能力,我們打算產生出一部分對於線性分類器不那麼容易分割的樣本點,比如說我們生成一份螺旋狀分佈的樣本點,如下:

<code class="language-python hljs has-numbering" style="display: block; padding: 0px; background-color: transparent; color: inherit; box-sizing: border-box; font-family: 'Source Code Pro', monospace;font-size:undefined; white-space: pre; border-top-left-radius: 0px; border-top-right-radius: 0px; border-bottom-right-radius: 0px; border-bottom-left-radius: 0px; word-wrap: normal; background-position: initial initial; background-repeat: initial initial;">N = <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">100</span> <span class="hljs-comment" style="color: rgb(136, 0, 0); box-sizing: border-box;"># 每個類中的樣本點</span> D = <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">2</span> <span class="hljs-comment" style="color: rgb(136, 0, 0); box-sizing: border-box;"># 維度</span> K = <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">3</span> <span class="hljs-comment" style="color: rgb(136, 0, 0); box-sizing: border-box;"># 類別個數</span> X = np.zeros((N*K,D)) <span class="hljs-comment" style="color: rgb(136, 0, 0); box-sizing: border-box;"># 樣本input</span> y = np.zeros(N*K, dtype=<span class="hljs-string" style="color: rgb(0, 136, 0); box-sizing: border-box;">'uint8'</span>) <span class="hljs-comment" style="color: rgb(136, 0, 0); box-sizing: border-box;"># 類別標籤</span> <span class="hljs-keyword" style="color: rgb(0, 0, 136); box-sizing: border-box;">for</span> j <span class="hljs-keyword" style="color: rgb(0, 0, 136); box-sizing: border-box;">in</span> xrange(K): ix = range(N*j,N*(j+<span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">1</span>)) r = np.linspace(<span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0.0</span>,<span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">1</span>,N) <span class="hljs-comment" style="color: rgb(136, 0, 0); box-sizing: border-box;"># radius</span> t = np.linspace(j*<span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">4</span>,(j+<span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">1</span>)*<span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">4</span>,N) + np.random.randn(N)*<span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0.2</span> <span class="hljs-comment" style="color: rgb(136, 0, 0); box-sizing: border-box;"># theta</span> X[ix] = np.c_[r*np.sin(t), r*np.cos(t)] y[ix] = j <span class="hljs-comment" style="color: rgb(136, 0, 0); box-sizing: border-box;"># 視覺化一下我們的樣本點</span> plt.scatter(X[:, <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0</span>], X[:, <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">1</span>], c=y, s=<span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">40</span>, cmap=plt.cm.Spectral)</code><ul class="pre-numbering" style="box-sizing: border-box; position: absolute; width: 50px; background-color: rgb(238, 238, 238); top: 0px; left: 0px; margin: 0px; padding: 6px 0px 40px; border-right-width: 1px; border-right-style: solid; border-right-color: rgb(221, 221, 221); list-style: none; text-align: right;"><li style="box-sizing: border-box; padding: 0px 5px;">1</li><li style="box-sizing: border-box; padding: 0px 5px;">2</li><li style="box-sizing: border-box; padding: 0px 5px;">3</li><li style="box-sizing: border-box; padding: 0px 5px;">4</li><li style="box-sizing: border-box; padding: 0px 5px;">5</li><li style="box-sizing: border-box; padding: 0px 5px;">6</li><li style="box-sizing: border-box; padding: 0px 5px;">7</li><li style="box-sizing: border-box; padding: 0px 5px;">8</li><li style="box-sizing: border-box; padding: 0px 5px;">9</li><li style="box-sizing: border-box; padding: 0px 5px;">10</li><li style="box-sizing: border-box; padding: 0px 5px;">11</li><li style="box-sizing: border-box; padding: 0px 5px;">12</li><li style="box-sizing: border-box; padding: 0px 5px;">13</li></ul>

得到如下的樣本分佈:

紫色,紅色和黃色分佈代表不同的3種類別。

一般來說,拿到資料都要做預處理,包括之前提到的去均值和方差歸一化。不過我們構造的資料幅度已經在-1到1之間了,所以這裡不用做這個操作。

3.使用Softmax線性分類器

3.1 初始化引數

我們先在訓練集上用softmax線性分類器試試。如我們在之前的章節提到的,我們這裡用的softmax分類器,使用的是一個線性的得分函式/score function,使用的損失函式是互熵損失/cross-entropy loss。包含的引數包括得分函式裡面用到的權重矩陣W和偏移量b,我們先隨機初始化這些引數。

<code class="language-python hljs has-numbering" style="display: block; padding: 0px; background-color: transparent; color: inherit; box-sizing: border-box; font-family: 'Source Code Pro', monospace;font-size:undefined; white-space: pre; border-top-left-radius: 0px; border-top-right-radius: 0px; border-bottom-right-radius: 0px; border-bottom-left-radius: 0px; word-wrap: normal; background-position: initial initial; background-repeat: initial initial;"><span class="hljs-comment" style="color: rgb(136, 0, 0); box-sizing: border-box;">#隨機初始化引數</span> <span class="hljs-keyword" style="color: rgb(0, 0, 136); box-sizing: border-box;">import</span> numpy <span class="hljs-keyword" style="color: rgb(0, 0, 136); box-sizing: border-box;">as</span> np <span class="hljs-comment" style="color: rgb(136, 0, 0); box-sizing: border-box;">#D=2表示維度,K=3表示類別數</span> W = <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0.01</span> * np.random.randn(D,K) b = np.zeros((<span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">1</span>,K))</code><ul class="pre-numbering" style="box-sizing: border-box; position: absolute; width: 50px; background-color: rgb(238, 238, 238); top: 0px; left: 0px; margin: 0px; padding: 6px 0px 40px; border-right-width: 1px; border-right-style: solid; border-right-color: rgb(221, 221, 221); list-style: none; text-align: right;"><li style="box-sizing: border-box; padding: 0px 5px;">1</li><li style="box-sizing: border-box; padding: 0px 5px;">2</li><li style="box-sizing: border-box; padding: 0px 5px;">3</li><li style="box-sizing: border-box; padding: 0px 5px;">4</li><li style="box-sizing: border-box; padding: 0px 5px;">5</li></ul>

3.2 計算得分

線性的得分函式,將原始的資料對映到得分域非常簡單,只是一個直接的矩陣乘法。

<code class="language-python hljs has-numbering" style="display: block; padding: 0px; background-color: transparent; color: inherit; box-sizing: border-box; font-family: 'Source Code Pro', monospace;font-size:undefined; white-space: pre; border-top-left-radius: 0px; border-top-right-radius: 0px; border-bottom-right-radius: 0px; border-bottom-left-radius: 0px; word-wrap: normal; background-position: initial initial; background-repeat: initial initial;"><span class="hljs-comment" style="color: rgb(136, 0, 0); box-sizing: border-box;">#使用得分函式計算得分</span> scores = np.dot(X, W) + b</code><ul class="pre-numbering" style="box-sizing: border-box; position: absolute; width: 50px; background-color: rgb(238, 238, 238); top: 0px; left: 0px; margin: 0px; padding: 6px 0px 40px; border-right-width: 1px; border-right-style: solid; border-right-color: rgb(221, 221, 221); list-style: none; text-align: right;"><li style="box-sizing: border-box; padding: 0px 5px;">1</li><li style="box-sizing: border-box; padding: 0px 5px;">2</li></ul>

在我們給的這個例子中,我們有2個2維點集,所以做完乘法過後,矩陣得分scores其實是一個[300*3]的矩陣,每一行都給出對應3各類別(紫,紅,黃)的得分。

3.3 計算損失

然後我們就要開始使用我們的損失函式計算損失了,我們之前也提到過,損失函式計算出來的結果代表著預測結果和真實結果之間的吻合度,我們的目標是最小化這個結果。直觀一點理解,我們希望對每個樣本而言,對應正確類別的得分高於其他類別的得分,如果滿足這個條件,那麼損失函式計算的結果是一個比較低的值,如果判定的類別不是正確類別,則結果值會很高。我們之前提到了,softmax分類器裡面,使用的損失函式是互熵損失。一起回憶一下,假設f是得分向量,那麼我們的互熵損失是用如下的形式定義的:

直觀地理解一下上述形式,就是Softmax分類器把類別得分向量f中每個值都看成對應三個類別的log似然概率。因此我們在求每個類別對應概率的時候,使用指數函式還原它,然後歸一化。從上面形式裡面大家也可以看得出來,得到的值總是在0到1之間的,因此從某種程度上說我們可以把它理解成概率。如果判定類別是錯誤類別,那麼上述公式的結果就會趨於無窮,也就是說損失相當相當大,相反,如果判定類別正確,那麼損失就接近log(1)=0。這和我們直觀理解上要最小化損失是完全吻合的。

當然,當然,別忘了,完整的損失函式定義,一定會加上正則化項,也就是說,完整的損失L應該有如下的形式:

好,我們實現以下,根據上面計算得到的得分scores,我們計算以下各個類別上的概率:

<code class="language-python hljs has-numbering" style="display: block; padding: 0px; background-color: transparent; color: inherit; box-sizing: border-box; font-family: 'Source Code Pro', monospace;font-size:undefined; white-space: pre; border-top-left-radius: 0px; border-top-right-radius: 0px; border-bottom-right-radius: 0px; border-bottom-left-radius: 0px; word-wrap: normal; background-position: initial initial; background-repeat: initial initial;"><span class="hljs-comment" style="color: rgb(136, 0, 0); box-sizing: border-box;"># 用指數函式還原</span> exp_scores = np.exp(scores) <span class="hljs-comment" style="color: rgb(136, 0, 0); box-sizing: border-box;"># 歸一化</span> probs = exp_scores / np.sum(exp_scores, axis=<span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">1</span>, keepdims=<span class="hljs-keyword" style="color: rgb(0, 0, 136); box-sizing: border-box;">True</span>)</code><ul class="pre-numbering" style="box-sizing: border-box; position: absolute; width: 50px; background-color: rgb(238, 238, 238); top: 0px; left: 0px; margin: 0px; padding: 6px 0px 40px; border-right-width: 1px; border-right-style: solid; border-right-color: rgb(221, 221, 221); list-style: none; text-align: right;"><li style="box-sizing: border-box; padding: 0px 5px;">1</li><li style="box-sizing: border-box; padding: 0px 5px;">2</li><li style="box-sizing: border-box; padding: 0px 5px;">3</li><li style="box-sizing: border-box; padding: 0px 5px;">4</li></ul>

在我們的例子中,我們最後得到了一個[300*3]的概率矩陣prob,其中每一行都包含屬於3個類別的概率。然後我們就可以計算完整的互熵損失了:

<code class="language-python hljs has-numbering" style="display: block; padding: 0px; background-color: transparent; color: inherit; box-sizing: border-box; font-family: 'Source Code Pro', monospace;font-size:undefined; white-space: pre; border-top-left-radius: 0px; border-top-right-radius: 0px; border-bottom-right-radius: 0px; border-bottom-left-radius: 0px; word-wrap: normal; background-position: initial initial; background-repeat: initial initial;"><span class="hljs-comment" style="color: rgb(136, 0, 0); box-sizing: border-box;">#計算log概率和互熵損失</span> corect_logprobs = -np.log(probs[range(num_examples),y]) data_loss = np.sum(corect_logprobs)/num_examples <span class="hljs-comment" style="color: rgb(136, 0, 0); box-sizing: border-box;">#加上正則化項</span> reg_loss = <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0.5</span>*reg*np.sum(W*W) loss = data_loss + reg_loss</code><ul class="pre-numbering" style="box-sizing: border-box; position: absolute; width: 50px; background-color: rgb(238, 238, 238); top: 0px; left: 0px; margin: 0px; padding: 6px 0px 40px; border-right-width: 1px; border-right-style: solid; border-right-color: rgb(221, 221, 221); list-style: none; text-align: right;"><li style="box-sizing: border-box; padding: 0px 5px;">1</li><li style="box-sizing: border-box; padding: 0px 5px;">2</li><li style="box-sizing: border-box; padding: 0px 5px;">3</li><li style="box-sizing: border-box; padding: 0px 5px;">4</li><li style="box-sizing: border-box; padding: 0px 5px;">5</li><li style="box-sizing: border-box; padding: 0px 5px;">6</li></ul>

正則化強度λ在上述程式碼中是reg,最開始的時候我們可能會得到loss=1.1,是通過np.log(1.0/3)得到的(假定初始的時候屬於3個類別的概率一樣),我們現在想最小化損失loss

3.4 計算梯度與梯度回傳

我們能夠用損失函式評估預測值與真實值之間的差距,下一步要做的事情自然是最小化這個值。我們用傳統的梯度下降來解決這個問題。多解釋一句,梯度下降的過程是:我們先選取一組隨機引數作為初始值,然後計算損失函式在這組引數上的梯度(負梯度的方向表明了損失函式減小的方向),接著我們朝著負梯度的方向迭代和更新引數,不斷重複這個過程直至損失函式最小化。為了清楚一點說明這個問題,我們引入一箇中間變數p,它是歸一化後的概率向量,如下:

我們現在希望知道朝著哪個方向調整權重能夠減小損失,也就是說,我們需要計算梯度∂Li/∂fk。損失Li從p計算而來,再退一步,依賴於f。於是我們又要做高數題,使用鏈式求導法則了,不過梯度的結果倒是非常簡單:

解釋一下,公式的最後一個部分表示yi=k的時候,取值為1。整個公式其實非常的優雅和簡單。假設我們計算的概率p=[0.2, 0.3, 0.5],而中間的類別才是真實的結果類別。根據梯度求解公式,我們得到梯度df=[0.2,−0.7,0.5]。我們想想梯度的含義,其實這個結果是可解釋性非常高的:大家都知道,梯度是最快上升方向,我們減掉它乘以步長才會讓損失函式值減小。第1項和第3項(其實就是不正確的類別項)梯度為正,表明增加它們只會讓最後的損失/loss增大,而我們的目標是減小loss;中間的梯度項-0.7其實再告訴我們,增加這一項,能減小損失Li,達到我們最終的目的。

我們依舊記probs為所有樣本屬於各個類別的概率,記dscores為得分上的梯度,我們可以有以下的程式碼:

<code class="language-python hljs has-numbering" style="display: block; padding: 0px; background-color: transparent; color: inherit; box-sizing: border-box; font-family: 'Source Code Pro', monospace;font-size:undefined; white-space: pre; border-top-left-radius: 0px; border-top-right-radius: 0px; border-bottom-right-radius: 0px; border-bottom-left-radius: 0px; word-wrap: normal; background-position: initial initial; background-repeat: initial initial;">dscores = probs dscores[range(num_examples),y] -= <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">1</span> dscores /= num_examples</code><ul class="pre-numbering" style="box-sizing: border-box; position: absolute; width: 50px; background-color: rgb(238, 238, 238); top: 0px; left: 0px; margin: 0px; padding: 6px 0px 40px; border-right-width: 1px; border-right-style: solid; border-right-color: rgb(221, 221, 221); list-style: none; text-align: right;"><li style="box-sizing: border-box; padding: 0px 5px;">1</li><li style="box-sizing: border-box; padding: 0px 5px;">2</li><li style="box-sizing: border-box; padding: 0px 5px;">3</li></ul>

我們計算的得分scores = np.dot(X, W)+b,因為上面已經算好了scores的梯度dscores,我們現在可以回傳梯度計算W和b了:

<code class="language-python hljs has-numbering" style="display: block; padding: 0px; background-color: transparent; color: inherit; box-sizing: border-box; font-family: 'Source Code Pro', monospace;font-size:undefined; white-space: pre; border-top-left-radius: 0px; border-top-right-radius: 0px; border-bottom-right-radius: 0px; border-bottom-left-radius: 0px; word-wrap: normal; background-position: initial initial; background-repeat: initial initial;">dW = np.dot(X.T, dscores) db = np.sum(dscores, axis=<span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0</span>, keepdims=<span class="hljs-keyword" style="color: rgb(0, 0, 136); box-sizing: border-box;">True</span>) <span class="hljs-comment" style="color: rgb(136, 0, 0); box-sizing: border-box;">#得記著正則化梯度哈</span> dW += reg*W</code><ul class="pre-numbering" style="box-sizing: border-box; position: absolute; width: 50px; background-color: rgb(238, 238, 238); top: 0px; left: 0px; margin: 0px; padding: 6px 0px 40px; border-right-width: 1px; border-right-style: solid; border-right-color: rgb(221, 221, 221); list-style: none; text-align: right;"><li style="box-sizing: border-box; padding: 0px 5px;">1</li><li style="box-sizing: border-box; padding: 0px 5px;">2</li><li style="box-sizing: border-box; padding: 0px 5px;">3</li><li style="box-sizing: border-box; padding: 0px 5px;">4</li></ul>

我們通過矩陣的乘法得到梯度部分,權重W的部分加上了正則化項的梯度。因為我們在設定正則化項的時候用了係數0.5(ddw(12λw2)=λw),因此直接用reg*W就可以表示出正則化的梯度部分。

3.5 引數迭代與更新

在得到所需的所有部分之後,我們就可以進行引數更新了:

<code class="language-python hljs has-numbering" style="display: block; padding: 0px; background-color: transparent; color: inherit; box-sizing: border-box; font-family: 'Source Code Pro', monospace;font-size:undefined; white-space: pre; border-top-left-radius: 0px; border-top-right-radius: 0px; border-bottom-right-radius: 0px; border-bottom-left-radius: 0px; word-wrap: normal; background-position: initial initial; background-repeat: initial initial;"><span class="hljs-comment" style="color: rgb(136, 0, 0); box-sizing: border-box;">#引數迭代更新</span> W += -step_size * dW b += -step_size * db</code><ul class="pre-numbering" style="box-sizing: border-box; position: absolute; width: 50px; background-color: rgb(238, 238, 238); top: 0px; left: 0px; margin: 0px; padding: 6px 0px 40px; border-right-width: 1px; border-right-style: solid; border-right-color: rgb(221, 221, 221); list-style: none; text-align: right;"><li style="box-sizing: border-box; padding: 0px 5px;">1</li><li style="box-sizing: border-box; padding: 0px 5px;">2</li><li style="box-sizing: border-box; padding: 0px 5px;">3</li></ul>

3.6 大雜合:訓練SoftMax分類器

<code class="language-python hljs has-numbering" style="display: block; padding: 0px; background-color: transparent; color: inherit; box-sizing: border-box; font-family: 'Source Code Pro', monospace;font-size:undefined; white-space: pre; border-top-left-radius: 0px; border-top-right-radius: 0px; border-bottom-right-radius: 0px; border-bottom-left-radius: 0px; word-wrap: normal; background-position: initial initial; background-repeat: initial initial;"><span class="hljs-comment" style="color: rgb(136, 0, 0); box-sizing: border-box;">#程式碼部分組一起,訓練線性分類器</span>

<span class="hljs-comment" style="color: rgb(136, 0, 0); box-sizing: border-box;">#隨機初始化引數</span>

W = <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0.01</span> * np.random.randn(D,K)

b = np.zeros((<span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">1</span>,K))

<span class="hljs-comment" style="color: rgb(136, 0, 0); box-sizing: border-box;">#需要自己敲定的步長和正則化係數</span>

step_size = <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">1e-0</span>

reg = <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">1e-3</span> <span class="hljs-comment" style="color: rgb(136, 0, 0); box-sizing: border-box;">#正則化係數</span>

<span class="hljs-comment" style="color: rgb(136, 0, 0); box-sizing: border-box;">#梯度下降迭代迴圈</span>

num_examples = X.shape[<span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0</span>]

<span class="hljs-keyword" style="color: rgb(0, 0, 136); box-sizing: border-box;">for</span> i <span class="hljs-keyword" style="color: rgb(0, 0, 136); box-sizing: border-box;">in</span> xrange(<span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">200</span>):

<span class="hljs-comment" style="color: rgb(136, 0, 0); box-sizing: border-box;"># 計算類別得分, 結果矩陣為[N x K]</span>

scores = np.dot(X, W) + b

<span class="hljs-comment" style="color: rgb(136, 0, 0); box-sizing: border-box;"># 計算類別概率</span>

exp_scores = np.exp(scores)

probs = exp_scores / np.sum(exp_scores, axis=<span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">1</span>, keepdims=<span class="hljs-keyword" style="color: rgb(0, 0, 136); box-sizing: border-box;">True</span>) <span class="hljs-comment" style="color: rgb(136, 0, 0); box-sizing: border-box;"># [N x K]</span>

<span class="hljs-comment" style="color: rgb(136, 0, 0); box-sizing: border-box;"># 計算損失loss(包括互熵損失和正則化部分)</span>

corect_logprobs = -np.log(probs[range(num_examples),y])

data_loss = np.sum(corect_logprobs)/num_examples

reg_loss = <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0.5</span>*reg*np.sum(W*W)

loss = data_loss + reg_loss

<span class="hljs-keyword" style="color: rgb(0, 0, 136); box-sizing: border-box;">if</span> i % <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">10</span> == <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0</span>:

<span class="hljs-keyword" style="color: rgb(0, 0, 136); box-sizing: border-box;">print</span> <span class="hljs-string" style="color: rgb(0, 136, 0); box-sizing: border-box;">"iteration %d: loss %f"</span> % (i, loss)

<span class="hljs-comment" style="color: rgb(136, 0, 0); box-sizing: border-box;"># 計算得分上的梯度</span>

dscores = probs

dscores[range(num_examples),y] -= <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">1</span>

dscores /= num_examples

<span class="hljs-comment" style="color: rgb(136, 0, 0); box-sizing: border-box;"># 計算和回傳梯度</span>

dW = np.dot(X.T, dscores)

db = np.sum(dscores, axis=<span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0</span>, keepdims=<span class="hljs-keyword" style="color: rgb(0, 0, 136); box-sizing: border-box;">True</span>)

dW += reg*W <span class="hljs-comment" style="color: rgb(136, 0, 0); box-sizing: border-box;"># 正則化梯度</span>

<span class="hljs-comment" style="color: rgb(136, 0, 0); box-sizing: border-box;">#引數更新</span>

W += -step_size * dW

b += -step_size * db</code><ul class="pre-numbering" style="box-sizing: border-box; position: absolute; width: 50px; background-color: rgb(238, 238, 238); top: 0px; left: 0px; margin: 0px; padding: 6px 0px 40px; border-right-width: 1px; border-right-style: solid; border-right-color: rgb(221, 221, 221); list-style: none; text-align: right;"><li style="box-sizing: border-box; padding: 0px 5px;">1</li><li style="box-sizing: border-box; padding: 0px 5px;">2</li><li style="box-sizing: border-box; padding: 0px 5px;">3</li><li style="box-sizing: border-box; padding: 0px 5px;">4</li><li style="box-sizing: border-box; padding: 0px 5px;">5</li><li style="box-sizing: border-box; padding: 0px 5px;">6</li><li style="box-sizing: border-box; padding: 0px 5px;">7</li><li style="box-sizing: border-box; padding: 0px 5px;">8</li><li style="box-sizing: border-box; padding: 0px 5px;">9</li><li style="box-sizing: border-box; padding: 0px 5px;">10</li><li style="box-sizing: border-box; padding: 0px 5px;">11</li><li style="box-sizing: border-box; padding: 0px 5px;">12</li><li style="box-sizing: border-box; padding: 0px 5px;">13</li><li style="box-sizing: border-box; padding: 0px 5px;">14</li><li style="box-sizing: border-box; padding: 0px 5px;">15</li><li style="box-sizing: border-box; padding: 0px 5px;">16</li><li style="box-sizing: border-box; padding: 0px 5px;">17</li><li style="box-sizing: border-box; padding: 0px 5px;">18</li><li style="box-sizing: border-box; padding: 0px 5px;">19</li><li style="box-sizing: border-box; padding: 0px 5px;">20</li><li style="box-sizing: border-box; padding: 0px 5px;">21</li><li style="box-sizing: border-box; padding: 0px 5px;">22</li><li style="box-sizing: border-box; padding: 0px 5px;">23</li><li style="box-sizing: border-box; padding: 0px 5px;">24</li><li style="box-sizing: border-box; padding: 0px 5px;">25</li><li style="box-sizing: border-box; padding: 0px 5px;">26</li><li style="box-sizing: border-box; padding: 0px 5px;">27</li><li style="box-sizing: border-box; padding: 0px 5px;">28</li><li style="box-sizing: border-box; padding: 0px 5px;">29</li><li style="box-sizing: border-box; padding: 0px 5px;">30</li><li style="box-sizing: border-box; padding: 0px 5px;">31</li><li style="box-sizing: border-box; padding: 0px 5px;">32</li><li style="box-sizing: border-box; padding: 0px 5px;">33</li><li style="box-sizing: border-box; padding: 0px 5px;">34</li><li style="box-sizing: border-box; padding: 0px 5px;">35</li><li style="box-sizing: border-box; padding: 0px 5px;">36</li><li style="box-sizing: border-box; padding: 0px 5px;">37</li><li style="box-sizing: border-box; padding: 0px 5px;">38</li><li style="box-sizing: border-box; padding: 0px 5px;">39</li><li style="box-sizing: border-box; padding: 0px 5px;">40</li><li style="box-sizing: border-box; padding: 0px 5px;">41</li><li style="box-sizing: border-box; padding: 0px 5px;">42</li><li style="box-sizing: border-box; padding: 0px 5px;">43</li></ul>

得到結果:

<code class="language-python hljs has-numbering" style="display: block; padding: 0px; background-color: transparent; color: inherit; box-sizing: border-box; font-family: 'Source Code Pro', monospace;font-size:undefined; white-space: pre; border-top-left-radius: 0px; border-top-right-radius: 0px; border-bottom-right-radius: 0px; border-bottom-left-radius: 0px; word-wrap: normal; background-position: initial initial; background-repeat: initial initial;">iteration <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0</span>: loss <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">1.096956</span> iteration <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">10</span>: loss <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0.917265</span> iteration <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">20</span>: loss <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0.851503</span> iteration <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">30</span>: loss <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0.822336</span> iteration <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">40</span>: loss <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0.807586</span> iteration <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">50</span>: loss <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0.799448</span> iteration <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">60</span>: loss <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0.794681</span> iteration <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">70</span>: loss <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0.791764</span> iteration <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">80</span>: loss <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0.789920</span> iteration <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">90</span>: loss <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0.788726</span> iteration <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">100</span>: loss <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0.787938</span> iteration <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">110</span>: loss <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0.787409</span> iteration <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">120</span>: loss <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0.787049</span> iteration <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">130</span>: loss <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0.786803</span> iteration <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">140</span>: loss <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0.786633</span> iteration <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">150</span>: loss <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0.786514</span> iteration <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">160</span>: loss <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0.786431</span> iteration <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">170</span>: loss <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0.786373</span> iteration <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">180</span>: loss <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0.786331</span> iteration <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">190</span>: loss <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0.786302</span></code><ul class="pre-numbering" style="box-sizing: border-box; position: absolute; width: 50px; background-color: rgb(238, 238, 238); top: 0px; left: 0px; margin: 0px; padding: 6px 0px 40px; border-right-width: 1px; border-right-style: solid; border-right-color: rgb(221, 221, 221); list-style: none; text-align: right;"><li style="box-sizing: border-box; padding: 0px 5px;">1</li><li style="box-sizing: border-box; padding: 0px 5px;">2</li><li style="box-sizing: border-box; padding: 0px 5px;">3</li><li style="box-sizing: border-box; padding: 0px 5px;">4</li><li style="box-sizing: border-box; padding: 0px 5px;">5</li><li style="box-sizing: border-box; padding: 0px 5px;">6</li><li style="box-sizing: border-box; padding: 0px 5px;">7</li><li style="box-sizing: border-box; padding: 0px 5px;">8</li><li style="box-sizing: border-box; padding: 0px 5px;">9</li><li style="box-sizing: border-box; padding: 0px 5px;">10</li><li style="box-sizing: border-box; padding: 0px 5px;">11</li><li style="box-sizing: border-box; padding: 0px 5px;">12</li><li style="box-sizing: border-box; padding: 0px 5px;">13</li><li style="box-sizing: border-box; padding: 0px 5px;">14</li><li style="box-sizing: border-box; padding: 0px 5px;">15</li><li style="box-sizing: border-box; padding: 0px 5px;">16</li><li style="box-sizing: border-box; padding: 0px 5px;">17</li><li style="box-sizing: border-box; padding: 0px 5px;">18</li><li style="box-sizing: border-box; padding: 0px 5px;">19</li><li style="box-sizing: border-box; padding: 0px 5px;">20</li></ul>

190次迴圈之後,結果大致收斂了。我們評估一下準確度:

<code class="language-python hljs has-numbering" style="display: block; padding: 0px; background-color: transparent; color: inherit; box-sizing: border-box; font-family: 'Source Code Pro', monospace;font-size:undefined; white-space: pre; border-top-left-radius: 0px; border-top-right-radius: 0px; border-bottom-right-radius: 0px; border-bottom-left-radius: 0px; word-wrap: normal; background-position: initial initial; background-repeat: initial initial;"><span class="hljs-comment" style="color: rgb(136, 0, 0); box-sizing: border-box;">#評估準確度</span> scores = np.dot(X, W) + b predicted_class = np.argmax(scores, axis=<span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">1</span>) <span class="hljs-keyword" style="color: rgb(0, 0, 136); box-sizing: border-box;">print</span> <span class="hljs-string" style="color: rgb(0, 136, 0); box-sizing: border-box;">'training accuracy: %.2f'</span> % (np.mean(predicted_class == y))</code><ul class="pre-numbering" style="box-sizing: border-box; position: absolute; width: 50px; background-color: rgb(238, 238, 238); top: 0px; left: 0px; margin: 0px; padding: 6px 0px 40px; border-right-width: 1px; border-right-style: solid; border-right-color: rgb(221, 221, 221); list-style: none; text-align: right;"><li style="box-sizing: border-box; padding: 0px 5px;">1</li><li style="box-sizing: border-box; padding: 0px 5px;">2</li><li style="box-sizing: border-box; padding: 0px 5px;">3</li><li style="box-sizing: border-box; padding: 0px 5px;">4</li></ul>

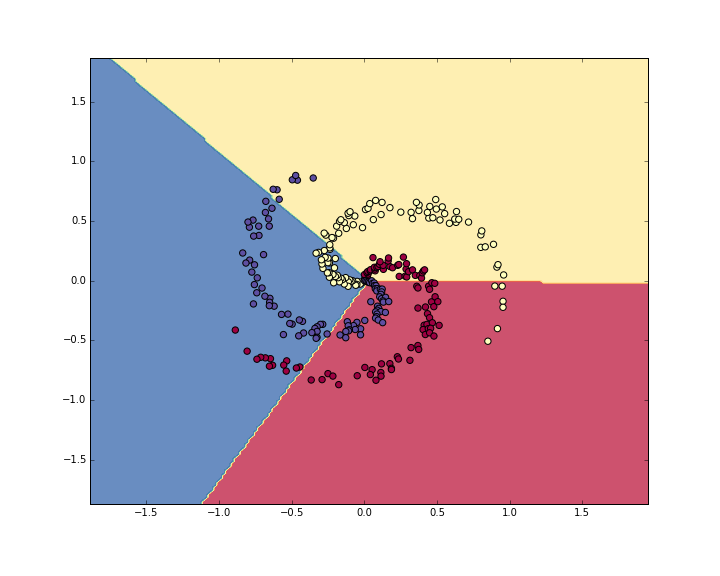

輸出結果為49%。不太好,對吧?實際上也是可理解的,你想想,一份螺旋形的資料,你偏執地要用一個線性分類器去分割,不管怎麼調整這個線性分類器,都非常非常困難。我們視覺化一下資料看看決策邊界(decision boundaries):

4.使用神經網路分類

從剛才的例子裡可以看出,一個線性分類器,在現在的資料集上效果並不好。我們知道神經網路可以做非線性的分割,那我們就試試神經網路,看看會不會有更好的效果。對於這樣一個簡單問題,我們用單隱藏層的神經網路就可以了,這樣一個神經網路我們需要2層的權重和偏移量:

<code class="language-python hljs has-numbering" style="display: block; padding: 0px; background-color: transparent; color: inherit; box-sizing: border-box; font-family: 'Source Code Pro', monospace;font-size:undefined; white-space: pre; border-top-left-radius: 0px; border-top-right-radius: 0px; border-bottom-right-radius: 0px; border-bottom-left-radius: 0px; word-wrap: normal; background-position: initial initial; background-repeat: initial initial;"><span class="hljs-comment" style="color: rgb(136, 0, 0); box-sizing: border-box;"># 初始化引數</span> h = <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">100</span> <span class="hljs-comment" style="color: rgb(136, 0, 0); box-sizing: border-box;"># 隱層大小(神經元個數)</span> W = <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0.01</span> * np.random.randn(D,h) b = np.zeros((<span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">1</span>,h)) W2 = <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0.01</span> * np.random.randn(h,K) b2 = np.zeros((<span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">1</span>,K))</code><ul class="pre-numbering" style="box-sizing: border-box; position: absolute; width: 50px; background-color: rgb(238, 238, 238); top: 0px; left: 0px; margin: 0px; padding: 6px 0px 40px; border-right-width: 1px; border-right-style: solid; border-right-color: rgb(221, 221, 221); list-style: none; text-align: right;"><li style="box-sizing: border-box; padding: 0px 5px;">1</li><li style="box-sizing: border-box; padding: 0px 5px;">2</li><li style="box-sizing: border-box; padding: 0px 5px;">3</li><li style="box-sizing: border-box; padding: 0px 5px;">4</li><li style="box-sizing: border-box; padding: 0px 5px;">5</li><li style="box-sizing: border-box; padding: 0px 5px;">6</li></ul>

然後前向計算的過程也稍有一些變化:

<code class="language-python hljs has-numbering" style="display: block; padding: 0px; background-color: transparent; color: inherit; box-sizing: border-box; font-family: 'Source Code Pro', monospace;font-size:undefined; white-space: pre; border-top-left-radius: 0px; border-top-right-radius: 0px; border-bottom-right-radius: 0px; border-bottom-left-radius: 0px; word-wrap: normal; background-position: initial initial; background-repeat: initial initial;"><span class="hljs-comment" style="color: rgb(136, 0, 0); box-sizing: border-box;">#2層神經網路的前向計算</span> hidden_layer = np.maximum(<span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0</span>, np.dot(X, W) + b) <span class="hljs-comment" style="color: rgb(136, 0, 0); box-sizing: border-box;"># 用的 ReLU單元</span> scores = np.dot(hidden_layer, W2) + b2</code><ul class="pre-numbering" style="box-sizing: border-box; position: absolute; width: 50px; background-color: rgb(238, 238, 238); top: 0px; left: 0px; margin: 0px; padding: 6px 0px 40px; border-right-width: 1px; border-right-style: solid; border-right-color: rgb(221, 221, 221); list-style: none; text-align: right;"><li style="box-sizing: border-box; padding: 0px 5px;">1</li><li style="box-sizing: border-box; padding: 0px 5px;">2</li><li style="box-sizing: border-box; padding: 0px 5px;">3</li></ul>

注意到這裡,和之前線性分類器中的得分計算相比,多了一行程式碼計算,我們首先計算第一層神經網路結果,然後作為第二層的輸入,計算最後的結果。哦,對了,程式碼裡大家也看的出來,我們這裡使用的是ReLU神經單元。

其他的東西都沒太大變化。我們依舊按照之前的方式去計算loss,然後計算梯度dscores。不過反向回傳梯度的過程形式上也有一些小小的變化。我們看下面的程式碼,可能覺得和Softmax分類器裡面看到的基本一樣,但注意到我們用hidden_layer替換掉了之前的X:

<code class="language-python hljs has-numbering" style="display: block; padding: 0px; background-color: transparent; color: inherit; box-sizing: border-box; font-family: 'Source Code Pro', monospace;font-size:undefined; white-space: pre; border-top-left-radius: 0px; border-top-right-radius: 0px; border-bottom-right-radius: 0px; border-bottom-left-radius: 0px; word-wrap: normal; background-position: initial initial; background-repeat: initial initial;"><span class="hljs-comment" style="color: rgb(136, 0, 0); box-sizing: border-box;"># 梯度回傳與反向傳播</span> <span class="hljs-comment" style="color: rgb(136, 0, 0); box-sizing: border-box;"># 對W2和b2的第一次計算</span> dW2 = np.dot(hidden_layer.T, dscores) db2 = np.sum(dscores, axis=<span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0</span>, keepdims=<span class="hljs-keyword" style="color: rgb(0, 0, 136); box-sizing: border-box;">True</span>)</code><ul class="pre-numbering" style="box-sizing: border-box; position: absolute; width: 50px; background-color: rgb(238, 238, 238); top: 0px; left: 0px; margin: 0px; padding: 6px 0px 40px; border-right-width: 1px; border-right-style: solid; border-right-color: rgb(221, 221, 221); list-style: none; text-align: right;"><li style="box-sizing: border-box; padding: 0px 5px;">1</li><li style="box-sizing: border-box; padding: 0px 5px;">2</li><li style="box-sizing: border-box; padding: 0px 5px;">3</li><li style="box-sizing: border-box; padding: 0px 5px;">4</li></ul>

恩,並沒有完事啊,因為hidden_layer本身是一個包含其他引數和資料的函式,我們得計算一下它的梯度:

<code class="language-python hljs has-numbering" style="display: block; padding: 0px; background-color: transparent; color: inherit; box-sizing: border-box; font-family: 'Source Code Pro', monospace;font-size:undefined; white-space: pre; border-top-left-radius: 0px; border-top-right-radius: 0px; border-bottom-right-radius: 0px; border-bottom-left-radius: 0px; word-wrap: normal; background-position: initial initial; background-repeat: initial initial;">dhidden = np.dot(dscores, W2.T)</code><ul class="pre-numbering" style="box-sizing: border-box; position: absolute; width: 50px; background-color: rgb(238, 238, 238); top: 0px; left: 0px; margin: 0px; padding: 6px 0px 40px; border-right-width: 1px; border-right-style: solid; border-right-color: rgb(221, 221, 221); list-style: none; text-align: right;"><li style="box-sizing: border-box; padding: 0px 5px;">1</li></ul>

現在我們有隱層輸出的梯度了,下一步我們要反向傳播到ReLU神經元了。不過這個計算非常簡單,因為r=max(0,x),同時我們又有drdx=1(x>0)。用鏈式法則串起來後,我們可以看到,回傳的梯度大於0的時候,經過ReLU之後,保持原樣;如果小於0,那本次回傳就到此結束了。因此,我們這一部分非常簡單:

<code class="language-python hljs has-numbering" style="display: block; padding: 0px; background-color: transparent; color: inherit; box-sizing: border-box; font-family: 'Source Code Pro', monospace;font-size:undefined; white-space: pre; border-top-left-radius: 0px; border-top-right-radius: 0px; border-bottom-right-radius: 0px; border-bottom-left-radius: 0px; word-wrap: normal; background-position: initial initial; background-repeat: initial initial;"><span class="hljs-comment" style="color: rgb(136, 0, 0); box-sizing: border-box;">#梯度回傳經過ReLU</span> dhidden[hidden_layer <= <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0</span>] = <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0</span></code><ul class="pre-numbering" style="box-sizing: border-box; position: absolute; width: 50px; background-color: rgb(238, 238, 238); top: 0px; left: 0px; margin: 0px; padding: 6px 0px 40px; border-right-width: 1px; border-right-style: solid; border-right-color: rgb(221, 221, 221); list-style: none; text-align: right;"><li style="box-sizing: border-box; padding: 0px 5px;">1</li><li style="box-sizing: border-box; padding: 0px 5px;">2</li></ul>

終於,翻山越嶺,回到第一層,拿到總的權重和偏移量的梯度:

<code class="language-python hljs has-numbering" style="display: block; padding: 0px; background-color: transparent; color: inherit; box-sizing: border-box; font-family: 'Source Code Pro', monospace;font-size:undefined; white-space: pre; border-top-left-radius: 0px; border-top-right-radius: 0px; border-bottom-right-radius: 0px; border-bottom-left-radius: 0px; word-wrap: normal; background-position: initial initial; background-repeat: initial initial;">dW = np.dot(X.T, dhidden) db = np.sum(dhidden, axis=<span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0</span>, keepdims=<span class="hljs-keyword" style="color: rgb(0, 0, 136); box-sizing: border-box;">True</span>)</code><ul class="pre-numbering" style="box-sizing: border-box; position: absolute; width: 50px; background-color: rgb(238, 238, 238); top: 0px; left: 0px; margin: 0px; padding: 6px 0px 40px; border-right-width: 1px; border-right-style: solid; border-right-color: rgb(221, 221, 221); list-style: none; text-align: right;"><li style="box-sizing: border-box; padding: 0px 5px;">1</li><li style="box-sizing: border-box; padding: 0px 5px;">2</li></ul>

來,來,來。組一組,我們把整個神經網路的過程串起來:

<code class="language-python hljs has-numbering" style="display: block; padding: 0px; background-color: transparent; color: inherit; box-sizing: border-box; font-family: 'Source Code Pro', monospace;font-size:undefined; white-space: pre; border-top-left-radius: 0px; border-top-right-radius: 0px; border-bottom-right-radius: 0px; border-bottom-left-radius: 0px; word-wrap: normal; background-position: initial initial; background-repeat: initial initial;"><span class="hljs-comment" style="color: rgb(136, 0, 0); box-sizing: border-box;"># 隨機初始化引數</span>

h = <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">100</span> <span class="hljs-comment" style="color: rgb(136, 0, 0); box-sizing: border-box;"># 隱層大小</span>

W = <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0.01</span> * np.random.randn(D,h)

b = np.zeros((<span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">1</span>,h))

W2 = <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0.01</span> * np.random.randn(h,K)

b2 = np.zeros((<span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">1</span>,K))

<span class="hljs-comment" style="color: rgb(136, 0, 0); box-sizing: border-box;"># 手動敲定的幾個引數</span>

step_size = <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">1e-0</span>

reg = <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">1e-3</span> <span class="hljs-comment" style="color: rgb(136, 0, 0); box-sizing: border-box;"># 正則化引數</span>

<span class="hljs-comment" style="color: rgb(136, 0, 0); box-sizing: border-box;"># 梯度迭代與迴圈</span>

num_examples = X.shape[<span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0</span>]

<span class="hljs-keyword" style="color: rgb(0, 0, 136); box-sizing: border-box;">for</span> i <span class="hljs-keyword" style="color: rgb(0, 0, 136); box-sizing: border-box;">in</span> xrange(<span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">10000</span>):

hidden_layer = np.maximum(<span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0</span>, np.dot(X, W) + b) <span class="hljs-comment" style="color: rgb(136, 0, 0); box-sizing: border-box;">#使用的ReLU神經元</span>

scores = np.dot(hidden_layer, W2) + b2

<span class="hljs-comment" style="color: rgb(136, 0, 0); box-sizing: border-box;"># 計算類別概率</span>

exp_scores = np.exp(scores)

probs = exp_scores / np.sum(exp_scores, axis=<span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">1</span>, keepdims=<span class="hljs-keyword" style="color: rgb(0, 0, 136); box-sizing: border-box;">True</span>) <span class="hljs-comment" style="color: rgb(136, 0, 0); box-sizing: border-box;"># [N x K]</span>

<span class="hljs-comment" style="color: rgb(136, 0, 0); box-sizing: border-box;"># 計算互熵損失與正則化項</span>

corect_logprobs = -np.log(probs[range(num_examples),y])

data_loss = np.sum(corect_logprobs)/num_examples

reg_loss = <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0.5</span>*reg*np.sum(W*W) + <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0.5</span>*reg*np.sum(W2*W2)

loss = data_loss + reg_loss

<span class="hljs-keyword" style="color: rgb(0, 0, 136); box-sizing: border-box;">if</span> i % <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">1000</span> == <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0</span>:

<span class="hljs-keyword" style="color: rgb(0, 0, 136); box-sizing: border-box;">print</span> <span class="hljs-string" style="color: rgb(0, 136, 0); box-sizing: border-box;">"iteration %d: loss %f"</span> % (i, loss)

<span class="hljs-comment" style="color: rgb(136, 0, 0); box-sizing: border-box;"># 計算梯度</span>

dscores = probs

dscores[range(num_examples),y] -= <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">1</span>

dscores /= num_examples

<span class="hljs-comment" style="color: rgb(136, 0, 0); box-sizing: border-box;"># 梯度回傳</span>

dW2 = np.dot(hidden_layer.T, dscores)

db2 = np.sum(dscores, axis=<span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0</span>, keepdims=<span class="hljs-keyword" style="color: rgb(0, 0, 136); box-sizing: border-box;">True</span>)

dhidden = np.dot(dscores, W2.T)

dhidden[hidden_layer <= <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0</span>] = <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0</span>

<span class="hljs-comment" style="color: rgb(136, 0, 0); box-sizing: border-box;"># 拿到最後W,b上的梯度</span>

dW = np.dot(X.T, dhidden)

db = np.sum(dhidden, axis=<span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0</span>, keepdims=<span class="hljs-keyword" style="color: rgb(0, 0, 136); box-sizing: border-box;">True</span>)

<span class="hljs-comment" style="color: rgb(136, 0, 0); box-sizing: border-box;"># 加上正則化梯度部分</span>

dW2 += reg * W2

dW += reg * W

<span class="hljs-comment" style="color: rgb(136, 0, 0); box-sizing: border-box;"># 引數迭代與更新</span>

W += -step_size * dW

b += -step_size * db

W2 += -step_size * dW2

b2 += -step_size * db2</code><ul class="pre-numbering" style="box-sizing: border-box; position: absolute; width: 50px; background-color: rgb(238, 238, 238); top: 0px; left: 0px; margin: 0px; padding: 6px 0px 40px; border-right-width: 1px; border-right-style: solid; border-right-color: rgb(221, 221, 221); list-style: none; text-align: right;"><li style="box-sizing: border-box; padding: 0px 5px;">1</li><li style="box-sizing: border-box; padding: 0px 5px;">2</li><li style="box-sizing: border-box; padding: 0px 5px;">3</li><li style="box-sizing: border-box; padding: 0px 5px;">4</li><li style="box-sizing: border-box; padding: 0px 5px;">5</li><li style="box-sizing: border-box; padding: 0px 5px;">6</li><li style="box-sizing: border-box; padding: 0px 5px;">7</li><li style="box-sizing: border-box; padding: 0px 5px;">8</li><li style="box-sizing: border-box; padding: 0px 5px;">9</li><li style="box-sizing: border-box; padding: 0px 5px;">10</li><li style="box-sizing: border-box; padding: 0px 5px;">11</li><li style="box-sizing: border-box; padding: 0px 5px;">12</li><li style="box-sizing: border-box; padding: 0px 5px;">13</li><li style="box-sizing: border-box; padding: 0px 5px;">14</li><li style="box-sizing: border-box; padding: 0px 5px;">15</li><li style="box-sizing: border-box; padding: 0px 5px;">16</li><li style="box-sizing: border-box; padding: 0px 5px;">17</li><li style="box-sizing: border-box; padding: 0px 5px;">18</li><li style="box-sizing: border-box; padding: 0px 5px;">19</li><li style="box-sizing: border-box; padding: 0px 5px;">20</li><li style="box-sizing: border-box; padding: 0px 5px;">21</li><li style="box-sizing: border-box; padding: 0px 5px;">22</li><li style="box-sizing: border-box; padding: 0px 5px;">23</li><li style="box-sizing: border-box; padding: 0px 5px;">24</li><li style="box-sizing: border-box; padding: 0px 5px;">25</li><li style="box-sizing: border-box; padding: 0px 5px;">26</li><li style="box-sizing: border-box; padding: 0px 5px;">27</li><li style="box-sizing: border-box; padding: 0px 5px;">28</li><li style="box-sizing: border-box; padding: 0px 5px;">29</li><li style="box-sizing: border-box; padding: 0px 5px;">30</li><li style="box-sizing: border-box; padding: 0px 5px;">31</li><li style="box-sizing: border-box; padding: 0px 5px;">32</li><li style="box-sizing: border-box; padding: 0px 5px;">33</li><li style="box-sizing: border-box; padding: 0px 5px;">34</li><li style="box-sizing: border-box; padding: 0px 5px;">35</li><li style="box-sizing: border-box; padding: 0px 5px;">36</li><li style="box-sizing: border-box; padding: 0px 5px;">37</li><li style="box-sizing: border-box; padding: 0px 5px;">38</li><li style="box-sizing: border-box; padding: 0px 5px;">39</li><li style="box-sizing: border-box; padding: 0px 5px;">40</li><li style="box-sizing: border-box; padding: 0px 5px;">41</li><li style="box-sizing: border-box; padding: 0px 5px;">42</li><li style="box-sizing: border-box; padding: 0px 5px;">43</li><li style="box-sizing: border-box; padding: 0px 5px;">44</li><li style="box-sizing: border-box; padding: 0px 5px;">45</li><li style="box-sizing: border-box; padding: 0px 5px;">46</li><li style="box-sizing: border-box; padding: 0px 5px;">47</li><li style="box-sizing: border-box; padding: 0px 5px;">48</li><li style="box-sizing: border-box; padding: 0px 5px;">49</li><li style="box-sizing: border-box; padding: 0px 5px;">50</li><li style="box-sizing: border-box; padding: 0px 5px;">51</li><li style="box-sizing: border-box; padding: 0px 5px;">52</li><li style="box-sizing: border-box; padding: 0px 5px;">53</li><li style="box-sizing: border-box; padding: 0px 5px;">54</li><li style="box-sizing: border-box; padding: 0px 5px;">55</li></ul>

輸出結果:

<code class="language-python hljs has-numbering" style="display: block; padding: 0px; background-color: transparent; color: inherit; box-sizing: border-box; font-family: 'Source Code Pro', monospace;font-size:undefined; white-space: pre; border-top-left-radius: 0px; border-top-right-radius: 0px; border-bottom-right-radius: 0px; border-bottom-left-radius: 0px; word-wrap: normal; background-position: initial initial; background-repeat: initial initial;">iteration <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0</span>: loss <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">1.098744</span> iteration <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">1000</span>: loss <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0.294946</span> iteration <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">2000</span>: loss <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0.259301</span> iteration <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">3000</span>: loss <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0.248310</span> iteration <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">4000</span>: loss <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0.246170</span> iteration <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">5000</span>: loss <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0.245649</span> iteration <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">6000</span>: loss <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0.245491</span> iteration <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">7000</span>: loss <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0.245400</span> iteration <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">8000</span>: loss <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0.245335</span> iteration <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">9000</span>: loss <span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0.245292</span></code><ul class="pre-numbering" style="box-sizing: border-box; position: absolute; width: 50px; background-color: rgb(238, 238, 238); top: 0px; left: 0px; margin: 0px; padding: 6px 0px 40px; border-right-width: 1px; border-right-style: solid; border-right-color: rgb(221, 221, 221); list-style: none; text-align: right;"><li style="box-sizing: border-box; padding: 0px 5px;">1</li><li style="box-sizing: border-box; padding: 0px 5px;">2</li><li style="box-sizing: border-box; padding: 0px 5px;">3</li><li style="box-sizing: border-box; padding: 0px 5px;">4</li><li style="box-sizing: border-box; padding: 0px 5px;">5</li><li style="box-sizing: border-box; padding: 0px 5px;">6</li><li style="box-sizing: border-box; padding: 0px 5px;">7</li><li style="box-sizing: border-box; padding: 0px 5px;">8</li><li style="box-sizing: border-box; padding: 0px 5px;">9</li><li style="box-sizing: border-box; padding: 0px 5px;">10</li></ul>

現在的訓練準確度為:

<code class="language-python hljs has-numbering" style="display: block; padding: 0px; background-color: transparent; color: inherit; box-sizing: border-box; font-family: 'Source Code Pro', monospace;font-size:undefined; white-space: pre; border-top-left-radius: 0px; border-top-right-radius: 0px; border-bottom-right-radius: 0px; border-bottom-left-radius: 0px; word-wrap: normal; background-position: initial initial; background-repeat: initial initial;"><span class="hljs-comment" style="color: rgb(136, 0, 0); box-sizing: border-box;">#計算分類準確度</span> hidden_layer = np.maximum(<span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">0</span>, np.dot(X, W) + b) scores = np.dot(hidden_layer, W2) + b2 predicted_class = np.argmax(scores, axis=<span class="hljs-number" style="color: rgb(0, 102, 102); box-sizing: border-box;">1</span>) <span class="hljs-keyword" style="color: rgb(0, 0, 136); box-sizing: border-box;">print</span> <span class="hljs-string" style="color: rgb(0, 136, 0); box-sizing: border-box;">'training accuracy: %.2f'</span> % (np.mean(predicted_class == y))</code><ul class="pre-numbering" style="box-sizing: border-box; position: absolute; width: 50px; background-color: rgb(238, 238, 238); top: 0px; left: 0px; margin: 0px; padding: 6px 0px 40px; border-right-width: 1px; border-right-style: solid; border-right-color: rgb(221, 221, 221); list-style: none; text-align: right;"><li style="box-sizing: border-box; padding: 0px 5px;">1</li><li style="box-sizing: border-box; padding: 0px 5px;">2</li><li style="box-sizing: border-box; padding: 0px 5px;">3</li><li style="box-sizing: border-box; padding: 0px 5px;">4</li><li style="box-sizing: border-box; padding: 0px 5px;">5</li></ul>

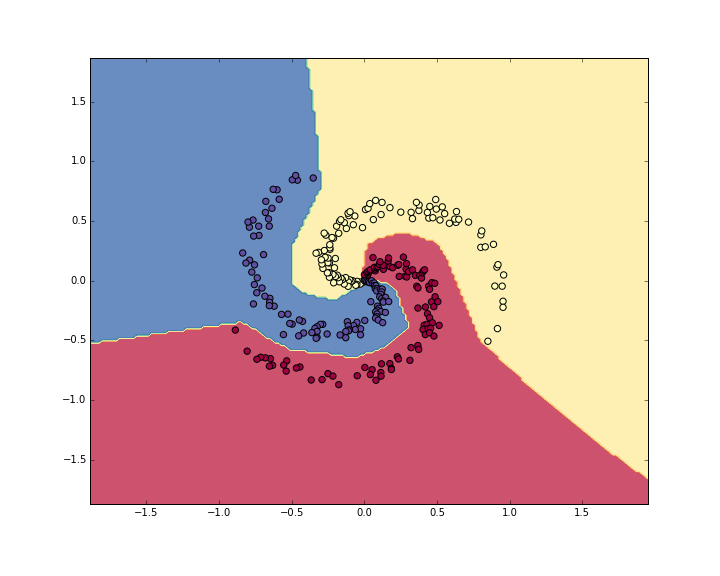

你猜怎麼著,準確度為98%,我們視覺化一下資料和現在的決策邊界:

看起來效果比之前好多了,這充分地印證了我們在手把手入門神經網路系列(1)_從初等數學的角度初探神經網路中提到的,神經網路對於空間強大的分割能力,對於非線性的不規則圖形,能有很強的劃分割槽域和區分類別能力。

相關文章

- 深度學習系列(2)——神經網路與深度學習深度學習神經網路

- 計算機視覺與深度學習公司計算機視覺深度學習

- 如何理解神經網路空間,深度學習在計算機視覺中的應用有哪些?神經網路深度學習計算機視覺

- AI之(神經網路+深度學習)AI神經網路深度學習

- 再聊神經網路與深度學習神經網路深度學習

- 深度學習與圖神經網路深度學習神經網路

- 【深度學習基礎-08】神經網路演算法(Neural Network)上--BP神經網路例子計算說明深度學習神經網路演算法

- 深度學習與CV教程(11) | 迴圈神經網路及視覺應用深度學習神經網路視覺

- 深度學習與圖神經網路學習分享:CNN 經典網路之-ResNet深度學習神經網路CNN

- 計算機視覺中的深度學習計算機視覺深度學習

- 計算機視覺與深度學習應用關係計算機視覺深度學習

- 深度學習與神經網路學習筆記一深度學習神經網路筆記

- 深度學習之step by step搭建神經網路深度學習神經網路

- 深度學習之RNN(迴圈神經網路)深度學習RNN神經網路

- 神經網路和深度學習神經網路深度學習

- 《神經網路和深度學習》系列文章七:實現我們的神經網路來分類數字神經網路深度學習

- 史丹佛—深度學習和計算機視覺深度學習計算機視覺

- 計算機視覺崗實習面經計算機視覺

- 動手學深度學習第十四課:實現、訓練和應用迴圈神經網路深度學習神經網路

- 《神經網路和深度學習》系列文章三十八:深度神經網路為何很難訓練?神經網路深度學習

- NLP與深度學習(二)迴圈神經網路深度學習神經網路

- 初探神經網路與深度學習 —— 感知器神經網路深度學習

- 【深度學習】神經網路入門深度學習神經網路

- 神經網路:提升深度學習模型的表現神經網路深度學習模型

- 深度學習經典卷積神經網路之AlexNet深度學習卷積神經網路

- 【深度學習篇】--神經網路中的卷積神經網路深度學習神經網路卷積

- 神經網路與深度學習 課程複習總結神經網路深度學習

- ImageNet冠軍帶你入門計算機視覺:卷積神經網路計算機視覺卷積神經網路

- 計算機視覺與深度學習,看這本書就夠了計算機視覺深度學習

- 【深度學習】1.4深層神經網路深度學習神經網路

- 深度學習教程 | 深層神經網路深度學習神經網路

- 深度學習三:卷積神經網路深度學習卷積神經網路

- 神經網路和深度學習(1):前言神經網路深度學習

- 深度學習技術實踐與圖神經網路新技術深度學習神經網路

- 《神經網路和深度學習》系列文章三十:如何選擇神經網路的超引數神經網路深度學習

- 深度學習與CV教程(4) | 神經網路與反向傳播深度學習神經網路反向傳播

- 吳恩達《神經網路與深度學習》課程筆記(4)– 淺層神經網路吳恩達神經網路深度學習筆記

- 吳恩達《神經網路與深度學習》課程筆記(5)– 深層神經網路吳恩達神經網路深度學習筆記