Overview:

In TCP, three values govern how much data can be sent: the sender's window, the receiver's window, and the congestion window.

This article analyzes how each MPTCP subflow handles the receive window, rcv_wnd.

Initializing the receive window

As described in "MPTCP Source Code Analysis (2): Establishing Subflows", the server creates the new sock after it has sent the SYN/ACK and received the ACK.

The kernel handles a connection request in two steps:

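- SYN queue: when the server receives a SYN, the connection request (a request_sock) is stored in the listening socket's SYN queue. The server then sends a SYN/ACK and waits for the matching ACK.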

- Accept queue: once the expected ACK arrives, the server creates a new socket and moves the connection request from the listening socket's SYN queue to its accept queue.
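The two-queue handling above can be shown with a small user-space model. This is a simplified sketch, not kernel code. The pending_req and listener types and the on_syn/on_ack helpers are hypothetical, and fixed-size arrays take the place of the kernel's real queue structures.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical, simplified pending connection request. */
struct pending_req {
	unsigned int id;       /* stands in for the connection 4-tuple */
	unsigned int rcv_wnd;  /* mirrors req->rcv_wnd */
};

#define QLEN 8

/* Hypothetical listening socket with a SYN queue and an accept queue. */
struct listener {
	struct pending_req syn_q[QLEN];
	size_t syn_len;
	struct pending_req accept_q[QLEN];
	size_t accept_len;
};

/* SYN received: park a new request in the SYN queue (a SYN/ACK would go out here). */
bool on_syn(struct listener *l, unsigned int id)
{
	if (l->syn_len == QLEN)
		return false;                   /* SYN queue full: drop */
	l->syn_q[l->syn_len].id = id;
	l->syn_q[l->syn_len].rcv_wnd = 0;   /* as in tcp_openreq_init() */
	l->syn_len++;
	return true;
}

/* Final ACK received: move the matching request to the accept queue. */
bool on_ack(struct listener *l, unsigned int id)
{
	for (size_t i = 0; i < l->syn_len; i++) {
		if (l->syn_q[i].id != id)
			continue;
		if (l->accept_len == QLEN)
			return false;           /* accept queue full */
		l->accept_q[l->accept_len++] = l->syn_q[i];
		/* drop from the SYN queue by moving the last entry into slot i */
		l->syn_q[i] = l->syn_q[--l->syn_len];
		return true;
	}
	return false;                           /* no matching SYN */
}
```

An unmatched ACK just returns false here. The real kernel covers more cases, such as SYN cookies, retransmitted SYN/ACKs, and queue-overflow policy, and this model leaves them out.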

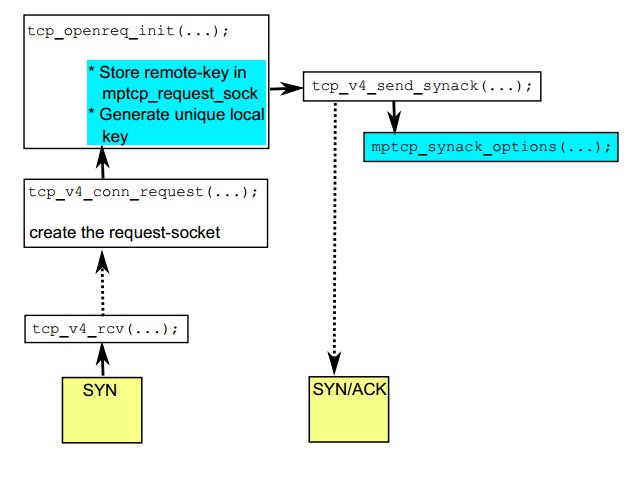

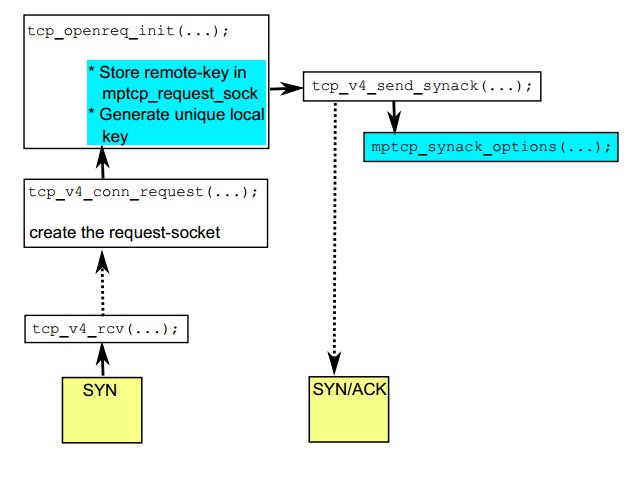

Once the server is in the LISTEN state, the first SYN it receives is processed as follows.

The detailed call chain is:

tcp_v4_rcv => tcp_v4_do_rcv => tcp_rcv_state_process => mptcp_conn_request => tcp_v4_conn_request => tcp_conn_request => tcp_openreq_init

tcp_conn_request allocates memory for the request_sock (net/ipv4/tcp_input.c, line 6097):

```c
req = inet_reqsk_alloc(rsk_ops);
if (!req)
	goto drop;
```

tcp_openreq_init then initializes the request_sock:

```c
1226 static inline void tcp_openreq_init(struct request_sock *req,
1227 				    struct tcp_options_received *rx_opt,
1228 				    struct sk_buff *skb)
1229 {
1230 	struct inet_request_sock *ireq = inet_rsk(req);
1231 
1232 	req->rcv_wnd = 0;		/* So that tcp_send_synack() knows! */
1233 	req->cookie_ts = 0;
1234 	tcp_rsk(req)->rcv_isn = TCP_SKB_CB(skb)->seq;
1235 	tcp_rsk(req)->rcv_nxt = TCP_SKB_CB(skb)->seq + 1;
1236 	tcp_rsk(req)->snt_synack = 0;
1237 	req->mss = rx_opt->mss_clamp;
1238 	req->ts_recent = rx_opt->saw_tstamp ? rx_opt->rcv_tsval : 0;
1239 	ireq->tstamp_ok = rx_opt->tstamp_ok;
1240 	ireq->sack_ok = rx_opt->sack_ok;
1241 	ireq->snd_wscale = rx_opt->snd_wscale;
1242 	ireq->wscale_ok = rx_opt->wscale_ok;
1243 	ireq->acked = 0;
1244 	ireq->ecn_ok = 0;
1245 	ireq->mptcp_rqsk = 0;
1246 	ireq->saw_mpc = 0;
1247 	ireq->ir_rmt_port = tcp_hdr(skb)->source;
1248 	ireq->ir_num = ntohs(tcp_hdr(skb)->dest);
1249 }
```

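Line 1232 sets the request_sock's rcv_wnd to 0. According to its inline comment, this zero value serves as a signal to tcp_send_synack().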

When the ACK arrives, the server creates the corresponding socket by calling tcp_create_openreq_child, declared in include/net/tcp.h (line 578) as:

```c
struct sock *tcp_create_openreq_child(struct sock *sk,
				      struct request_sock *req,
				      struct sk_buff *skb);
```

The function handles rcv_wnd as follows (net/ipv4/tcp_minisocks.c, lines 512-515):

```c
newtp->window_clamp = req->window_clamp;
newtp->rcv_ssthresh = req->rcv_wnd;
newtp->rcv_wnd = req->rcv_wnd;
newtp->rx_opt.wscale_ok = ireq->wscale_ok;
```

This phase is the three-way handshake for the first MPTCP subflow, so the socket created here is the master, not a slave.
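The rcv_wnd hand-off traced above can be modeled in a few lines. The value starts at zero in tcp_openreq_init and is later copied by tcp_create_openreq_child. The structs and function names below are hypothetical. In particular, mock_choose_synack_window is an assumption that stands in for the SYN/ACK path the comment on line 1232 alludes to. Its round-down-to-MSS rule is only illustrative and is not taken from the kernel.

```c
#include <stdint.h>

/* Hypothetical stand-in for the request_sock fields discussed above. */
struct mock_req {
	uint32_t rcv_wnd;
	uint32_t window_clamp;
};

/* Hypothetical stand-in for the child tcp_sock fields. */
struct mock_child_sock {
	uint32_t rcv_wnd;
	uint32_t rcv_ssthresh;
	uint32_t window_clamp;
};

/* Mirrors line 1232 of tcp_openreq_init(): the window starts at 0. */
void mock_openreq_init(struct mock_req *req)
{
	req->rcv_wnd = 0;
}

/* ASSUMPTION: illustrative SYN/ACK step, not the kernel's rule. It picks a
 * window only while rcv_wnd is still 0, rounding the clamped space down to
 * a multiple of mss (mss must be > 0). */
void mock_choose_synack_window(struct mock_req *req, uint32_t space, uint32_t mss)
{
	if (req->rcv_wnd != 0)
		return;                 /* already chosen */
	if (space > req->window_clamp)
		space = req->window_clamp;
	req->rcv_wnd = (space / mss) * mss;
}

/* Mirrors the tcp_create_openreq_child() lines quoted above. */
void mock_create_child(struct mock_child_sock *newtp, const struct mock_req *req)
{
	newtp->window_clamp = req->window_clamp;
	newtp->rcv_ssthresh = req->rcv_wnd;
	newtp->rcv_wnd = req->rcv_wnd;
}
```

The model makes one point: the child socket never computes its own starting window. It inherits both rcv_wnd and rcv_ssthresh from whatever value the request carried.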

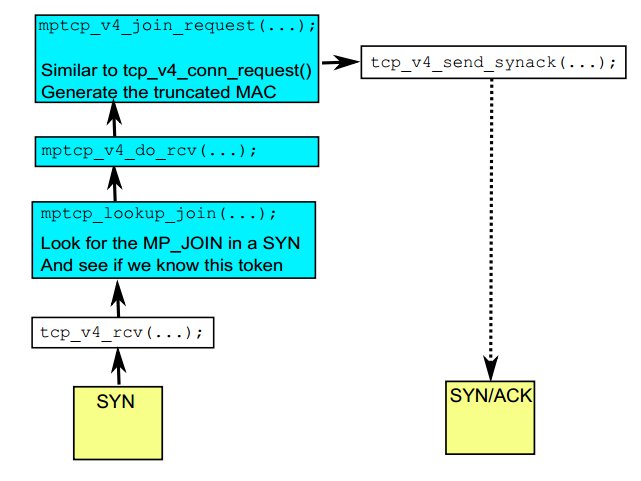

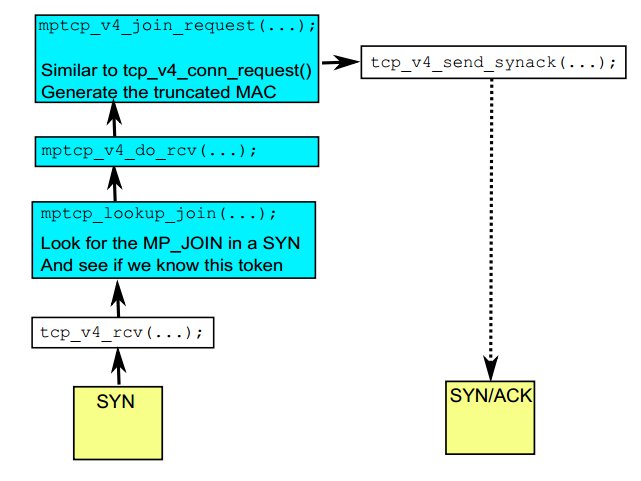

The next scenario is the setup of an additional subflow. When the server receives that subflow's first SYN, the calls are as follows.

mptcp_v4_join_request allocates and initializes the request_sock through this call chain:

mptcp_v4_join_request => tcp_conn_request => inet_reqsk_alloc => tcp_openreq_init

When the client's ACK arrives, the kernel copies the request_sock's rcv_wnd into the slave subsocket.

How the receive windows of the master sock and slave socks relate

Every segment TCP sends advertises its own receive window to the peer. MPTCP implements this as follows (net/mptcp/mptcp_output.c, line 992):

```c
992 u16 mptcp_select_window(struct sock *sk)
993 {
994 	u16 new_win = tcp_select_window(sk);
995 	struct tcp_sock *tp = tcp_sk(sk);
996 	struct tcp_sock *meta_tp = mptcp_meta_tp(tp);
997 
998 	meta_tp->rcv_wnd = tp->rcv_wnd;
999 	meta_tp->rcv_wup = meta_tp->rcv_nxt;
1000 
1001 	return new_win;
1002 }
```

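Line 994 computes the new window, which the function returns. Line 998 copies the subflow's rcv_wnd into the meta socket (meta_tp), and line 999 resets the meta socket's rcv_wup to its rcv_nxt.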
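Lines 998 and 999 can be illustrated with two subflows attached to one meta socket. This is a hedged model. mock_sock keeps only the fields that mptcp_select_window() touches. select_window_stub is a hypothetical stand-in for tcp_select_window() that records a caller-chosen window instead of computing one.

```c
#include <stdint.h>

/* Hypothetical model: only the fields used by mptcp_select_window(). */
struct mock_sock {
	uint32_t rcv_wnd;
	uint32_t rcv_nxt;
	uint32_t rcv_wup;
	struct mock_sock *meta;   /* NULL for the meta socket itself */
};

/* ASSUMPTION: stand-in for tcp_select_window(). It stores the chosen
 * window on the subflow and returns it unscaled. */
static uint16_t select_window_stub(struct mock_sock *tp, uint16_t chosen)
{
	tp->rcv_wnd = chosen;
	return chosen;
}

/* Mirrors mptcp_select_window(): after the subflow picks its window,
 * copy it into the meta socket and advance the meta-level rcv_wup. */
uint16_t mock_mptcp_select_window(struct mock_sock *tp, uint16_t chosen)
{
	uint16_t new_win = select_window_stub(tp, chosen);
	struct mock_sock *meta_tp = tp->meta;

	meta_tp->rcv_wnd = tp->rcv_wnd;
	meta_tp->rcv_wup = meta_tp->rcv_nxt;
	return new_win;
}
```

In this model, the meta-level rcv_wnd always equals the window most recently advertised on any subflow.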

tcp_select_window, called on line 994, is implemented as follows (net/ipv4/tcp_output.c, line 275):

```c
275 u16 tcp_select_window(struct sock *sk)
276 {
277 	struct tcp_sock *tp = tcp_sk(sk);
278 	/* The window must never shrink at the meta-level. At the subflow we
279 	 * have to allow this. Otherwise we may announce a window too large
280 	 * for the current meta-level sk_rcvbuf.
281 	 */
282 	u32 cur_win = tcp_receive_window(mptcp(tp) ? tcp_sk(mptcp_meta_sk(sk)) : tp);
283 	u32 new_win = tp->__select_window(sk);
...
```

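Line 282 uses tcp_receive_window() to measure the window currently on offer, applied to the meta socket when MPTCP is active. Line 283 then asks __select_window() for a new value, and the excerpt stops there. Assuming this MPTCP tree keeps the upstream remainder of tcp_select_window(), the rest of the function does four things: it never lets the new window fall below the current one, stores the result in rcv_wnd and rcv_wup, caps it, and applies window scaling. The sketch below models those steps in user space. mock_rcv_state, mock_receive_window, and mock_select_window are hypothetical names, and the signed-window workaround sysctl, MIB counters, and fast-path handling are omitted.

```c
#include <stdint.h>

/* Hypothetical subset of tcp_sock fields. */
struct mock_rcv_state {
	uint32_t rcv_wup;    /* rcv_nxt at the last window update */
	uint32_t rcv_wnd;    /* window advertised at that update */
	uint32_t rcv_nxt;    /* next sequence number expected */
	uint8_t rcv_wscale;  /* our window-scale shift */
};

/* Same arithmetic as the kernel's tcp_receive_window(): the part of the
 * previously advertised window that has not been consumed yet. */
uint32_t mock_receive_window(const struct mock_rcv_state *tp)
{
	int32_t win = (int32_t)(tp->rcv_wup + tp->rcv_wnd - tp->rcv_nxt);

	return win < 0 ? 0 : (uint32_t)win;
}

/* ASSUMPTION: models the upstream remainder of tcp_select_window().
 * cur_tp is the meta socket under MPTCP, tp is the subflow, and new_win is
 * the value proposed by __select_window(). Returns the scaled 16-bit field. */
uint16_t mock_select_window(struct mock_rcv_state *tp,
			    const struct mock_rcv_state *cur_tp, uint32_t new_win)
{
	uint32_t cur_win = mock_receive_window(cur_tp);
	uint32_t unit = 1U << tp->rcv_wscale;

	/* Never shrink: round cur_win up to a multiple of the scale unit. */
	if (new_win < cur_win)
		new_win = (cur_win + unit - 1) & ~(unit - 1);

	tp->rcv_wnd = new_win;
	tp->rcv_wup = tp->rcv_nxt;

	/* Cap at the largest value the 16-bit field can carry, then scale. */
	if (new_win > (65535U << tp->rcv_wscale))
		new_win = 65535U << tp->rcv_wscale;
	return (uint16_t)(new_win >> tp->rcv_wscale);
}
```

Measuring cur_win on the meta socket means that a subflow with a smaller proposal still advertises at least what the connection as a whole has already promised.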
For the __select_window() call on line 283, MPTCP provides this implementation (net/mptcp/mptcp_output.c, line 771):

```c
u32 __mptcp_select_window(struct sock *sk)
{
	struct inet_connection_sock *icsk = inet_csk(sk);
	struct tcp_sock *tp = tcp_sk(sk), *meta_tp = mptcp_meta_tp(tp);
	struct sock *meta_sk = mptcp_meta_sk(sk);
	int mss, free_space, full_space, window;

	/* MSS for the peer's data. Previous versions used mss_clamp
	 * here. I don't know if the value based on our guesses
	 * of peer's MSS is better for the performance. It's more correct
	 * but may be worse for the performance because of rcv_mss
	 * fluctuations. --SAW 1998/11/1
	 */
	mss = icsk->icsk_ack.rcv_mss;
	free_space = tcp_space(meta_sk);
	full_space = min_t(int, meta_tp->window_clamp,
			   tcp_full_space(meta_sk));

	if (mss > full_space)
		mss = full_space;

	if (free_space < (full_space >> 1)) {
		icsk->icsk_ack.quick = 0;

		if (tcp_memory_pressure)
			/* TODO this has to be adapted when we support different
			 * MSS's among the subflows.
			 */
			meta_tp->rcv_ssthresh = min(meta_tp->rcv_ssthresh,
						    4U * meta_tp->advmss);

		if (free_space < mss)
			return 0;
	}

	if (free_space > meta_tp->rcv_ssthresh)
		free_space = meta_tp->rcv_ssthresh;

	/* Don't do rounding if we are using window scaling, since the
	 * scaled window will not line up with the MSS boundary anyway.
	 */
	window = meta_tp->rcv_wnd;
	if (tp->rx_opt.rcv_wscale) {
		window = free_space;

		/* Advertise enough space so that it won't get scaled away.
		 * Import case: prevent zero window announcement if
		 * 1<<rcv_wscale > mss.
		 */
		if (((window >> tp->rx_opt.rcv_wscale) << tp->
		     rx_opt.rcv_wscale) != window)
			window = (((window >> tp->rx_opt.rcv_wscale) + 1)
				  << tp->rx_opt.rcv_wscale);
	} else {
		/* Get the largest window that is a nice multiple of mss.
		 * Window clamp already applied above.
		 * If our current window offering is within 1 mss of the
		 * free space we just keep it. This prevents the divide
		 * and multiply from happening most of the time.
		 * We also don't do any window rounding when the free space
		 * is too small.
		 */
		if (window <= free_space - mss || window > free_space)
			window = (free_space / mss) * mss;
		else if (mss == full_space &&
			 free_space > window + (full_space >> 1))
			window = free_space;
	}

	return window;
}
```

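The computed window depends on:

- the MSS received from the peer (icsk->icsk_ack.rcv_mss);

- the total buffer space of the meta socket (tcp_full_space(meta_sk), further limited by meta_tp->window_clamp);

- the free buffer space of the meta socket (tcp_space(meta_sk));

- meta_tp->rcv_ssthresh (the current window clamp).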
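To see how these inputs interact, the arithmetic of __mptcp_select_window() can be lifted into a pure user-space function. This is a hedged sketch. The struct win_inputs and the function name are hypothetical. The kernel's side effects (clearing icsk_ack.quick and lowering rcv_ssthresh under memory pressure) are left out. The caller supplies the values the kernel reads from tcp_space(meta_sk), tcp_full_space(meta_sk), and meta_tp.

```c
/* Hypothetical inputs; each comment names where the kernel gets the value. */
struct win_inputs {
	int mss;           /* icsk->icsk_ack.rcv_mss (per subflow), assumed > 0 */
	int free_space;    /* tcp_space(meta_sk) */
	int full_space;    /* min(meta_tp->window_clamp, tcp_full_space(meta_sk)) */
	int rcv_ssthresh;  /* meta_tp->rcv_ssthresh */
	int rcv_wnd;       /* meta_tp->rcv_wnd */
	int rcv_wscale;    /* tp->rx_opt.rcv_wscale (per subflow) */
};

/* Same branch structure as __mptcp_select_window(), without side effects. */
int model_mptcp_select_window(struct win_inputs in)
{
	int mss = in.mss, free_space = in.free_space, window;

	if (mss > in.full_space)
		mss = in.full_space;

	/* Under half the buffer free and less than one MSS: zero window. */
	if (free_space < (in.full_space >> 1) && free_space < mss)
		return 0;

	if (free_space > in.rcv_ssthresh)
		free_space = in.rcv_ssthresh;

	window = in.rcv_wnd;
	if (in.rcv_wscale) {
		/* Round free_space up to a multiple of 1 << rcv_wscale. */
		window = free_space;
		if (((window >> in.rcv_wscale) << in.rcv_wscale) != window)
			window = ((window >> in.rcv_wscale) + 1) << in.rcv_wscale;
	} else if (window <= free_space - mss || window > free_space) {
		/* Old window not within one MSS of free space: round down to MSS. */
		window = (free_space / mss) * mss;
	} else if (mss == in.full_space &&
		   free_space > window + (in.full_space >> 1)) {
		window = free_space;
	}
	return window;
}
```

Every buffer-related input here comes from the meta socket. As a result, two subflows with the same MSS and window-scale factor compute the same window from the same meta state.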

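Compared with the regular __tcp_select_window, MPTCP takes free_space, full_space, rcv_ssthresh, and the starting rcv_wnd from meta_tp/meta_sk rather than from the subflow's tp. Only the MSS and the window-scale factor are per-subflow. The master sock and the other slave socks therefore all advertise windows derived from the same meta-level receive buffer and rcv_wnd.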

Conclusion:

1. The master sock and the other slave socks share the meta socket's receive buffer and rcv_wnd.